
How to Make 2500 HTTP Requests in 2 Seconds with Async & Await


This is a comparison of two ways to make several thousand HTTP requests in just a few seconds in Python: async/await with asyncio and aiohttp, versus threads with concurrent.futures. Learning how to do this, and understanding how it works, will help you when running your own servers and web services and when stress-testing any API environments you offer.
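For reference, here is a minimal sketch of both approaches side by side. It is not the code from the video. To stay dependency-free and avoid hammering a real API, it makes these assumptions: it starts a throwaway local server, and instead of aiohttp it sends raw HTTP/1.1 requests over `asyncio.open_connection`. The helper names (`start_server`, `run_threads`, `run_async`) and the concurrency limits (50 workers, 100 open sockets) are hypothetical choices for illustration.

```python
import asyncio
import threading
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class OkHandler(BaseHTTPRequestHandler):
    """Local test endpoint (assumption): answers every GET with a tiny 200."""

    def do_GET(self):
        body = b"ok"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):  # keep the console quiet
        pass


class LocalServer(ThreadingHTTPServer):
    request_queue_size = 1024  # larger listen backlog for bursts of connects


def start_server():
    """Hypothetical helper: serve OkHandler on a free port in a daemon thread."""
    server = LocalServer(("127.0.0.1", 0), OkHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


# Approach 1: blocking requests fanned out over a thread pool.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))  # ignore proxy env vars


def fetch_blocking(url):
    with _opener.open(url, timeout=10) as resp:
        resp.read()
        return resp.status


def run_threads(url, n, workers=50):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(fetch_blocking, [url] * n))


# Approach 2: one event loop; each request yields at every await on the socket.
async def fetch_async(host, port, sem):
    async with sem:  # cap how many sockets are open at once
        reader, writer = await asyncio.open_connection(host, port)
        request = f"GET / HTTP/1.1\r\nHost: {host}:{port}\r\nConnection: close\r\n\r\n"
        writer.write(request.encode("ascii"))
        await writer.drain()
        status_line = await reader.readline()  # e.g. b"HTTP/1.0 200 OK\r\n"
        await reader.read()  # consume headers and body until the server closes
        writer.close()
        await writer.wait_closed()
        return int(status_line.split()[1])


async def run_async(host, port, n, limit=100):
    sem = asyncio.Semaphore(limit)
    return await asyncio.gather(*(fetch_async(host, port, sem) for _ in range(n)))


if __name__ == "__main__":
    server = start_server()
    host, port = server.server_address
    n = 500
    t0 = time.perf_counter()
    thread_codes = run_threads(f"http://{host}:{port}/", n)
    t1 = time.perf_counter()
    async_codes = asyncio.run(run_async(host, port, n))
    t2 = time.perf_counter()
    print(f"threads: {thread_codes.count(200)}/{n} OK in {t1 - t0:.2f}s")
    print(f"asyncio: {async_codes.count(200)}/{n} OK in {t2 - t1:.2f}s")
    server.shutdown()
    server.server_close()
```

With aiohttp, the body of `fetch_async` would typically become an `async with session.get(url) as resp:` block on one shared `aiohttp.ClientSession`, which also reuses connections. The semaphore pattern carries over unchanged. Timings depend on the machine and the server, so treat the printed numbers only as a relative comparison, not as a benchmark.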


How to Make 2500 HTTP Requests in 2 Seconds with Async & Await

You Should Make Your OWN HTTP Clients for APIs (Shopify x Python)

How To Best Make HTTP Requests In React JS

How To Make Money Online Fast And Free In 2024

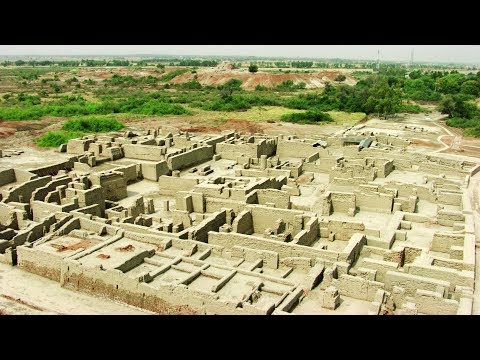

Mohenjo Daro 101 | National Geographic

How To Make A HTTP Request With Axios #shorts

Invest 2500 rupees to get 2.5 Lakhs as a Passive Income per Month | Secrets of SWP | Mutual fund |

http://www.PushButtonInvestor.com Shows You How to Make $2500-$5000 PER MONTH!

Dashers Are MAKING $2500 Every WEEK Using THIS SIMPLE METHOD! (DoorDash Driver Earnings)

I Live Better In Thailand Than I Did In The U.S. - Here's How Much It Costs | Relocated

A Simple Trick to Get Into Your Car If You Locked Your Keys Inside

Make Http Requests in Go Language

The Mummification of Seti I | Ultimate Treasure Countdown

How to Make an Ancient Sword FROM SCRATCH: the Egyptian KHOPESH

How to make an http request from a MySQL database?

60-Second Video Tips: The Best Way to Shuck an Oyster

How to Make a DIY Air Filter | Ask This Old House

How to - Preform an Oil Pressure Test // Oil Pressure Tester

How much your Social Security benefits will be if you make $30,000, $35,000 or $40,000

Science: Secrets to Making & Baking the Best Gluten-Free Pizza Dough

Clear Water Maintenance for Small Pools up to 5,000 Gallons: Clorox Pool&Spa

The EASIEST Way To Make Money Online In 2018 As A BEGINNER With NO MONEY

Diesel Engine Exhaust Braking | Ford How-To | Ford

Configurable Daytime Running Lamps | Ford How-To | Ford

Комментарии

0:04:27

0:04:27

0:12:07

0:12:07

0:00:33

0:00:33

0:11:26

0:11:26

0:03:15

0:03:15

0:00:59

0:00:59

0:11:51

0:11:51

0:09:57

0:09:57

0:11:04

0:11:04

0:08:49

0:08:49

0:08:26

0:08:26

0:14:07

0:14:07

0:03:33

0:03:33

0:14:04

0:14:04

0:02:08

0:02:08

0:01:25

0:01:25

0:07:41

0:07:41

0:01:47

0:01:47

0:02:31

0:02:31

0:04:02

0:04:02

0:02:09

0:02:09

0:17:00

0:17:00

0:01:52

0:01:52

0:01:18

0:01:18