The First Neural Networks

Показать описание

The First Neural Networks

But what is a neural network? | Chapter 1, Deep learning

Neural Network In 5 Minutes | What Is A Neural Network? | How Neural Networks Work | Simplilearn

Explained In A Minute: Neural Networks

I Built a Neural Network from Scratch

Python Tutorial: Your first neural network

How to Build Your First Neural Network in Python and Keras

The Essential Main Ideas of Neural Networks

AI Foundations: Intro to Recurrent Neural Networks

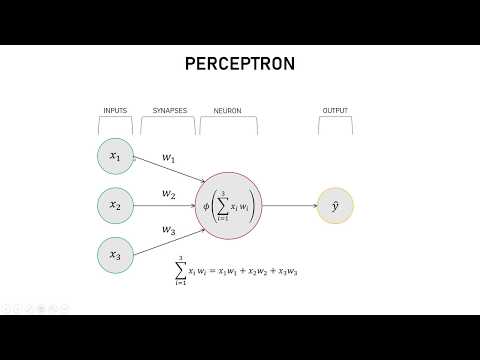

Perceptron | Neural Networks

How to Create a Neural Network (and Train it to Identify Doodles)

Training my First NEURAL NETWORK in C++ - AI Devlog

Beginner Intro to Neural Networks 4: First Neural Network in Python

A friendly introduction to Deep Learning and Neural Networks

The Neural Network, A Visual Introduction

Building a neural network FROM SCRATCH (no Tensorflow/Pytorch, just numpy & math)

Neural Networks Explained from Scratch using Python

Future Computers Will Be Radically Different (Analog Computing)

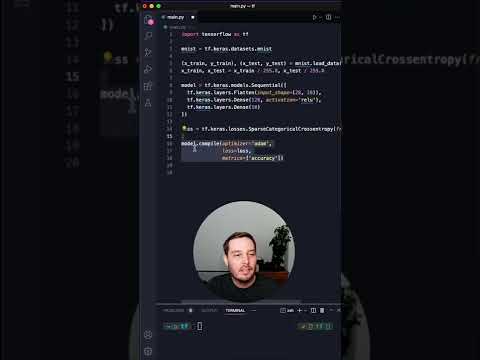

How to create your FIRST NEURAL NETWORK with TensorFlow!

Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

Neural Networks explained in 60 seconds!

Create a Simple Neural Network in Python from Scratch

Artificial neural networks (ANN) - explained super simple

First Ancient Neural Network in C

Комментарии

0:18:52

0:18:52

0:18:40

0:18:40

0:05:45

0:05:45

0:01:04

0:01:04

0:09:15

0:09:15

0:04:06

0:04:06

0:18:01

0:18:01

0:18:54

0:18:54

1:10:12

1:10:12

0:08:47

0:08:47

0:54:51

0:54:51

0:07:19

0:07:19

0:05:52

0:05:52

0:33:20

0:33:20

0:13:52

0:13:52

0:31:28

0:31:28

0:17:38

0:17:38

0:21:42

0:21:42

0:00:50

0:00:50

0:36:15

0:36:15

0:01:00

0:01:00

0:14:15

0:14:15

0:26:14

0:26:14

2:00:48

2:00:48