Python Tutorial: Your first neural network

---

It's time to start building some neural networks!

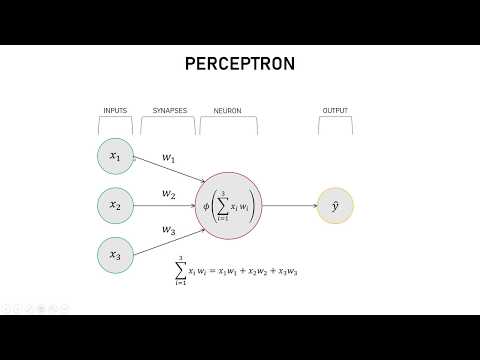

A neural network is a machine learning algorithm in which the training data is fed into the input layer and the predicted value is read from the output layer.

Each connection from one neuron to another has an associated weight, w. Each neuron, except those in the input layer, which just hold the input values, also has an extra weight called the bias weight, b. During the feed-forward pass, our input is transformed at each layer by weight multiplications and additions, and the output of each neuron can also be transformed by applying what's called an activation function.
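As a rough illustration of the feed-forward step just described, here is a minimal pure-Python sketch of one fully connected layer: each neuron computes a weighted sum of its inputs, adds its bias b, and passes the result through an activation function. The function names, the weight layout W[j][i] (weight from input i to neuron j), the example numbers, and the choice of ReLU as the activation are all assumptions for illustration; Keras performs the equivalent computation with optimized tensor operations.

```python
def relu(z):
    """ReLU activation (assumed choice): keeps positive values, clips negatives to 0."""
    return max(0.0, z)


def dense_forward(x, W, b, activation=relu):
    """Feed-forward through one fully connected layer (hypothetical helper).

    x: list of input values
    W: W[j][i] is the weight on the connection from input i to neuron j
    b: b[j] is the bias weight of neuron j
    """
    outputs = []
    for w_row, b_j in zip(W, b):
        # Weighted sum of the inputs plus this neuron's bias
        z = sum(w_i * x_i for w_i, x_i in zip(w_row, x)) + b_j
        # Optional non-linear transformation of the neuron's output
        outputs.append(activation(z))
    return outputs


# 3 input values feeding a layer of 2 neurons (illustrative numbers)
x = [1.0, 2.0, 3.0]
W = [[0.1, 0.2, 0.3],
     [-0.5, 0.0, 0.1]]
b = [0.5, -0.2]
out = dense_forward(x, W, b)
print(out)  # roughly [1.9, 0.0]: the second neuron's negative sum is clipped by ReLU
```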

Learning in a neural network consists of tuning its weights, or parameters, so that it produces the desired output. One way to achieve this is the well-known gradient descent algorithm, using a process known as back-propagation to work out how each weight should change and applying those updates incrementally. That was a lot of theory! As we will see now, the code in Keras is much simpler.
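To make the idea of learning concrete, the sketch below fits a single linear neuron, y = w·x + b, with plain batch gradient descent on the mean squared error. For a single neuron, back-propagation reduces to applying the chain rule to get the gradient of the loss with respect to w and b. The function name, learning rate, epoch count, and toy data are illustrative assumptions; this is a hand-written illustration, not Keras code.

```python
def train_single_neuron(xs, ys, lr=0.05, epochs=1000):
    """Fit y ~ w*x + b with plain gradient descent on the mean squared error (hypothetical helper)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Forward pass: predictions with the current weights
        preds = [w * x + b for x in xs]
        # Backward pass: gradients of the MSE with respect to w and b (chain rule)
        errors = [p - y for p, y in zip(preds, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Update step: move each weight a small step against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Toy data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]
w, b = train_single_neuron(xs, ys)
print(w, b)  # close to 2 and 1
```

The learning rate controls the size of each update: too large and the weights can overshoot and diverge, too small and many more epochs are needed.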

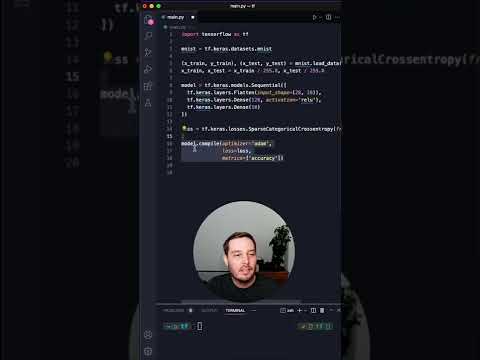

Keras allows you to build models in two different ways: using either the Functional API or the Sequential API. We will focus on the Sequential API. This is a simple yet very powerful way of building neural networks that will cover most use cases. With the Sequential API, you're essentially building a model as a stack of layers: you start with an input layer, add a couple of hidden layers, and finally end your model with an output layer. Let's go through a code example.

To create a simple neural network, we'd do the following:
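Here is a minimal sketch of such a model, assuming TensorFlow 2.x with its bundled Keras: a hidden layer of 2 neurons that also declares a 3-value input via input_shape, followed by a 1-neuron output layer. The layer sizes are assumptions chosen to match the parameter count discussed later in this section; the exact code shown in the video may differ. Recent Keras versions may print a warning suggesting keras.Input(shape=(3,)) as the first layer instead, but they still accept input_shape.

```python
from tensorflow import keras

# A Sequential model is a linear stack of layers
model = keras.Sequential()

# Hidden layer with 2 neurons; input_shape=(3,) also defines the 3-value input layer
model.add(keras.layers.Dense(2, input_shape=(3,)))

# Output layer with a single neuron (assumed size)
model.add(keras.layers.Dense(1))
```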

To add an activation function to a layer, we can use the activation argument.

For instance, we'd add a ReLU activation to our hidden layer by passing activation="relu" when creating it. Don't worry about the choice of activation functions; that will be covered later in the course.
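Adding the activation only changes the hidden layer's definition. A minimal sketch, assuming TensorFlow 2.x with its bundled Keras, a 3-value input, 2 hidden neurons, and a 1-neuron output layer (the sizes are illustrative assumptions):

```python
from tensorflow import keras

model = keras.Sequential()

# Hidden layer: 2 neurons, 3-value input, ReLU applied to each neuron's output
model.add(keras.layers.Dense(2, input_shape=(3,), activation="relu"))

# Output layer with a single neuron and no activation (linear output)
model.add(keras.layers.Dense(1))
```

An activation function adds no weights, so the parameter counts stay the same as without it.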

Once we've created our model, we can call its summary() method. This displays a table with 3 columns: the first shows each layer's name and type, the second the shape of the outputs produced by each layer, and the third the number of parameters, that is, the weights of each neuron in the layer, including its bias weight.
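A minimal sketch of calling summary(), assuming TensorFlow 2.x with its bundled Keras and the same illustrative layer sizes (3 inputs, 2 hidden neurons, 1 output). The layer names Keras assigns (dense, dense_1, and so on) depend on how many layers were created earlier in the session, so only the parameter counts are stated below. The loop uses count_params() to read the same numbers programmatically.

```python
from tensorflow import keras

model = keras.Sequential()
model.add(keras.layers.Dense(2, input_shape=(3,), activation="relu"))
model.add(keras.layers.Dense(1))

# Print the table: layer name and type, output shape, number of parameters
model.summary()

# The same parameter counts, read layer by layer
counts = [layer.count_params() for layer in model.layers]
for layer, count in zip(model.layers, counts):
    print(layer.name, count)
print("Total parameters:", model.count_params())  # 8 + 3 = 11
```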

When the input layer is defined via the input_shape parameter, as we did before, it is not shown as a separate layer in the summary; instead, it is included in the layer where it was defined, in this case the dense_3 layer.

That's why this layer has 8 parameters: 6 weights come from the connections between the 3 input neurons and the 2 neurons in this layer, and the remaining 2 parameters are the bias weights, b0 and b1, one for each neuron in the hidden layer.

These add up to 8 parameters, just what we had in our summary. It's time to code! Let's build some networks.
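The parameter arithmetic above generalizes to any fully connected (Dense) layer: one weight per connection (inputs × neurons) plus one bias weight per neuron. A tiny helper, whose name is hypothetical, reproduces the 8 parameters of the hidden layer; the second call applies the same rule to an assumed 1-neuron output layer fed by those 2 hidden neurons.

```python
def dense_param_count(n_inputs, n_neurons):
    """Parameters in a fully connected layer (hypothetical helper)."""
    weights = n_inputs * n_neurons  # every input connects to every neuron
    biases = n_neurons              # one bias weight b per neuron
    return weights + biases


# Hidden layer from the summary: 3 inputs feeding 2 neurons
print(dense_param_count(3, 2))  # 8 = 6 weights + 2 biases

# Assumed 1-neuron output layer fed by the 2 hidden neurons
print(dense_param_count(2, 1))  # 3 = 2 weights + 1 bias
```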
