filmov

tv

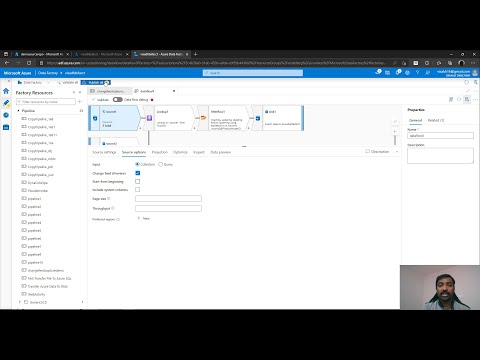

Azure Data Factory - Incremental Load or Delta Load for Multiple SQL Tables in ADF


Azure Data Factory - Copy multiple SQL tables incrementally using a watermark table (Delta Load) ADF

This video shows how to incrementally copy multiple SQL tables from one database to another.
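The watermark idea can be sketched outside ADF in a few lines of Python. This is only an illustration of the general delta-load logic, not the pipeline built in the video: it uses in-memory SQLite databases as stand-ins for the source and destination, and the table names (`customers`, `orders`, `watermark`), the `modified` column, the choice to keep the watermark table in the destination, and the helper names are all assumptions made for the example. For each table it looks up the old watermark, reads the new watermark from the source, copies only the rows in between, and then stores the new watermark.

```python
import sqlite3


def make_demo():
    """Build hypothetical source/destination databases for the sketch."""
    src = sqlite3.connect(":memory:")
    dst = sqlite3.connect(":memory:")
    for db in (src, dst):
        db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, modified TEXT)")
        db.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, modified TEXT)")
    # Assumption: the watermark table lives in the destination, one row per table.
    dst.execute("CREATE TABLE watermark (table_name TEXT PRIMARY KEY, watermark_value TEXT)")
    dst.executemany(
        "INSERT INTO watermark VALUES (?, ?)",
        [("customers", "1900-01-01 00:00:00"), ("orders", "1900-01-01 00:00:00")],
    )
    src.executemany(
        "INSERT INTO customers VALUES (?, ?, ?)",
        [(1, "Ann", "2024-01-01 10:00:00"), (2, "Bob", "2024-01-02 10:00:00")],
    )
    src.execute("INSERT INTO orders VALUES (1, 9.5, '2024-01-01 12:00:00')")
    return src, dst


def incremental_copy(src, dst, tables):
    """Copy rows changed since the stored watermark; return rows copied per table.

    `tables` maps a table name to its change-tracking column. Both come from
    trusted configuration here, since they are interpolated into the SQL text.
    """
    copied = {}
    for table, column in tables.items():
        # 1. Old watermark: the value recorded by the previous run.
        old = dst.execute(
            "SELECT watermark_value FROM watermark WHERE table_name = ?", (table,)
        ).fetchone()[0]
        # 2. New watermark: the latest change timestamp currently in the source.
        new = src.execute(f"SELECT MAX({column}) FROM {table}").fetchone()[0]
        if new is None or new <= old:
            copied[table] = 0  # nothing new since the last run
            continue
        # 3. Copy only the delta: rows with old < timestamp <= new.
        rows = src.execute(
            f"SELECT * FROM {table} WHERE {column} > ? AND {column} <= ?", (old, new)
        ).fetchall()
        if rows:
            placeholders = ", ".join("?" * len(rows[0]))
            dst.executemany(
                f"INSERT OR REPLACE INTO {table} VALUES ({placeholders})", rows
            )
        # 4. Store the new watermark so the next run starts from here.
        dst.execute(
            "UPDATE watermark SET watermark_value = ? WHERE table_name = ?", (new, table)
        )
        dst.commit()
        copied[table] = len(rows)
    return copied
```

Calling `incremental_copy(src, dst, {"customers": "modified", "orders": "modified"})` on the databases returned by `make_demo()` copies every row on the first run and only newly modified rows on later runs. The timestamps are compared as ISO-formatted strings, which sort correctly only when every value uses the same format.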

For a step-by-step explanation of the pipeline for a single SQL database, please refer to the video below:

For this video, we connected to the SQL database from an on-premises environment. To learn how to set up a self-hosted integration runtime, please refer to:

Check out my Udemy course on building an end-to-end project with Azure Data Factory and Azure Synapse Analytics.

Udemy course with coupon link:

My new course on complete Azure Synapse Analytics:

For a limited time, enrol through the link above to access the course at 50% off.


8.2 Incremental data load in Azure Data Factory #AzureDataEngineering #AzureETL #ADF

3. Incrementally copy new and changed files based on Last Modified Date in Azure Data Factory

#60.Azure Data Factory - Incremental Data Load using Lookup\Conditional Split

Azure Data Factory - Incremental Data Copy with Parameters

Azure Data Factory - Incremental load or Delta load using a watermark Table

Azure data factory || Incremental Load or Delta load from SQL to File Storage

Azure data engineering | learn incremental load pipeline in adf

Azure Data Factory - Incremental Load or Delta Load for Multiple SQL Tables in ADF

Incremental data load in Azure Data Factory

Azure data Engineer project | Incremental data load in Azure Data Factory

Incremental data loading with Azure Data Factory and Azure SQL DB

8.1 Incremental data load in Azure Data Factory #AzureDataEngineering #AzureETL #ADF

5.Multi tables Incremental load from Azure SQL to BLOB Storage #azuredatafactory #azuredataengineer

Azure Data Factory - Incremental Copy of Multiple Tables

What is Tumbling Window Trigger? Implementing Incremental Load in Azure Data Factory | ADF Tutorials

Azure Data Factory - Incremental data copy Copy files that are Created / Modified today

Data engineering Project : Incremental loading on azure !!

Azure Data Factory | Dynamic Incremental Load

Easily capture source changes with ADF

104. CDC (change data capture) Resource in Azure Data Factory | #adf #azuredatafactory #datafactory

48. How to Copy data from REST API to Storage account using Azure Data Factory | #adf #datafactory

Azure Data Factory - Incremental Load From SQL Managed Instance to Lake using Change data Capture

Capture Changed Data in Azure Data Factory - Handling deletions in Incremental Loads - 3 simple ways

Azure Data Factory (ADF) Quick Tip: Incremental Data Refresh in Cosmos DB – Change Feed Capture
