3. Incrementally copy new and changed files based on Last Modified Date in Azure Data Factory

In this video, I discuss how to incrementally copy new and changed files based on their last modified date in Azure Data Factory.

Link for Azure Functions playlist:

Link for Azure Basics playlist:

Link for Azure Data Factory playlist:

#Azure #ADF #AzureDataFactory

Incrementally Copy New and Changed Files Only between Containers in Azure Data Factory -ADF Tutorial

8.2 Incremental data load in Azure Data Factory #AzureDataEngineering #AzureETL #ADF

12. How to copy latest file or last modified file of ADLS folder using ADF pipeline

Azure Data Factory-Incrementally copy files based on time partitioned file name using Copy Data tool

Incrementally Load Files in Azure Data Factory by Looking Up Latest Modified Date in Destination

Azure Data Factory-Incrementally copy files based on LastModifiedDate by using the Copy Data tool

18. Copy multiple tables in bulk by using Azure Data Factory

Mathematical Typesetting in LaTeX || Emmanuel Dansu || Johnny Ebimo

20. Get Latest File from Folder and Process it in Azure Data Factory

25 Incrementally copy new and changed files based on Last Modified Date in ADF

Azure data factory || Incremental Load or Delta load from SQL to File Storage

7 Incrementally Extract Files in ADF #AzureDataEngineering #DatabricksETL #AzureETL #ADF

Azure Data Factory - Incrementally load data from Azure SQL to Azure Data Lake using Watermark

How to Create Increments in Excel : Microsoft Excel Tips

#60.Azure Data Factory - Incremental Data Load using Lookup\Conditional Split

Azure Data Factory | Copy multiple tables in Bulk with Lookup & ForEach

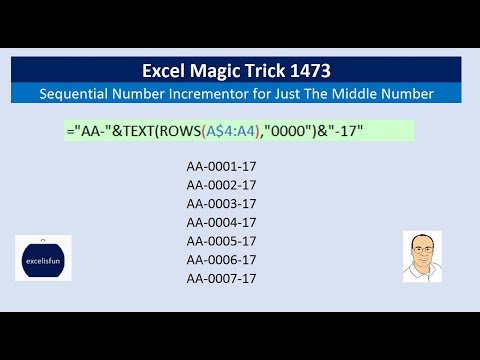

Excel Magic Trick 1473: Sequential Number Incrementor for Just The Middle Number: AA-0009-17

13. Add additional columns during copy in Azure Data Factory

Configuring Incremental Refresh in Power BI

How to Merge Multiple CSV Files into Single CSV File by using Copy Activity with Same Columns in ADF

Google Sheets Import Range | Multiple Sheets | Import Data | With Query Function

2. Get File Names from Source Folder Dynamically in Azure Data Factory

How RMAN incrementally updated backup works
