Moore’s Law is So Back.

According to Moore’s law, the number of transistors on a microchip should double roughly every two years (Moore’s original 1965 estimate was every year). Two years ago, Nvidia CEO Jensen Huang said that the law was “dead” because we're hitting physical limits for the miniaturization of transistors. Now, though, he’s reversed that claim, instead predicting that we're about to see a “Hyper” Moore’s law. Let’s take a look.

#science #sciencenews #tech #technews

Moore's Law is Dead — Welcome to Light Speed Computers

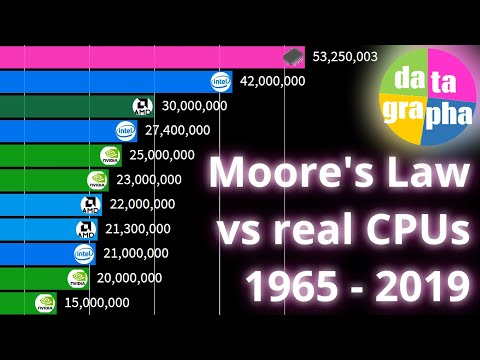

Moore's Law graphed vs real CPUs & GPUs 1965 - 2019

Moore's Law Misapplied

Is Moore's Law Finally Dead?

💻The Death of Moore's Law & the Future of Computing (Computer Science Debate)

This New Technology will Keep Moore’s Law Going!

Is Moore's Law Broken?

MOORE'S LAW is it coming to END #moore's law #technology #tech #processor #computing

Moore's Law - Defined

Moore's law is a series of revolutions | Erik Brynjolfsson and Lex Fridman

Is Moore's Law Really Dead? If So, What Does it Mean for Investors?

Moore’s Law Ending -Joe Rogan

Is Moore's Law Dead? in 2022

Moore's Law

The Extreme Physics Pushing Moore’s Law to the Next Level

Happy Gilmore 2 | Official Teaser Trailer | Netflix

What is the End of Moore's Law?

Moore's Law and the Singularity

The Future of Moore's Law

Jim Keller: Moore’s Law is Not Dead

The History of Moore's Law (My Predictions) | Computer Science

SBNM 5450 Chapter 5: Moore's Law and More

Matthew McConaughey and Hugh Grant Swap Iconic Movie Lines
