DPO - Part 1 - Direct Preference Optimization Paper Explanation | DPO: An Alternative to RLHF?

In this video, I explain in detail the DPO paper, which proposes a method that can serve as an alternative to RLHF. DPO is a computationally efficient method: it computes the log probabilities of preferred and dispreferred completions under the model being trained and under a frozen reference model, then optimizes the model's parameters to increase the likelihood of preferred responses relative to dispreferred ones. This aligns the model with human preferences without training a separate reward model, unlike RLHF algorithms based on PPO.
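The objective described above can be sketched in plain Python for a single preference pair. This is a minimal, framework-free sketch, not the paper's or any library's code. It assumes per-completion log-probabilities are already available, or can be computed from per-step logits with the hypothetical helper `completion_logprob` (its input format is an assumption for illustration). `beta`, which controls how strongly the policy is tied to the reference model, is set to an illustrative 0.1.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    # log(sigmoid(z)) = -log(1 + exp(-z)); branch on sign to avoid overflow.
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def completion_logprob(step_logits, token_ids):
    """Hypothetical helper: sum of log p(token_t | prompt, earlier tokens).

    Assumed format: step_logits[t] is the list of vocabulary logits at step t,
    and token_ids[t] is the index of the completion token chosen at step t.
    """
    total = 0.0
    for logits, tok in zip(step_logits, token_ids):
        m = max(logits)
        # log-softmax normalizer computed with the max-shift trick
        log_z = m + math.log(sum(math.exp(l - m) for l in logits))
        total += logits[tok] - log_z
    return total


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one (preferred, dispreferred) pair.

    Each argument is the summed log-probability of a full completion.
    The reference-model values are treated as constants (frozen model).
    """
    # How much more the policy favors each completion than the reference does
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # Minimizing -log(sigmoid(margin)) pushes the preferred log-ratio
    # above the dispreferred one; no separate reward model is trained.
    return -log_sigmoid(margin)
```

When the policy and reference assign identical log-probabilities, the margin is zero and the loss equals log 2. Raising the preferred completion's log-ratio above the dispreferred one's pushes the loss below log 2. A real training loop would batch this in a tensor library and backpropagate only through the policy's log-probabilities.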

For any discussions, you can connect with me via the following social links:

Feel free to join the Telegram group for discussions using the following link:

The code will be available in the following repository:

Links of playlists of the channel:

-~-~~-~~~-~~-~-

Watch: "LoRA - Low-rank Adaption of Large Language Models Paper In-depth Explanation | NLP Research Papers"

-~-~~-~~~-~~-~-

DPO - Part1 - Direct Preference Optimization Paper Explanation | DPO an alternative to RLHF??

Direct Preference Optimization (DPO) - How to fine-tune LLMs directly without reinforcement learning

Direct Preference Optimization: Forget RLHF (PPO)

Direct Preference Optimization (DPO): Your Language Model is Secretly a Reward Model Explained

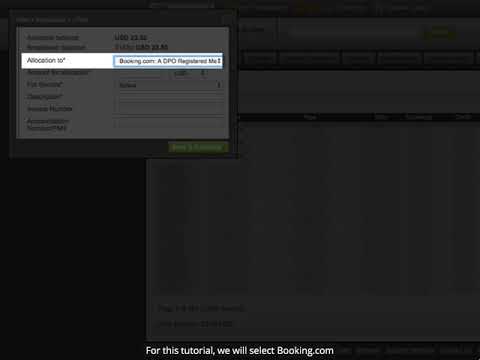

Introduction to DPO payment gateway - Part 1

Towards Reliable Use of Large Language Models: Better Detection, Consistency, and Instruction-Tuning

Video: Jubilating Policeman Falls Off Moving Van In Lagos

PR-453: Direct Preference Optimization

DPO - Part2 - Direct Preference Optimization Implementation using TRL | DPO an alternative to RLHF??

How To Pay Booking.com via Easy Payments - DPO Dashboard

DPO as a Service - Framework

PSL+: How To Import From DPO

How Does A Direct Listing Work?

How to Code RLHF on LLama2 w/ LoRA, 4-bit, TRL, DPO

Depot

Interview with Eran Feinstein, CEO & Co-Founder of DPO Group.

The Journey Of DPO: Africa's Favorite Payment Service Provider

Training Large Language Models: Practices and Research Questions

How To Setup DPO Group Payment Method

Sundirect new Dpo pack 1 | plan list | Full details 2019 | Tk Royal tech

What The Food?! Episode 3 Part 1: New Product Consultant in Food Industry

Pakistan education system what a beautiful environment WOW🤣🤣

How To Apply For Direct DSP |Direct DSP Appointment| DSP Sindh Police #DirectDsp #DspJobs #MrApps

Three ways to become an Assistant commissioner in Pakistan #assistancomissioner #AC #ACprotocol
