2. Get File Names from Source Folder Dynamically in Azure Data Factory

In this video, I discuss how to get file names dynamically from a source folder in Azure Data Factory.

Link to the Azure Functions playlist:

Link to the Azure Basics playlist:

Link to the Azure Data Factory playlist:

#Azure #ADF #AzureDataFactory

How To List All File Names from A Folder into Excel Using Formula

Copy list of filenames from folder into Excel (Windows)

Export list of files, folders including subfolders to a txt file from command line

copyaspath | Copy list of File name into excel | copy multi pdf name to excel

How To Copy File Names From Folder To Excel Spreadsheet

How To Get A List Of File Names From A Folder And All Subfolders

Get a List of File Names from Folders & Sub-folders in Excel (using Power Query)

Foundation Paper 2: BL | Topic: Ch4: The Indian Partnership Act, 1932...| Session 1 | 30 Nov, 2024

Export File Names to Excel, 2024. Export File Names from folder to a Text File.

Copy the Filenames into an Excel worksheet or a Text file

Get File Name Without Extension in UiPath

Get File Name in UiPath

List of filenames from folder into Excel

How To Extract Files From Multiple Folders

How to Import Multiple File Names into Cells in Excel

How to Get the List of File Names in a Folder in Excel (without VBA)

Folders & files in VS Code made super fast like this!

Get file names from a folder #shorts #excel

071 Batch extract all file names in the folder in excel #shorts #youtubeshorts

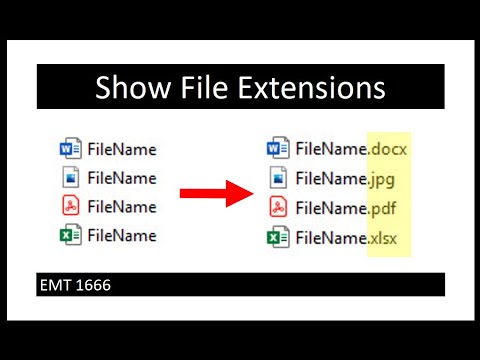

Show File Extensions (File Types). EMT 1666

Get All File Names in a Directory using VBA

Excel VBA Get File Names in Spreadsheet

Easiest way to COMBINE Multiple Excel Files into ONE (Append data from Folder)
