filmov

tv

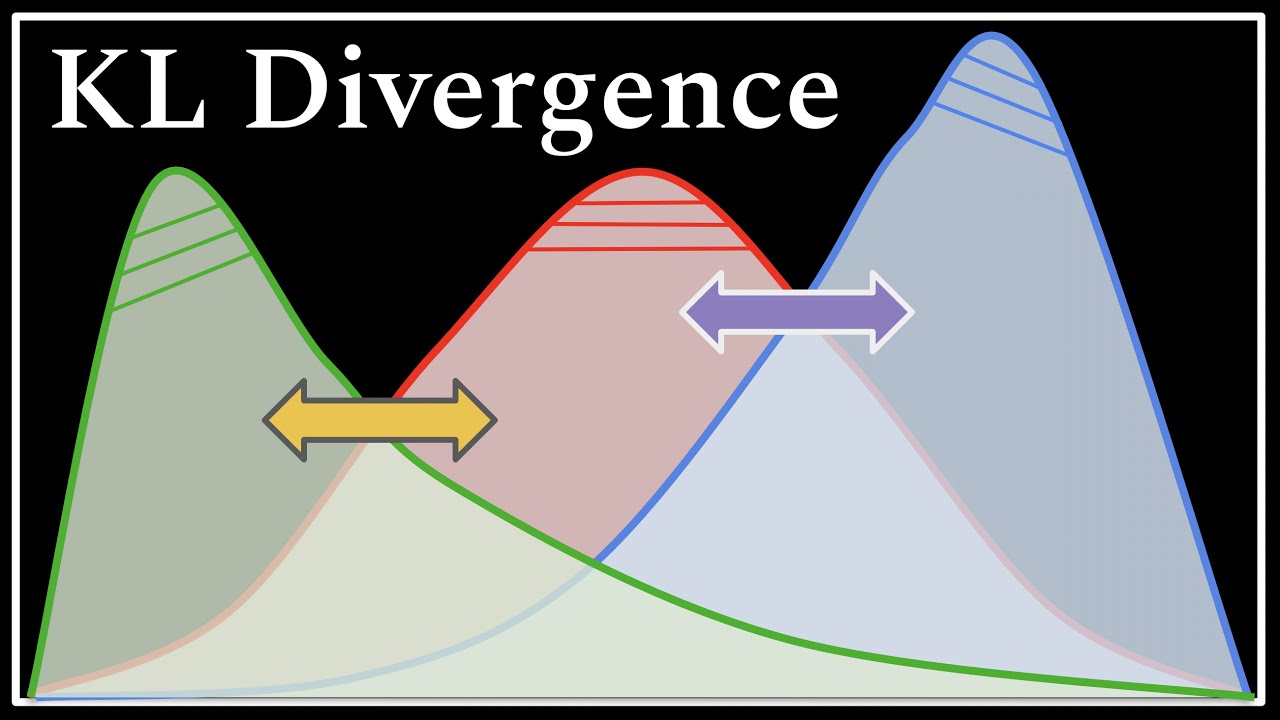

The KL Divergence : Data Science Basics

Understanding how to measure the difference between two probability distributions.

0:00 How to Learn Math

1:57 Motivation for P(x) / Q(x)

7:21 Motivation for Log

11:43 Motivation for Leading P(x)

15:59 Application to Data Science
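
The chapter titles above trace the pieces of the standard discrete KL divergence: a ratio P(x) / Q(x), a log applied to that ratio, and a leading P(x) weight, giving D_KL(P || Q) = sum over x of P(x) * log(P(x) / Q(x)). The following is a minimal sketch of that textbook formula, not code taken from the video. The function name `kl_divergence` and the example distributions are assumptions chosen for illustration, and the natural log is used, so the result is in nats.

```python
import math


def kl_divergence(p, q):
    """Discrete KL divergence D_KL(P || Q) in nats (hypothetical helper).

    p and q are sequences of probabilities over the same outcomes.
    """
    if len(p) != len(q):
        raise ValueError("p and q must cover the same outcomes")
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            # By convention, a term with P(x) = 0 contributes nothing.
            continue
        if qx == 0:
            # P puts mass where Q puts none: the divergence is infinite.
            return math.inf
        # Leading P(x) weight times the log of the ratio P(x) / Q(x).
        total += px * math.log(px / qx)
    return total


# Illustrative distributions (assumed, not from the video).
p = [0.5, 0.5]
q = [0.9, 0.1]

print(kl_divergence(p, q))  # divergence of Q from P
print(kl_divergence(q, p))  # a different value: KL is not symmetric
```

Swapping the arguments changes the result, which is why the KL divergence is called a divergence rather than a distance.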

Intuitively Understanding the KL Divergence

KL Divergence #machinelearning #datascience #statistics #maths #deeplearning #probabilities

Data Science Moments - Kullback-Leibler Divergence

Kullback-Leibler (KL) Divergence in Machine Learning | Data Science

A Short Introduction to Entropy, Cross-Entropy and KL-Divergence

What is KL Divergence ?

Kullback-Leibler (KL) Divergence Mathematics Explained

KL Divergence - CLEARLY EXPLAINED!

NoteBook LLM- Detecting drifts in data streams using Kullback-Leibler (KL) divergence

Kullback Leibler Divergence - Georgia Tech - Machine Learning

Data Science #19 - The Kullback–Leibler divergence paper (1951)

Kullback-Leibler (KL) Divergence | Data Science Interview Questions | Machine Learning

The Key Equation Behind Probability

Approximating the KL-Divergence | Two Ways in TensorFlow Probability

20 - Properties of KL divergence

Introduction to KL-Divergence | Simple Example | with usage in TensorFlow Probability

Kullback-Leibler (KL) Divergence | Data Science Interview Questions | Machine Learning

Kullback-Leibler (KL) Divergence

#113 - KL Divergence

MaDL - Kullback-Leibler Divergence

KULLBACK-LEIBLER (KL) DIVERGENCE -- Math explained!

VI - 2.1 - KL Divergence (with R code)

KL Divergence - Intuition and Math Clearly Explained
