The Key Equation Behind Probability

My name is Artem. I'm a graduate student at the NYU Center for Neural Science and a researcher at the Flatiron Institute (Center for Computational Neuroscience).

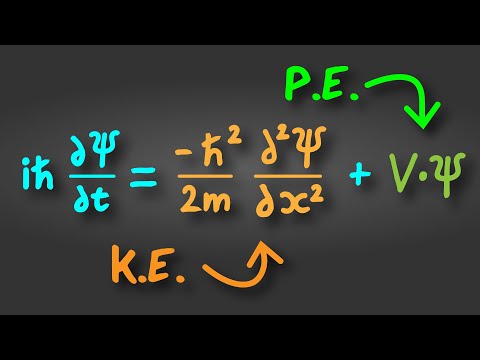

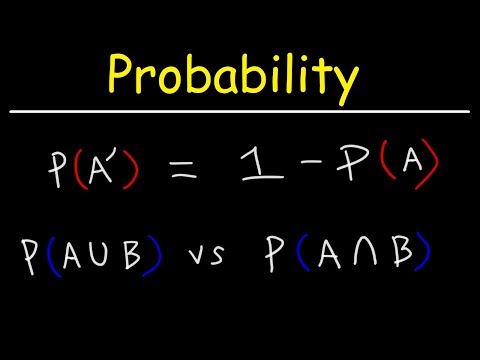

In this video, we explore the fundamental concepts that underlie probability theory and its applications in neuroscience and machine learning. We begin with the intuitive idea of surprise and its relation to probability, using real-world examples to illustrate these concepts.

From there, we move into more advanced topics:

1) Entropy – measuring the average surprise in a probability distribution.

2) Cross-entropy and the loss of information when approximating one distribution with another.

3) Kullback-Leibler (KL) divergence and its role in quantifying the difference between two probability distributions.
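For readers who prefer code to formulas, the three quantities listed above can be sketched in a few lines of Python. This is a minimal illustration, not taken from the video: the function names and the example distributions `p` (the "true" distribution) and `q` (the approximating model) are hypothetical, surprise is measured in bits (log base 2), and `q` is assumed to be nonzero wherever `p` is nonzero.

```python
import math


def surprisal(prob):
    """Surprise, in bits, of observing an event that has probability `prob`."""
    return -math.log2(prob)


def entropy(p):
    """Average surprise of distribution p when outcomes are drawn from p itself."""
    return sum(pi * surprisal(pi) for pi in p if pi > 0)


def cross_entropy(p, q):
    """Average surprise when outcomes follow p but we score them with model q.

    Assumes q[i] > 0 wherever p[i] > 0; otherwise math.log2 raises ValueError.
    """
    return sum(pi * surprisal(qi) for pi, qi in zip(p, q) if pi > 0)


def kl_divergence(p, q):
    """Extra surprise paid for using q instead of p: cross-entropy minus entropy."""
    return cross_entropy(p, q) - entropy(p)


# Hypothetical example: a skewed true distribution and a uniform model of it.
p = [0.5, 0.25, 0.25]
q = [1 / 3, 1 / 3, 1 / 3]

print(f"H(p)       = {entropy(p):.3f} bits")
print(f"H(p, q)    = {cross_entropy(p, q):.3f} bits")
print(f"KL(p || q) = {kl_divergence(p, q):.3f} bits")
```

Because the entropy of `p` does not depend on `q`, lowering the cross-entropy with respect to `q` lowers the KL divergence by the same amount, and the KL divergence is zero when `q` equals `p`.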

OUTLINE:

00:00 Introduction

02:00 Sponsor: NordVPN

04:07 What is probability (Bayesian vs Frequentist)

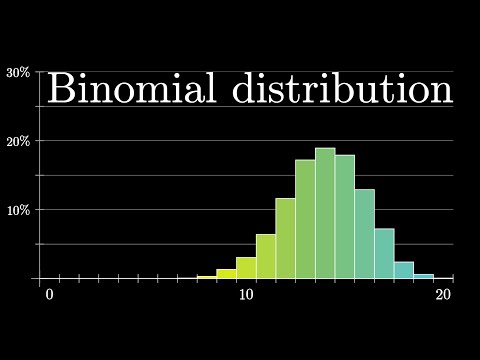

06:42 Probability Distributions

10:17 Entropy as average surprisal

13:53 Cross-Entropy and Internal models

19:20 Kullback–Leibler (KL) divergence

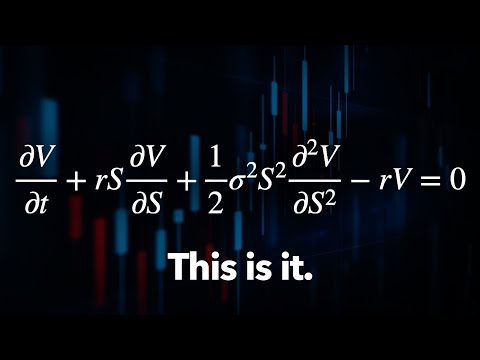

20:46 Objective functions and Cross-Entropy minimization

24:22 Conclusion & Outro

CREDITS:

Special thanks to Crimson Ghoul for providing English subtitles!
