lasso shrinkage regularization: an informative tutorial

overview

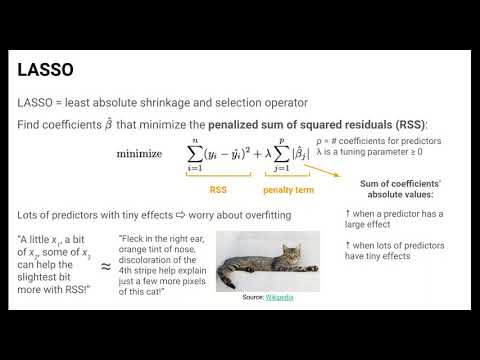

Lasso (least absolute shrinkage and selection operator) is a regression method that performs both variable selection and regularization, with the aim of improving the prediction accuracy and interpretability of the resulting model. It adds a penalty proportional to the sum of the absolute values of the coefficients, which shrinks all coefficients toward zero and can set some of them exactly to zero, effectively removing those variables from the model.
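
To see why an absolute-value penalty can produce exact zeros, it helps to look at the one-coefficient case: minimizing `0.5 * (b - z)**2 + lam * abs(b)` over `b` has a closed-form solution known as soft-thresholding. The sketch below is only an illustration; the helper name `soft_threshold` and the sample values in `z` are assumptions made for this example, not part of the original tutorial.

```python
import numpy as np

def soft_threshold(z, lam):
    """Minimizer of 0.5 * (b - z)**2 + lam * |b| over b (illustrative helper)."""
    # Values with |z| <= lam are set to zero; larger ones move toward zero by lam.
    return np.sign(z) * np.maximum(np.abs(z) - lam, 0.0)

# Hypothetical unpenalized estimates for four coefficients.
z = np.array([3.0, 0.4, -0.2, -2.5])
shrunk = soft_threshold(z, lam=0.5)
print(shrunk)
```

The two small entries fall below the threshold and become zero, while the two large entries are kept but pulled toward zero by `lam`. This is the shrinkage-plus-selection behavior described above.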

key concepts

1. **regularization**: a technique that reduces overfitting by adding a penalty on model complexity to the loss function.

2. **L1 regularization**: lasso uses L1 regularization, which adds the sum of the absolute values of the coefficients (the L1 norm) as the penalty term.

3. **loss function**: lasso regression minimizes the following objective:

\[
L(\beta) = \sum_{i=1}^{n} (y_i - x_i^\top \beta)^2 + \lambda \sum_{j=1}^{p} |\beta_j|
\]

where:

- \(y_i\) is the target value for observation \(i\)

- \(x_i\) is the feature vector for observation \(i\) (row \(i\) of the feature matrix)

- \(\beta\) is the vector of \(p\) coefficients

- \(\lambda \ge 0\) is the regularization parameter; larger values shrink the coefficients more strongly
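
The loss function above can be evaluated directly with NumPy. The following is a minimal sketch: the function name `lasso_objective` and the small arrays are made up for illustration, and no intercept term is included, matching the formula as written.

```python
import numpy as np

def lasso_objective(X, y, beta, lam):
    """Residual sum of squares plus lam times the L1 norm of beta."""
    residuals = y - X @ beta
    return np.sum(residuals ** 2) + lam * np.sum(np.abs(beta))

# Tiny hypothetical dataset: 3 observations, 2 features.
X = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
y = np.array([1.0, 2.0, 3.0])
beta = np.array([0.5, 0.0])

value = lasso_objective(X, y, beta, lam=1.0)
print(value)  # 0.75 (squared error) + 0.5 (penalty) = 1.25
```

Note that scikit-learn's `Lasso` minimizes a rescaled version of this objective, `(1 / (2 * n_samples)) * ||y - Xw||^2 + alpha * ||w||_1`, so its `alpha` plays the role of \(\lambda / (2n)\) in the formula above.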

when to use lasso

- when you have a large number of features and expect that many of them are irrelevant.

- when you want a simpler and more interpretable model.

- when you need to prevent overfitting in high-dimensional datasets.

implementation

In this tutorial, we use Python's `scikit-learn` library to fit a lasso regression model on a synthetic dataset.

step 1: import libraries
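
The original code for this step is not included in the text, so the imports below are one plausible set, assuming NumPy, matplotlib, and scikit-learn are installed. Which helpers the original tutorial actually used is not known.

```python
import numpy as np
import matplotlib.pyplot as plt

from sklearn.datasets import make_regression          # synthetic regression data
from sklearn.linear_model import Lasso                # L1-regularized linear model
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split
```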

step 2: create a synthetic dataset
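
One way to build such a dataset is scikit-learn's `make_regression`, which lets us control how many features actually influence the target. The sizes, noise level, and random seed below are arbitrary choices for this sketch, not values taken from the original.

```python
from sklearn.datasets import make_regression
from sklearn.model_selection import train_test_split

# 200 samples, 50 features, only 5 of which carry signal (assumed values).
X, y, true_coef = make_regression(
    n_samples=200,
    n_features=50,
    n_informative=5,
    noise=10.0,
    coef=True,        # also return the ground-truth coefficients
    random_state=0,
)

# Hold out 25% of the samples for evaluation.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)
print(X_train.shape, X_test.shape)
```

Because most of the 50 features are irrelevant by construction, this setup matches the situation in which lasso is expected to help.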

step 3: fit lasso regression model
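
A minimal sketch of the fitting step follows. The data is regenerated here so the block runs on its own, and `alpha=1.0` is simply scikit-learn's default, not a tuned value; in practice it would usually be chosen by cross-validation.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.model_selection import train_test_split

# Synthetic data with 5 informative features out of 50 (assumed values).
X, y = make_regression(
    n_samples=200, n_features=50, n_informative=5, noise=10.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# alpha is scikit-learn's name for the regularization strength.
model = Lasso(alpha=1.0)
model.fit(X_train, y_train)

# Count the coefficients that lasso did not shrink to exactly zero.
n_selected = int(np.sum(model.coef_ != 0))
print(f"non-zero coefficients: {n_selected} of {model.coef_.size}")
```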

step 4: evaluate the model
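
Evaluation could look like the sketch below, which reports mean squared error and R² on a held-out test set. The choice of metrics is an assumption, since the original does not say which ones it used, and the data and model are rebuilt here so the block is self-contained.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso
from sklearn.metrics import mean_squared_error, r2_score
from sklearn.model_selection import train_test_split

# Same assumed synthetic setup as in the earlier steps.
X, y = make_regression(
    n_samples=200, n_features=50, n_informative=5, noise=10.0, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)
model = Lasso(alpha=1.0).fit(X_train, y_train)

# Score on data the model has not seen during fitting.
y_pred = model.predict(X_test)
mse = mean_squared_error(y_test, y_pred)
r2 = r2_score(y_test, y_pred)
print(f"test MSE: {mse:.2f}")
print(f"test R^2: {r2:.3f}")
```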

step 5: visualize the results
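
One possible visualization compares the fitted coefficients with the ground-truth coefficients of the synthetic data. This is a sketch under assumptions: the plot type and the output filename `lasso_coefficients.png` are illustrative choices, and the original tutorial may have plotted something else.

```python
import numpy as np
import matplotlib.pyplot as plt
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso

# Synthetic data with known coefficients (assumed values).
X, y, true_coef = make_regression(
    n_samples=200, n_features=50, n_informative=5,
    noise=10.0, coef=True, random_state=0,
)
model = Lasso(alpha=1.0).fit(X, y)

# Plot true and estimated coefficients side by side, one point per feature.
idx = np.arange(true_coef.size)
fig, ax = plt.subplots(figsize=(8, 4))
ax.plot(idx, true_coef, "o", label="true coefficients")
ax.plot(idx, model.coef_, "x", label="lasso estimates")
ax.set_xlabel("feature index")
ax.set_ylabel("coefficient value")
ax.legend()
fig.tight_layout()
fig.savefig("lasso_coefficients.png")  # hypothetical filename; use plt.show() interactively
```

In a plot like this, features whose lasso estimate sits at zero have been dropped from the model, which makes the variable-selection effect easy to see.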

conclusion

Lasso regression is a useful technique for regression analysis, especially with high-dimensional data. By applying L1 regularization, it can reduce overfitting and, because some coefficients are set exactly to zero, it also performs feature selection.
