filmov

tv

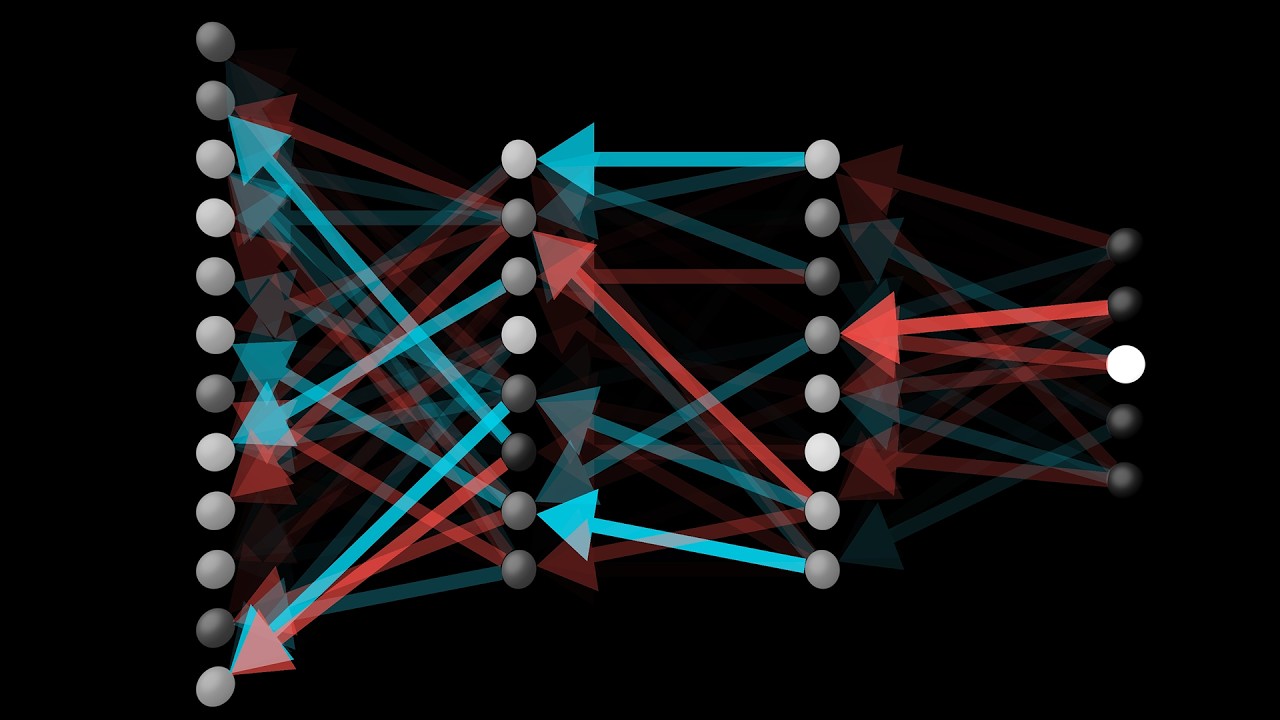

What is backpropagation really doing? | Chapter 3, Deep learning

What's actually happening to a neural network as it learns?

A valuable form of support is simply to share some of the videos.

The following video is a sort of appendix to this one. The main goal of the follow-on video is to show the connection between the visual walkthrough here and the representation of these "nudges" in terms of partial derivatives that you will find when reading about backpropagation in other resources, like Michael Nielsen's book or Chris Olah's blog.
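To connect the "nudges" idea with partial derivatives, here is a minimal Python sketch. It assumes a toy network where every layer has a single sigmoid neuron and the cost for one training example is the squared error (a - y)^2. These choices, the parameter values, and all function names are illustrative assumptions, not code from the video or the cited resources. `backprop` computes each dC/dw and dC/db by applying the chain rule from the output layer backward, and `numeric_grads` checks each value by literally nudging one parameter up and down and measuring how the cost changes.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, a0):
    """Run a chain of single-neuron layers and return every activation, input first."""
    activations = [a0]
    for w, b in params:
        activations.append(sigmoid(w * activations[-1] + b))
    return activations


def cost(params, a0, y):
    """Squared-error cost for one training example."""
    return (forward(params, a0)[-1] - y) ** 2


def backprop(params, a0, y):
    """Return [(dC/dw, dC/db), ...] per layer, computed from the output layer backward."""
    activations = forward(params, a0)
    grads = [None] * len(params)
    dC_da = 2.0 * (activations[-1] - y)  # sensitivity of the cost to the output activation
    for layer in reversed(range(len(params))):
        w, _ = params[layer]
        a = activations[layer + 1]
        a_prev = activations[layer]
        dC_dz = dC_da * a * (1.0 - a)  # chain rule through sigmoid: sigmoid'(z) = a(1 - a)
        grads[layer] = (dC_dz * a_prev, dC_dz)  # dz/dw = a_prev, dz/db = 1
        dC_da = dC_dz * w  # dz/da_prev = w: pass the sensitivity back one layer
    return grads


def numeric_grads(params, a0, y, eps=1e-6):
    """Estimate each partial derivative by nudging one parameter up and down."""
    grads = []
    for i, (w, b) in enumerate(params):
        def cost_with(new_w, new_b):
            nudged = list(params)
            nudged[i] = (new_w, new_b)
            return cost(nudged, a0, y)
        dw = (cost_with(w + eps, b) - cost_with(w - eps, b)) / (2 * eps)
        db = (cost_with(w, b + eps) - cost_with(w, b - eps)) / (2 * eps)
        grads.append((dw, db))
    return grads


params = [(0.5, -0.2), (1.5, 0.3)]  # hypothetical (weight, bias) for each layer
a0, y = 0.8, 1.0                     # hypothetical input activation and target
for (bw, bb), (nw, nb) in zip(backprop(params, a0, y), numeric_grads(params, a0, y)):
    print(f"dC/dw {bw:.6f} vs {nw:.6f} | dC/db {bb:.6f} vs {nb:.6f}")
```

The two columns should agree to several decimal places. The backward pass gets every partial derivative from one forward and one backward sweep, whereas the nudging approach needs two extra cost evaluations per parameter, which is why backpropagation is what gets used in practice.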

Video timeline:

0:00 - Introduction

0:23 - Recap

3:07 - Intuitive walkthrough example

9:33 - Stochastic gradient descent

12:28 - Final words
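Stochastic gradient descent, as the term is commonly used, means shuffling the training data, splitting it into mini-batches, and taking one gradient step per mini-batch instead of computing the exact gradient over the whole dataset before each step. The sketch below illustrates that loop under stated assumptions: the model is a hypothetical one-dimensional linear fit y ≈ w*x + b with squared-error cost, the toy data are noise-free, and the function name and hyperparameters are illustrative choices rather than anything taken from the video.

```python
import random


def sgd(data, lr=0.05, batch_size=4, epochs=200, seed=0):
    """Fit y ≈ w*x + b with mini-batch stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)
    for _ in range(epochs):
        rng.shuffle(data)  # a fresh random order every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Average gradient of (w*x + b - y)^2 over this mini-batch only
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


# Hypothetical toy data generated from y = 3x - 1 (no noise), x in [-1, 1]
points = [(x / 10, 3 * (x / 10) - 1) for x in range(-10, 11)]
w, b = sgd(points)
print(f"w ≈ {w:.3f}, b ≈ {b:.3f}")
```

Each step only looks at a few examples, so individual steps point in a noisy direction, but they are far cheaper than full-dataset steps, and on average they still move the parameters downhill on the cost.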

Thanks to these viewers for their contributions to translations:

Italian: @teobucci

Vietnamese: CyanGuy111
