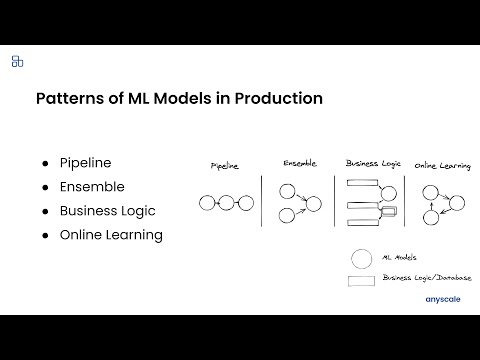

Ray Serve: Patterns of ML Models in Production

(Simon Mo, Anyscale)

You trained an ML model, now what? The model needs to be deployed for online serving and offline processing. This talk walks through the journey of deploying your ML models in production. I will cover common deployment patterns, backed by concrete use cases drawn from 100+ user interviews for Ray and Ray Serve. Lastly, I will cover how we used these learnings to build Ray Serve, a scalable model serving framework.

Introducing Ray Serve: Scalable and Programmable ML Serving Framework - Simon Mo, Anyscale

Deploying Many Models Efficiently with Ray Serve

TALK / Simon Mo / Patterns of ML Models in Production

Ray Serve: Tutorial for Building Real Time Inference Pipelines

Building Production AI Applications with Ray Serve

Ray: A Framework for Scaling and Distributing Python & ML Applications

Seamlessly Scaling your ML Pipelines with Ray Serve - Archit Kulkarni

Enabling Cost-Efficient LLM Serving with Ray Serve

Productionizing ML at scale with Ray Serve

Introduction to Model Deployment with Ray Serve

State of Ray Serve in 2.0

Advanced Model Serving Techniques with Ray on Kubernetes - Andrew Sy Kim & Kai-Hsun Chen

Multi-model composition with Ray Serve deployment graphs

Faster Model Serving with Ray and Anyscale | Ray Summit 2024

Leveraging the Possibilities of Ray Serve

Scaling AI & Machine Learning Workloads With Ray on AWS, Kubernetes, & BERT

Scalable machine learning workloads with Ray AI Runtime

KubeRay: A Ray cluster management solution on Kubernetes

Ray (Episode 4): Deploying 7B GPT using Ray

Keynote: The Future of Ray - Robert Nishihara, Anyscale

Scaling AI Workloads with the Ray Ecosystem

Ray Serve for IOT at Samsara

Highly available architectures for online serving in Ray
