Give Internet Access to your Local LLM (Python, Ollama, Local LLM)

Give your local LLM internet access using Python, so that it can search the web for information relevant to the user's questions.
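The general idea can be sketched as follows: run a web search for the user's question, paste the top results into the prompt, and send that prompt to the local model. This is only a minimal sketch under stated assumptions, not the code from the video. It assumes Ollama is serving its HTTP API at the default `http://localhost:11434`, and that a model named `llama3` has been pulled (the model name is a placeholder). The search library used in the video is not named on this page, so `search` is a hypothetical callable you supply yourself; the helper names `build_prompt`, `ollama_generate`, and `answer_with_search` are likewise illustrative.

```python
import json
import urllib.request
from typing import Callable

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_prompt(question: str, snippets: list[str]) -> str:
    """Combine numbered search snippets and the user's question into one prompt."""
    context = "\n".join(f"[{i}] {s}" for i, s in enumerate(snippets, start=1))
    return (
        "Answer the question using the web results below.\n\n"
        f"Web results:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


def ollama_generate(prompt: str, model: str = "llama3") -> str:
    """Send a prompt to the local Ollama server and return the generated text.

    The model name is an assumption; use whichever model you have pulled.
    """
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read().decode("utf-8"))["response"]


def answer_with_search(
    question: str,
    search: Callable[[str], list[str]],
    generate: Callable[[str], str] = ollama_generate,
    max_results: int = 3,
) -> str:
    """Search the web, then ask the model to answer using the top results.

    `search` is a hypothetical user-supplied function that takes a query
    and returns a list of text snippets.
    """
    snippets = search(question)[:max_results]
    return generate(build_prompt(question, snippets))
```

To try it, pass any function that returns text snippets for a query, for example `answer_with_search("Who won the latest F1 race?", my_search)`, where `my_search` wraps whichever search library or API you prefer. Keeping the search and generation steps as plain callables makes it easy to swap either one without touching the rest.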

Library Used:

How To Fix WiFi Connected But No Internet Access On Windows 10 - 5 Ways

WiFi Connected but no Internet Access Fix | Android | 2024

Fix Ethernet Connected But No Internet Access | LAN Wired

WiFi Connected But No Internet Access on Windows 11/10? Here's How to Fix It!

WiFi Connected But No Internet Access on Windows 11 Fix

How to block internet access for specific apps in windows 11

Wifi Connected But No Internet Access Android | Wifi Connected But Not Working | Wifi not Access fix

NAT Failover and BGP Peering Between Edge Router and ISP Routers | Company's Network Configurat...

No internet your device can only access other devices on your local network windows 11

How To Give ChatGPT Internet Access And Improve Responses (2023)

How To Give Internet Access To Lambda In VPC (3 Min) | AWS

Fix Ethernet Connected But No Internet Access | LAN Wired

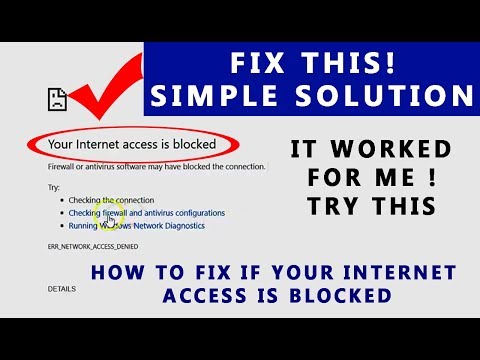

Fix - Your Internet access is blocked error | How to fix | Solution

iOS 15: Wifi Not Working on iPhone! [No Internet Connection Fixed]

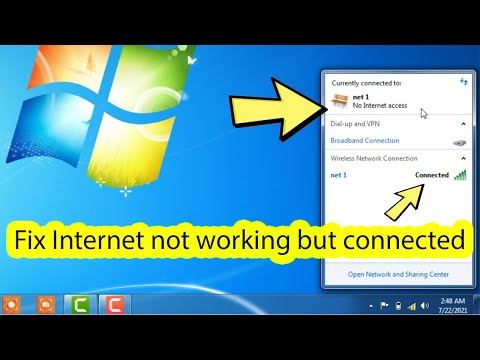

Fix windows 7 no internet access but connected ethernet

mobile data on but no internet fix | connected but no internet access fix

Part #2: Give Your EVE-NG Lab Internet Access

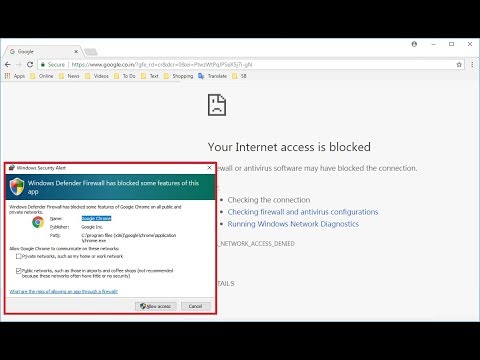

How to Fix Your Internet Access is Blocked, Windows Firewall has Blocked (Easy)

iPad Wifi Connected But No Internet Access || Solved.

How to fix windows 10 Hotspot not obtaining ip address | Hotspot not sharing internet

Mobile data on but no internet fix | connected but no internet access fix

internet connected but browser not working windows 10 || LAN showing internet access but not working

Cisco Packet Tracer Tutorial # 3 | Internet access with the network we built
