
Classify Images with a Vision Transformer (ViT): PyTorch Deep Learning Tutorial


TIMESTAMPS

00:00 Introduction

00:28 Overview of Vision Transformers

00:43 Reference to "An Image is Worth 16x16 Words" Paper

01:50 Comparison with CNNs

03:00 Explanation of Transformer Blocks

04:41 Network Implementation

05:18 Forward Pass

07:43 Model Instantiation

08:19 Training Process

08:52 Training Results

09:12 Significance of Vision Transformers

09:31 Visualization of Positional Embeddings

10:30 Future Directions and Conclusion

In this PyTorch tutorial video I introduce the Vision Transformer (ViT) model! By simply splitting an image into patches, we can use an encoder-only Transformer to perform image classification!
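The patch-splitting idea can be sketched in PyTorch roughly as follows. This is a minimal illustration under stated assumptions, not the code from the video (that code is the Section 14 material referenced below and may differ). The class name `ViT`, every default hyperparameter (32x32 RGB inputs, 4x4 patches, 128-dim embeddings, 4 encoder layers, 10 classes), the learnable [CLS] token, and the use of PyTorch's built-in `nn.TransformerEncoder` in place of hand-written Transformer blocks are all choices made here for illustration. A `Conv2d` whose kernel size and stride both equal the patch size cuts the image into non-overlapping patches and linearly projects each one, so a 224x224 image with 16x16 patches would become (224/16)^2 = 196 tokens.

```python
import torch
import torch.nn as nn


class ViT(nn.Module):
    """Minimal encoder-only Vision Transformer classifier (illustrative sketch).

    Hypothetical defaults chosen for illustration; not taken from the video.
    """

    def __init__(self, image_size=32, patch_size=4, in_channels=3,
                 num_classes=10, dim=128, depth=4, heads=4):
        super().__init__()
        assert image_size % patch_size == 0, "image must divide evenly into patches"
        num_patches = (image_size // patch_size) ** 2

        # Kernel size == stride == patch size: each output position sees exactly
        # one non-overlapping patch and projects it linearly to `dim` features.
        self.patch_embed = nn.Conv2d(in_channels, dim,
                                     kernel_size=patch_size, stride=patch_size)

        # Learnable [CLS] token plus one learnable positional embedding per token.
        self.cls_token = nn.Parameter(torch.zeros(1, 1, dim))
        self.pos_embed = nn.Parameter(torch.randn(1, num_patches + 1, dim) * 0.02)

        # Encoder-only Transformer: a stack of self-attention + MLP blocks.
        layer = nn.TransformerEncoderLayer(d_model=dim, nhead=heads,
                                           dim_feedforward=4 * dim,
                                           batch_first=True, norm_first=True)
        self.encoder = nn.TransformerEncoder(layer, num_layers=depth,
                                             enable_nested_tensor=False)
        self.norm = nn.LayerNorm(dim)
        self.head = nn.Linear(dim, num_classes)

    def forward(self, x):
        # (B, C, H, W) -> (B, dim, H/P, W/P) -> (B, num_patches, dim)
        x = self.patch_embed(x).flatten(2).transpose(1, 2)
        # Prepend the [CLS] token to every sequence in the batch.
        cls = self.cls_token.expand(x.shape[0], -1, -1)
        x = torch.cat([cls, x], dim=1) + self.pos_embed
        x = self.encoder(x)
        # Classify from the encoder output at the [CLS] position.
        return self.head(self.norm(x[:, 0]))


if __name__ == "__main__":
    model = ViT()
    images = torch.randn(8, 3, 32, 32)  # a batch of 8 random 32x32 RGB images
    logits = model(images)
    print(logits.shape)  # torch.Size([8, 10])
```

Reading the prediction from a prepended [CLS] token follows the original ViT paper; mean-pooling the patch outputs instead is a common alternative.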

An Image is Worth 16x16 Words:


The corresponding code is available here (Section 14).



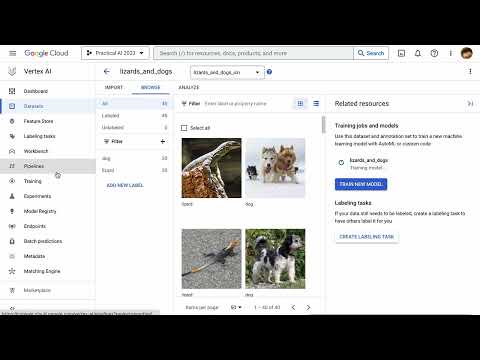

