Spark memory allocation and reading large files | Spark Interview Questions


Hi Friends,

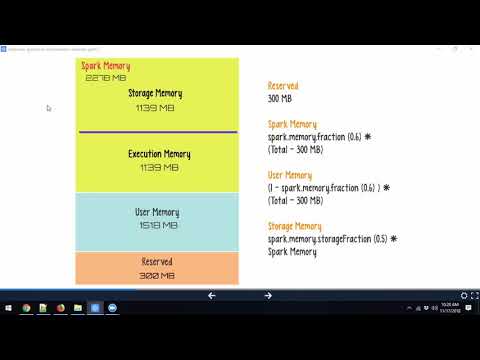

In this video, I explain Spark memory allocation and how Spark processes a 1 TB file.

Please subscribe to my channel for more videos like this.


