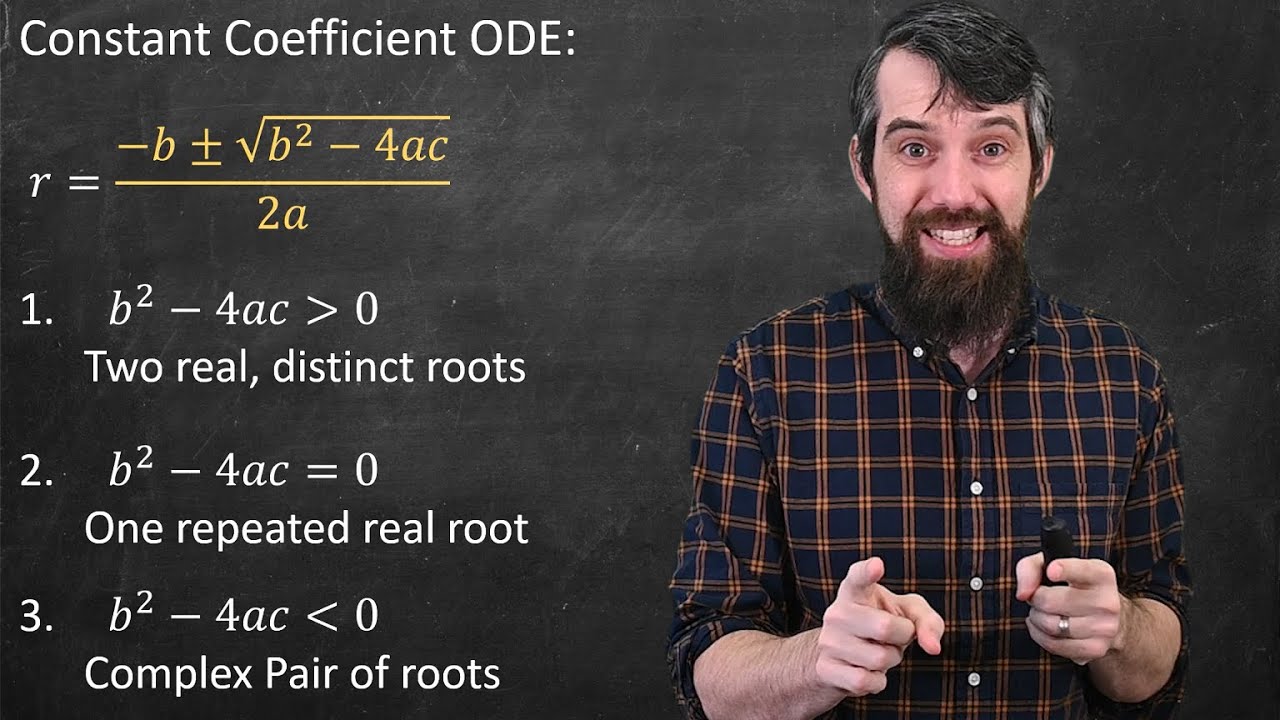

Constant Coefficient ODEs: Real & Distinct vs Real & Repeated vs Complex Pair

In my previous video in the playlist we saw a specific example of a constant coefficient homogeneous ODE. In this video we're going to solve it in generality and really elaborate on the three main cases. The characteristic equation (also called the auxiliary equation) can have real and distinct roots, a real and repeated root, or a pair of complex conjugate roots. Each of these three cases has a specific form of the solution to the differential equation.
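As a quick reference, the three solution forms can be written out as follows. This is a sketch that assumes the second-order equation $a y'' + b y' + c y = 0$ with real coefficients and $a \neq 0$; the symbols $a, b, c, r_1, r_2, \alpha, \beta, C_1, C_2$ are notation chosen here, not taken from the video.

```latex
% Substituting y = e^{rt} into a y'' + b y' + c y = 0 gives the characteristic equation
a r^2 + b r + c = 0, \qquad r = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}.

% Case 1: real and distinct roots r_1 \neq r_2 \quad (b^2 - 4ac > 0)
y(t) = C_1 e^{r_1 t} + C_2 e^{r_2 t}

% Case 2: real and repeated root r \quad (b^2 - 4ac = 0)
y(t) = (C_1 + C_2 t)\, e^{r t}

% Case 3: complex conjugate pair r = \alpha \pm i\beta \quad (b^2 - 4ac < 0)
y(t) = e^{\alpha t} \left( C_1 \cos \beta t + C_2 \sin \beta t \right)
```

In each case $C_1$ and $C_2$ are arbitrary constants that initial conditions would fix.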

0:00 Characteristic Equation

1:50 Three Cases

4:00 Real Distinct Roots

4:24 Real Repeated Roots

6:19 Complex Roots
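The case split covered in the chapters above can also be checked numerically. The following is a minimal sketch, assuming a second-order equation `a*y'' + b*y' + c*y = 0` with real coefficients; the function name `classify_roots` and the tolerance `tol` are hypothetical choices made for illustration, not something from the video.

```python
import math


def classify_roots(a, b, c, tol=1e-12):
    """Classify the roots of a*r**2 + b*r + c = 0 (assumed form, a != 0).

    Returns a (case_name, (root1, root2)) tuple.
    """
    if a == 0:
        raise ValueError("a must be nonzero for a second-order equation")

    disc = b * b - 4 * a * c  # discriminant decides which case applies

    if abs(disc) <= tol:
        # Repeated real root: y = (C1 + C2*t) * exp(r*t)
        r = -b / (2 * a)
        return "real repeated", (r, r)

    if disc > 0:
        # Distinct real roots: y = C1*exp(r1*t) + C2*exp(r2*t)
        s = math.sqrt(disc)
        return "real distinct", ((-b - s) / (2 * a), (-b + s) / (2 * a))

    # Complex pair alpha +/- i*beta:
    # y = exp(alpha*t) * (C1*cos(beta*t) + C2*sin(beta*t))
    alpha = -b / (2 * a)
    beta = math.sqrt(-disc) / (2 * abs(a))
    return "complex pair", (complex(alpha, -beta), complex(alpha, beta))


if __name__ == "__main__":
    print(classify_roots(1, -3, 2))  # y'' - 3y' + 2y = 0
    print(classify_roots(1, 2, 1))   # y'' + 2y' + y = 0
    print(classify_roots(1, 0, 4))   # y'' + 4y = 0
```

The tolerance guards against floating-point noise when the discriminant is meant to be exactly zero; for coefficients of very different magnitudes a scaled tolerance would likely be a better choice.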

How to Solve Constant Coefficient Homogeneous Differential Equations

Constant Coefficient ODEs: Example 1 with Real and Complex Roots

Constant Coefficient ODEs with Distinct Real Roots

Constant Coefficient ODEs: Example 1 with Distinct Real Roots

Constant Coefficient ODEs: Example 1 with Repeated Real Roots

Constant Coefficient ODEs Example 2 with Distinct Real Roots

Second order homogeneous linear differential equations with constant coefficients

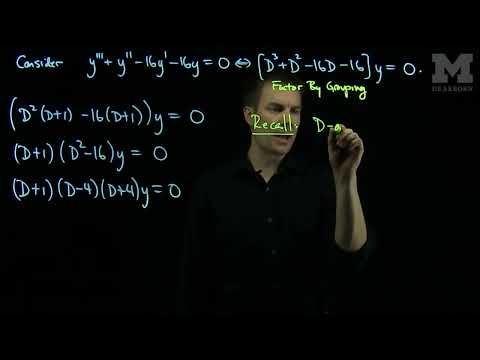

Higher Order Constant Coefficient Differential Equations: y'''+y'=0 and y'&...

Homogeneous, second-order, constant coefficient ode with two real roots

Linear ODE with Constant Coefficients: Complex Roots with Nonzero Real Parts

Second Order Linear Differential Equations

Second-order, linear, homogeneous odes with constant coefficients (Part II: real, distinct roots)

Constant Coefficient ODEs: Example 1 with Distinct Complex Roots

Differential Equations | Homogeneous 2nd Order ODEs with constant coefficients| Distinct real roots

Undetermined Coefficients: Solving non-homogeneous ODEs

Constant Coefficient ODEs: Example 1 with Repeated Complex Roots

Differential Equations - 24 - 2nd Order - Complex Roots (r=a+bi)

2nd Order ODEs - Constant Coefficient | Definition & Worked examples: Real, Repeated & Compl...

Constant Coefficient ODEs with the Rational Root Theorem: Example 1

Constant Coefficient ODEs: Example 2 with Repeated Real Roots

Second Order Linear Constant Coefficient ODEs Part 1

Linear ODEs with Constant Coefficients: Repeated Roots

Homogeneous, second-order, constant-coefficient ode with complex roots
