Scaling Laws for Natural Language Models

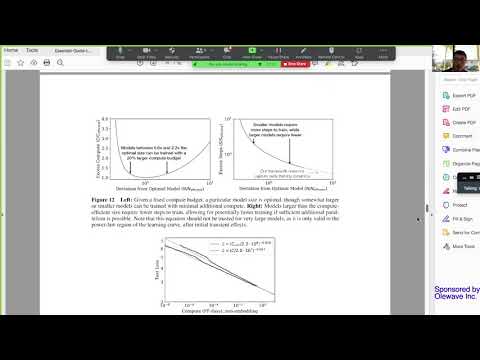

When you build a language model, there are a number of competing factors you can set. This week Roger walked through a paper that presents observations on how different factors affect model performance. The paper compares data scale versus shape, batch size, and other factors, and closes with a summary of the scaling laws for parameters.

*Links*

*Content*

00:00 Introduction

07:30 Model parameters

36:12 Scale vs. Shape

20:51 Batch size

55:56 Scaling law summary

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

😊 About Us

West Coast Machine Learning is a channel dedicated to exploring the exciting world of machine learning! Our group of techies is passionate about deep learning, neural networks, computer vision, tiny ML, and other cool geeky machine learning topics. We love to dive deep into the technical details and stay up to date with the latest research developments.

Our Meetup group and YouTube channel are the perfect places to connect with like-minded people who share your love of machine learning. We offer a mix of research paper discussions, coding reviews, and other data science topics. So, if you're looking to keep up with the latest developments in machine learning, connect with other techies, and learn something new, be sure to subscribe to our channel and join our Meetup community today!

▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬▬

#AI #ML #LLMs #MachineLearning #InferenceOptimization #ModelPerformance #Transformers #GenerativeAI
