filmov

tv

Beyond neural scaling laws – Paper Explained

Показать описание

„Beyond neural scaling laws: beating power law scaling via data pruning” paper explained with animations. You do not need to train your neural network on the entire dataset!

ERRATUM: See pinned comment for what easy/hard examples are chosen.

Outline:

00:00 Stable Diffusion is a Latent Diffusion Model

01:43 NVIDIA (sponsor): Register for the GTC!

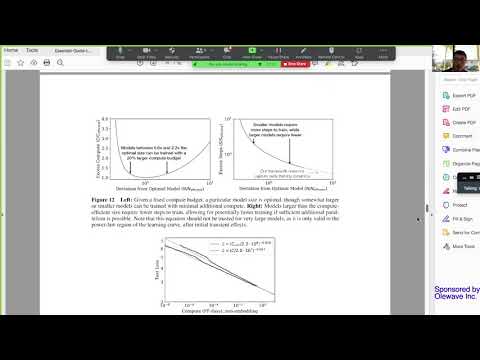

03:00 What are neural scaling laws? Power laws explained.

05:15 Exponential scaling in theory

07:40 What the theory predicts

09:50 Unsupervised data pruning with foundation models

Thanks to our Patrons who support us in Tier 2, 3, 4: 🙏

Don Rosenthal, Dres. Trost GbR, Julián Salazar, Edvard Grødem, Vignesh Valliappan, Mutual Information, Mike Ton

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔥 Optionally, pay us a coffee to help with our Coffee Bean production! ☕

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔗 Links:

#AICoffeeBreak #MsCoffeeBean #MachineLearning #AI #research

Video editing: Nils Trost

ERRATUM: See pinned comment for what easy/hard examples are chosen.

Outline:

00:00 Stable Diffusion is a Latent Diffusion Model

01:43 NVIDIA (sponsor): Register for the GTC!

03:00 What are neural scaling laws? Power laws explained.

05:15 Exponential scaling in theory

07:40 What the theory predicts

09:50 Unsupervised data pruning with foundation models

Thanks to our Patrons who support us in Tier 2, 3, 4: 🙏

Don Rosenthal, Dres. Trost GbR, Julián Salazar, Edvard Grødem, Vignesh Valliappan, Mutual Information, Mike Ton

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔥 Optionally, pay us a coffee to help with our Coffee Bean production! ☕

▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀▀

🔗 Links:

#AICoffeeBreak #MsCoffeeBean #MachineLearning #AI #research

Video editing: Nils Trost

Beyond neural scaling laws – Paper Explained

10 minutes paper (episode 22); Beyond neural scaling laws

Using Scaling Laws for Smaller, but still Accurate Models

AI can't cross this line and we don't know why.

Understanding the Origins and Taxonomy of Neural Scaling Laws

Neural Scaling Laws

Neural Scaling Laws: how much more data we need?

Studying Scaling Laws for Transformer Architecture … | Shola Oyedele | OpenAI Scholars Demo Day 2021...

Stanford CS224N NLP with Deep Learning | Spring 2022 | Guest Lecture: Scaling Language Models

Explaining Neural Scaling Laws

Neural Scaling Laws and GPT-3

Scaling Laws for Large Language Models

Neural network architectures, scaling laws and transformers

Scaling laws for large language models

Architectures Beyond CNNs and Visual Scaling Laws (Neil Houlsby) | Tutorial (1/3)

Scaling Laws for Neural Language Models

Alex Wadell: Neural Scaling Laws - Fitting Scaling Laws for SciFMs (Tutorial 3)

Neural Scaling Laws and GPT-3 - Jared Kaplan

Eric Michaud—Scaling, Grokking, Quantum Interpretability

Adam Grzywaczewski | The scaling laws of AI Why neural networks continue to grow

Lecture 7: Explaining Neural Scaling Laws

WHY AND HOW OF SCALING LARGE LANGUAGE MODELS | NICHOLAS JOSEPH

Finding scaling laws for Reinforcement Learning

Exploring Neural Scaling Law and Data Pruning Methods For Node Classification on Large scale Graphs

Комментарии

0:13:16

0:13:16

0:29:43

0:29:43

0:01:15

0:01:15

0:24:07

0:24:07

1:05:24

1:05:24

0:42:00

0:42:00

0:09:27

0:09:27

0:16:23

0:16:23

1:14:49

1:14:49

1:17:52

1:17:52

1:35:50

1:35:50

0:02:07

0:02:07

0:35:14

0:35:14

1:20:24

1:20:24

0:30:44

0:30:44

0:24:07

0:24:07

1:04:37

1:04:37

1:15:21

1:15:21

0:48:23

0:48:23

0:26:00

0:26:00

0:56:13

0:56:13

0:09:43

0:09:43

0:35:24

0:35:24

0:14:08

0:14:08