filmov

tv

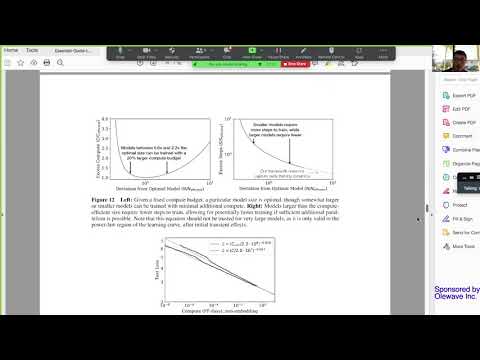

Scaling laws for large language models

This lecture presents scaling laws, which describe the relationship between model size (number of parameters), training dataset size (number of tokens), and the amount of compute available for training. At the end, I also introduce one of the weirdest phenomena in language model training: grokking.
