filmov

tv

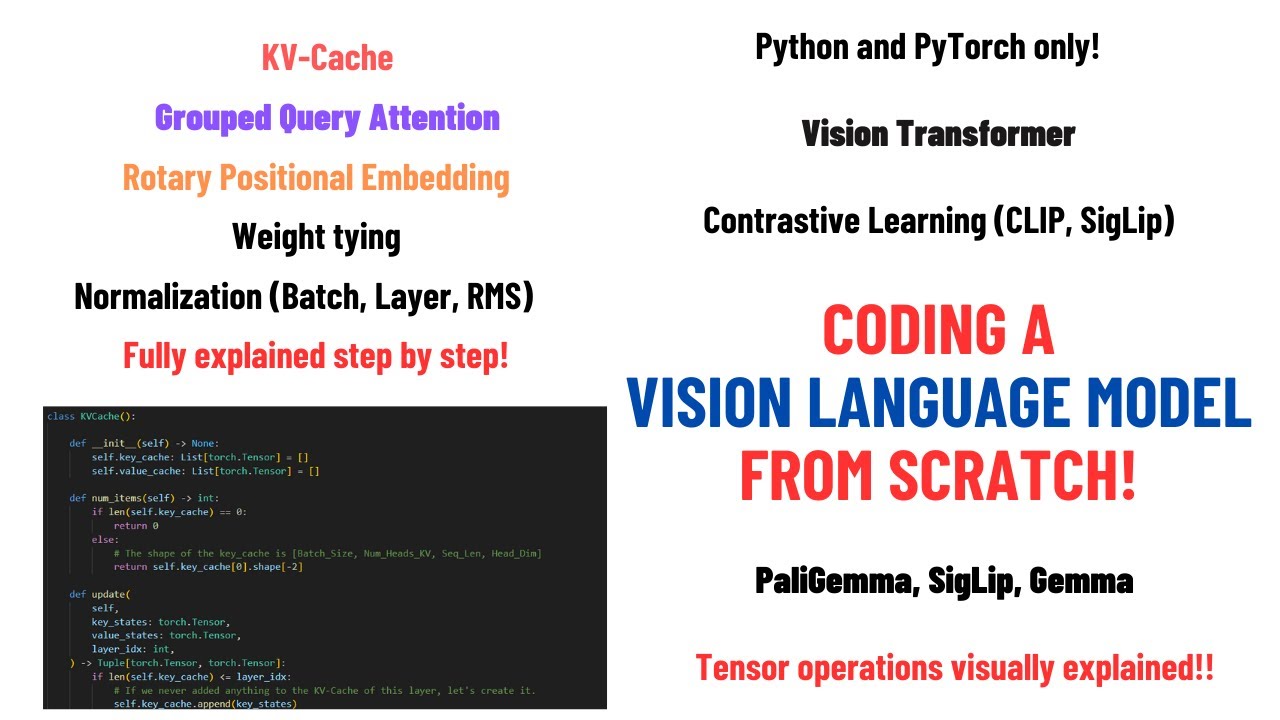

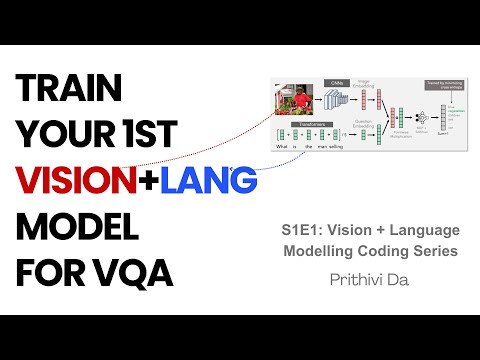

Coding a Multimodal (Vision) Language Model from scratch in PyTorch with full explanation

Показать описание

Full coding of a Multimodal (Vision) Language Model from scratch using only Python and PyTorch.

We will be coding the PaliGemma Vision Language Model from scratch while explaining all the concepts behind it:

- Transformer model (Embeddings, Positional Encoding, Multi-Head Attention, Feed Forward Layer, Logits, Softmax)

- Vision Transformer model

- Contrastive learning (CLIP, SigLip)

- Numerical stability of the Softmax and the Cross Entropy Loss

- Rotary Positional Embedding

- Multi-Head Attention

- Grouped Query Attention

- Normalization layers (Batch, Layer and RMS)

- KV-Cache (prefilling and token generation)

- Attention masks (causal and non-causal)

- Weight tying

- Top-P Sampling and Temperature

and much more!

All the topics will be explained using materials developed by me. For the Multi-Head Attention I have also drawn all the tensor operations that we do with the code so that we can have a visual representation of what happens under the hood.

Prerequisites:

🚀🚀 Join Writer 🚀🚀

Writer is the full-stack generative AI platform for enterprises. We make it easy for organizations to deploy AI apps and workflows that deliver impactful ROI.

We train our own models and we are looking for amazing researchers to join us!

Chapters

00:00:00 - Introduction

00:05:52 - Contrastive Learning and CLIP

00:16:50 - Numerical stability of the Softmax

00:23:00 - SigLip

00:26:30 - Why a Contrastive Vision Encoder?

00:29:13 - Vision Transformer

00:35:38 - Coding SigLip

00:54:25 - Batch Normalization, Layer Normalization

01:05:28 - Coding SigLip (Encoder)

01:16:12 - Coding SigLip (FFN)

01:20:45 - Multi-Head Attention (Coding + Explanation)

02:15:40 - Coding SigLip

02:18:30 - PaliGemma Architecture review

02:21:19 - PaliGemma input processor

02:40:56 - Coding Gemma

02:43:44 - Weight tying

02:46:20 - Coding Gemma

03:08:54 - KV-Cache (Explanation)

03:33:35 - Coding Gemma

03:52:05 - Image features projection

03:53:17 - Coding Gemma

04:02:45 - RMS Normalization

04:09:50 - Gemma Decoder Layer

04:12:44 - Gemma FFN (MLP)

04:16:02 - Multi-Head Attention (Coding)

04:18:30 - Grouped Query Attention

04:38:35 - Multi-Head Attention (Coding)

04:43:26 - KV-Cache (Coding)

04:47:44 - Multi-Head Attention (Coding)

04:56:00 - Rotary Positional Embedding

05:23:40 - Inference code

05:32:50 - Top-P Sampling

05:40:40 - Inference code

05:43:40 - Conclusion

We will be coding the PaliGemma Vision Language Model from scratch while explaining all the concepts behind it:

- Transformer model (Embeddings, Positional Encoding, Multi-Head Attention, Feed Forward Layer, Logits, Softmax)

- Vision Transformer model

- Contrastive learning (CLIP, SigLip)

- Numerical stability of the Softmax and the Cross Entropy Loss

- Rotary Positional Embedding

- Multi-Head Attention

- Grouped Query Attention

- Normalization layers (Batch, Layer and RMS)

- KV-Cache (prefilling and token generation)

- Attention masks (causal and non-causal)

- Weight tying

- Top-P Sampling and Temperature

and much more!

All the topics will be explained using materials developed by me. For the Multi-Head Attention I have also drawn all the tensor operations that we do with the code so that we can have a visual representation of what happens under the hood.

Prerequisites:

🚀🚀 Join Writer 🚀🚀

Writer is the full-stack generative AI platform for enterprises. We make it easy for organizations to deploy AI apps and workflows that deliver impactful ROI.

We train our own models and we are looking for amazing researchers to join us!

Chapters

00:00:00 - Introduction

00:05:52 - Contrastive Learning and CLIP

00:16:50 - Numerical stability of the Softmax

00:23:00 - SigLip

00:26:30 - Why a Contrastive Vision Encoder?

00:29:13 - Vision Transformer

00:35:38 - Coding SigLip

00:54:25 - Batch Normalization, Layer Normalization

01:05:28 - Coding SigLip (Encoder)

01:16:12 - Coding SigLip (FFN)

01:20:45 - Multi-Head Attention (Coding + Explanation)

02:15:40 - Coding SigLip

02:18:30 - PaliGemma Architecture review

02:21:19 - PaliGemma input processor

02:40:56 - Coding Gemma

02:43:44 - Weight tying

02:46:20 - Coding Gemma

03:08:54 - KV-Cache (Explanation)

03:33:35 - Coding Gemma

03:52:05 - Image features projection

03:53:17 - Coding Gemma

04:02:45 - RMS Normalization

04:09:50 - Gemma Decoder Layer

04:12:44 - Gemma FFN (MLP)

04:16:02 - Multi-Head Attention (Coding)

04:18:30 - Grouped Query Attention

04:38:35 - Multi-Head Attention (Coding)

04:43:26 - KV-Cache (Coding)

04:47:44 - Multi-Head Attention (Coding)

04:56:00 - Rotary Positional Embedding

05:23:40 - Inference code

05:32:50 - Top-P Sampling

05:40:40 - Inference code

05:43:40 - Conclusion

Комментарии

5:46:05

5:46:05

0:06:44

0:06:44

0:13:08

0:13:08

0:09:10

0:09:10

0:11:19

0:11:19

0:12:19

0:12:19

0:33:33

0:33:33

0:22:04

0:22:04

2:12:18

2:12:18

0:34:00

0:34:00

0:48:55

0:48:55

0:49:05

0:49:05

0:48:07

0:48:07

0:22:14

0:22:14

0:34:22

0:34:22

0:13:21

0:13:21

![[1hr Talk] Intro](https://i.ytimg.com/vi/zjkBMFhNj_g/hqdefault.jpg) 0:59:48

0:59:48

0:05:34

0:05:34

1:31:14

1:31:14

0:09:11

0:09:11

![[CVPR2023 Tutorial Talk]](https://i.ytimg.com/vi/Wb5ZkZUNYc4/hqdefault.jpg) 0:26:07

0:26:07

0:25:43

0:25:43

0:00:48

0:00:48

0:34:27

0:34:27