5.3 Prox Gradient -- Rates of Convergence

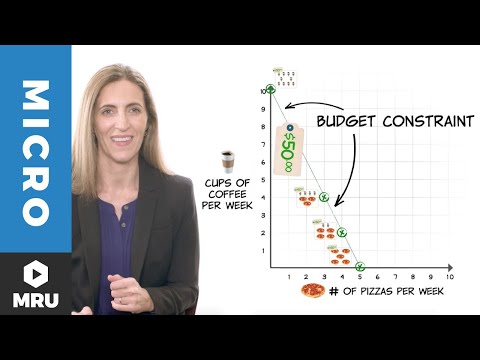
