
LLM Jargons Explained: Part 3 - Sliding Window Attention


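In this video, I take a detailed look at Sliding Window Attention (SWA), a technique that lets Large Language Models (LLMs) be trained efficiently on longer documents by restricting each token's attention to a fixed-size window of nearby tokens. Because each token attends to at most a window's worth of others, attention cost grows linearly with sequence length rather than quadratically. The concept was discussed at length in the Longformer paper and has more recently been adopted by Mistral 7B, where it reduces computational cost.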
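The following is a minimal sketch of the causal sliding-window variant used by decoder models such as Mistral 7B. It assumes a single attention head and plain Python lists with no framework. Query position `i` attends only to key positions `i - window + 1` through `i`. The function names and the `window` parameter are illustrative and are not taken from the video or from any library. Longformer's original formulation differs: its window is centred on each token, and it adds global attention on selected tokens. This sketch omits both.

```python
import math


def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query i may attend to key j.

    Causal sliding window: token i sees itself and the previous window - 1 tokens.
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def sliding_window_attention(q, k, v, window: int):
    """Naive single-head scaled dot-product attention limited to a sliding window.

    q, k, v: lists of vectors (lists of floats), one vector per position.
    Only the scores inside the window are computed: about n * window
    instead of the n * n needed by full attention.
    """
    n = len(q)
    d = len(q[0])
    out = []
    for i in range(n):
        # Earliest key position still inside query i's window.
        lo = max(0, i - window + 1)
        keys = range(lo, i + 1)
        scores = [sum(q[i][t] * k[j][t] for t in range(d)) / math.sqrt(d) for j in keys]
        # Numerically stable softmax over the in-window scores only.
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        # Weighted average of the in-window value vectors.
        out.append([sum(w * v[j][t] for w, j in zip(weights, keys)) / z
                    for t in range(len(v[0]))])
    return out


if __name__ == "__main__":
    for row in sliding_window_mask(4, 2):
        print(["x" if allowed else "." for allowed in row])
```

Each row of the mask has at most `window` allowed entries, so the work per token is bounded by the window size rather than by the sequence length. A production implementation would vectorise this on a GPU instead of looping in Python.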


LLM Jargons Explained

LLM Jargons Explained: Part 2 - Multi Query & Group Query Attent

LLM Explained | What is LLM

How large language models work, a visual intro to transformers | Chapter 5, Deep Learning

Vectoring Words (Word Embeddings) - Computerphile

Bayes theorem, the geometry of changing beliefs

How ChatGPT Works Technically | ChatGPT Architecture

Multi Head Attention Part 2: Entire mathematics explained

what it’s like to work at GOOGLE…

Illustrated Guide to Transformers Neural Network: A step by step explanation

Ultimate LLM Guide: From Theory to Practice - Part 3 Introduction - LLM Family, Transformer

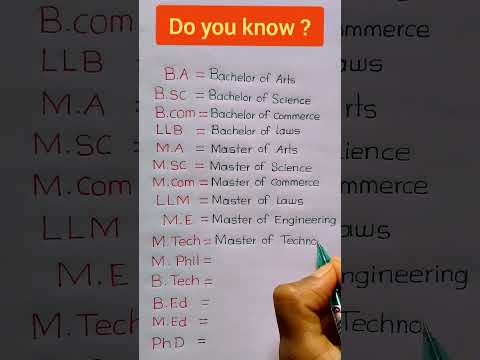

B.A/B.Sc/B.Com/LLB/M.A/M.sc/M.com/LLM/M.E/M.Tech/PhD Full Form

What is an API (in 5 minutes)

LLM vs NLP | Kevin Johnson

What are the LLM’s Top-P + Top-K ?

LLM Fine Tuning - Explained!

But what is a neural network? | Chapter 1, Deep learning

Anthropic's new improved RAG: Explained (for all LLM)

Machine Learning | What Is Machine Learning? | Introduction To Machine Learning | 2024 | Simplilearn

What Is DBT and Why Is It So Popular - Intro To Data Infrastructure Part 3

Mastering AI Jargon - Your Guide to OpenAI & LLM Terms

What is international law? An animated explainer

AI inbreeding ouroboros #language #linguistics #ai #llm #chatgpt #openai #etymology
