Value Iteration Algorithm - Dynamic Programming Algorithms in Python (Part 9)

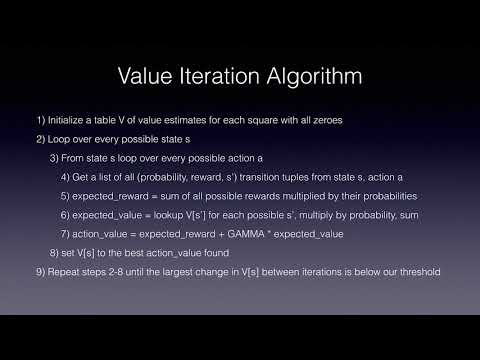

In this video, we show how to code the value iteration algorithm in Python.

This video series is a beginner-level tutorial on dynamic programming algorithms. It covers several dynamic programming problems and shows how to code each of them in Python, so it also works as a Python tutorial for beginners who are learning algorithms.

❔Answers to

↪︎ What is a Markov decision process (MDP)?

↪︎ How can I solve a Markov decision process problem using the value iteration algorithm?

↪︎ How can I code the value iteration algorithm?
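For readers who want a concrete starting point for the last question, here is a minimal sketch of value iteration on a tiny hand-made MDP. The dictionary encoding, the state and action names, and the `gamma` and `theta` defaults are illustrative assumptions and are not taken from the video.

```python
from typing import Dict, List, Tuple

# Assumed encoding: mdp[state][action] is a list of
# (probability, next_state, reward) outcomes. A state with no
# actions is treated as terminal.
Outcome = Tuple[float, str, float]
MDP = Dict[str, Dict[str, List[Outcome]]]


def action_value(outcomes, values, gamma):
    """Expected return of one action given the current value estimates."""
    return sum(p * (r + gamma * values[nxt]) for p, nxt, r in outcomes)


def value_iteration(mdp: MDP, gamma: float = 0.9, theta: float = 1e-8):
    values = {state: 0.0 for state in mdp}
    while True:
        delta = 0.0
        for state, actions in mdp.items():
            if not actions:  # terminal state keeps value 0
                continue
            # Bellman optimality backup: take the best action value.
            best = max(action_value(o, values, gamma) for o in actions.values())
            delta = max(delta, abs(best - values[state]))
            values[state] = best  # in-place update within the sweep
        if delta < theta:  # stop once a full sweep barely changes anything
            break
    # Greedy policy with respect to the final value estimates.
    policy = {
        state: max(actions, key=lambda a: action_value(actions[a], values, gamma))
        for state, actions in mdp.items()
        if actions
    }
    return values, policy


# Hypothetical three-state example, made up for illustration.
example_mdp: MDP = {
    "a": {"stay": [(1.0, "a", 0.0)], "go": [(1.0, "b", 1.0)]},
    "b": {"finish": [(1.0, "end", 10.0)]},
    "end": {},
}

if __name__ == "__main__":
    values, policy = value_iteration(example_mdp)
    print(values)
    print(policy)
```

The sketch updates values in place during each sweep, which is one common variant. Keeping a separate copy of the previous sweep's values would also be valid value iteration.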

⌚ Content:

↪︎ 0:00 - Intro

↪︎ 0:24 - Explanation of the Markov decision process

↪︎ 1:09 - Value iteration algorithm to solve an MDP problem

↪︎ 2:16 - How to code the value iteration algorithm

↪︎ 4:17 - An example MDP: Gambler's problem
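The Gambler's problem in the last chapter can be sketched as follows. This assumes the common textbook formulation, which the description does not spell out: the gambler holds a capital between 1 and 99, stakes a whole amount on a coin flip that comes up heads with probability `p_heads`, and wins on reaching `goal`. The function name, the parameters, and their defaults are hypothetical, and the video's own code may differ.

```python
def gamblers_problem(goal=100, p_heads=0.4, theta=1e-10):
    """Value iteration for an assumed textbook-style Gambler's problem."""
    # values[s] estimates the probability of reaching `goal` from capital s.
    # Assumption: the +1 reward for winning is folded into values[goal] = 1,
    # and there is no discounting.
    values = [0.0] * (goal + 1)
    values[goal] = 1.0

    def stake_value(capital, stake):
        # Heads: capital grows by the stake. Tails: it shrinks by the stake.
        return (p_heads * values[capital + stake]
                + (1 - p_heads) * values[capital - stake])

    while True:
        delta = 0.0
        for capital in range(1, goal):
            # Never stake more than you have or more than you need.
            stakes = range(1, min(capital, goal - capital) + 1)
            best = max(stake_value(capital, a) for a in stakes)
            delta = max(delta, abs(best - values[capital]))
            values[capital] = best
        if delta < theta:
            break

    # Greedy policy. Rounding makes near-ties resolve to the smallest stake.
    policy = [0] * (goal + 1)
    for capital in range(1, goal):
        stakes = range(1, min(capital, goal - capital) + 1)
        policy[capital] = max(
            stakes, key=lambda a: round(stake_value(capital, a), 9)
        )
    return values, policy


if __name__ == "__main__":
    values, policy = gamblers_problem()
    print(round(values[50], 4), policy[50])
```

With `p_heads` below 0.5, the value at a capital of 50 should come out close to `p_heads` itself, since staking everything from 50 wins with exactly that probability. Many stakes tie in this problem, so the printed policy depends on the tie-breaking rule chosen here.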

🌎 Follow Coding Perspective:

🎥 Video series:

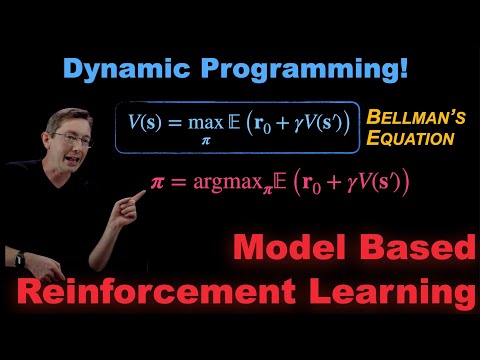

Model Based Reinforcement Learning: Policy Iteration, Value Iteration, and Dynamic Programming

Solve Markov Decision Processes with the Value Iteration Algorithm - Computerphile

Value Iteration Algorithm - Dynamic Programming Algorithms in Python (Part 9)

Bellman Equations, Dynamic Programming, Generalized Policy Iteration | Reinforcement Learning Part 2

RL 6: Policy iteration and value iteration - Reinforcement learning

How to use Bellman Equation Reinforcement Learning | Bellman Equation Machine Learning Mahesh Huddar

Bellman Equation - Explained!

4 Steps to Solve Any Dynamic Programming (DP) Problem

Markov Decision Process (MDP) - 5 Minutes with Cyrill

Value Iteration in Deep Reinforcement Learning

27. Value Iteration || End to End AI Tutorial

Value Iteration Visualization.

2.02 Dynamic Programming: Value Iteration

Dynamic Programming Tutorial for Reinforcement Learning

Value Iteration and Q-Learning Reinforcement Learning Algorithms

Optimal Policies and Value Iteration

Value Iteration (tutorial)

Value Iteration and Policy Iteration - Model Based Reinforcement Learning Method - Machine Learning

RTDP | Real Time Dynamic Programming

Value Iteration in POMDPs - 1

Value Iteration

Reinforcement Learning: Value Iteration

Dynamic Programming lecture #1 - Fibonacci, iteration vs recursion

2110593 Reinforcement Learning L 2 - MDP, Policy Iteration, Value iteration, Dynamic Programming
