Value Iteration and Policy Iteration - Model Based Reinforcement Learning Method - Machine Learning

Model Based Reinforcement Learning

In model-based reinforcement learning, the environment is modelled as a Markov Decision Process (MDP) with the following elements:

* A set of states

* A set of actions available in each state

* Transition probability function from the current state (s_t) to the next state (s_{t+1}) under action a

* Reward function: the reward received on the transition from the current state (s_t) to the next state (s_{t+1}) under action a

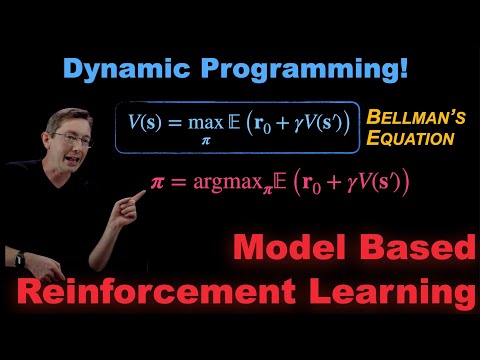
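For reference, the Bellman optimality equations that this kind of model relies on can be written in standard textbook notation as below. The symbols P (transition probability), R (reward), and the discount factor γ are notation assumed here for illustration and are not taken from the original text.

```latex
\begin{aligned}
Q^*(s, a) &= \sum_{s'} P(s' \mid s, a)\,\bigl[ R(s, a, s') + \gamma\, V^*(s') \bigr] \\
V^*(s)    &= \max_{a} Q^*(s, a)
\end{aligned}
```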

There are two common approaches to finding the optimal policy using the recursive relation of the Bellman equation:

1. Value Iteration: In this method, the optimal policy is obtained by iteratively computing the optimal state value function V(s) for each state until it converges. The policy function is not computed explicitly during iteration; instead, the optimal state value function is computed by choosing, for each state, the action that maximizes the Q value.

Algorithm
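A minimal sketch of value iteration in plain Python, assuming a small tabular MDP. The nested-list layout `P[s][a][s2]` and `R[s][a][s2]`, the discount factor `gamma`, the stopping tolerance `tol`, and the two-state toy MDP are all assumptions made for illustration, not part of the original description.

```python
def q_value(P, R, V, s, a, gamma):
    # Expected return of taking action a in state s, then following values V.
    return sum(p * (R[s][a][s2] + gamma * V[s2]) for s2, p in enumerate(P[s][a]))


def value_iteration(P, R, gamma=0.9, tol=1e-10, max_iters=10_000):
    n_states = len(P)
    V = [0.0] * n_states
    for _ in range(max_iters):
        # Bellman optimality backup: V(s) <- max_a Q(s, a).
        V_new = [
            max(q_value(P, R, V, s, a, gamma) for a in range(len(P[s])))
            for s in range(n_states)
        ]
        delta = max(abs(new - old) for new, old in zip(V_new, V))
        V = V_new
        if delta < tol:
            break
    # The policy is only extracted at the end, greedily with respect to V.
    policy = [
        max(range(len(P[s])), key=lambda a: q_value(P, R, V, s, a, gamma))
        for s in range(n_states)
    ]
    return V, policy


# Hypothetical toy MDP with 2 states and 2 actions (deterministic transitions).
# P[s][a][s2] is the probability of moving from s to s2 under action a.
P = [
    [[1.0, 0.0], [0.0, 1.0]],  # state 0: action 0 stays, action 1 moves to state 1
    [[0.0, 1.0], [1.0, 0.0]],  # state 1: action 0 stays, action 1 moves to state 0
]
# R[s][a][s2] is the reward received on that transition.
R = [
    [[0.0, 0.0], [0.0, 1.0]],
    [[0.0, 2.0], [0.0, 0.0]],
]

V, policy = value_iteration(P, R, gamma=0.5)
print(V, policy)
```

In this toy MDP, staying in state 1 pays a reward of 2 on every step, so with `gamma=0.5` the values work out to about 3 for state 0 and 4 for state 1, and the greedy policy moves from state 0 to state 1 and then stays there.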

2. Policy Iteration: In this method, we start with a baseline policy and improve it iteratively to obtain the optimal policy. There are two steps:

1. Policy evaluation: in this step, we evaluate the value function for the current policy.

2. Policy improvement: in this step, the policy is improved by selecting, in each state, the action that maximizes the Q value.

Algorithm
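A minimal sketch of policy iteration in plain Python, alternating the two steps described above. The nested-list layout `P[s][a][s2]` and `R[s][a][s2]`, the baseline policy (always action 0), the iterative form of policy evaluation, and the two-state toy MDP are assumptions made for illustration.

```python
def q_value(P, R, V, s, a, gamma):
    # Expected return of taking action a in state s, then following values V.
    return sum(p * (R[s][a][s2] + gamma * V[s2]) for s2, p in enumerate(P[s][a]))


def policy_evaluation(P, R, policy, gamma, tol=1e-10, max_iters=10_000):
    # Step 1: iterate the Bellman expectation backup for a fixed policy.
    V = [0.0] * len(P)
    for _ in range(max_iters):
        V_new = [q_value(P, R, V, s, policy[s], gamma) for s in range(len(P))]
        delta = max(abs(new - old) for new, old in zip(V_new, V))
        V = V_new
        if delta < tol:
            break
    return V


def policy_improvement(P, R, V, gamma):
    # Step 2: in each state pick the action with the largest Q value.
    return [
        max(range(len(P[s])), key=lambda a: q_value(P, R, V, s, a, gamma))
        for s in range(len(P))
    ]


def policy_iteration(P, R, gamma=0.9, max_rounds=1000):
    policy = [0] * len(P)  # assumed baseline policy: always take action 0
    V = policy_evaluation(P, R, policy, gamma)
    for _ in range(max_rounds):
        new_policy = policy_improvement(P, R, V, gamma)
        if new_policy == policy:
            break  # policy is stable, so it is greedy w.r.t. its own values
        policy = new_policy
        V = policy_evaluation(P, R, policy, gamma)
    return V, policy


# Hypothetical toy MDP with 2 states and 2 actions (deterministic transitions).
P = [
    [[1.0, 0.0], [0.0, 1.0]],  # state 0: action 0 stays, action 1 moves to state 1
    [[0.0, 1.0], [1.0, 0.0]],  # state 1: action 0 stays, action 1 moves to state 0
]
R = [
    [[0.0, 0.0], [0.0, 1.0]],
    [[0.0, 2.0], [0.0, 0.0]],
]

V, policy = policy_iteration(P, R, gamma=0.5)
print(V, policy)
```

Unlike value iteration, the policy is held explicitly here and each round fully evaluates it before improving it; on this toy MDP the policy stabilizes after a single improvement.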

Shortcomings of Value Iteration and Policy Iteration Methods

1. These methods are computationally feasible only for small, finite Markov Decision Processes, i.e., those with a small number of time steps and a small number of states.

2. These methods cannot be used for games or processes where the model of the environment, i.e., the Markov Decision Process, is not known beforehand. In such cases we are given only a simulation model of the environment, and the only way to collect information about the environment is by interacting with it.

If the model of the environment is not known, then Model Free Reinforcement Learning Techniques can be used.

Monte Carlo Method

This is a Model Free Reinforcement Learning Technique. It can be used where we are given a simulation model of the environment and the only way to collect information about the environment is by interacting with it.

This method works along the lines of the policy iteration method. There are two steps:

1. Policy evaluation: in this step, an estimate of the action value function (Q value) for each state-action pair (s, a) under a given policy is computed by averaging the sampled returns that originate from (s, a) over time. Given sufficient time, this procedure can construct precise estimates of Q(s, a) for all state-action pairs.

2. Policy improvement: the improved policy is obtained by a greedy approach with respect to Q; given a state s, the new policy selects the action that maximizes Q(s, a) obtained in step 1.

Monte Carlo Method Algorithm
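A minimal sketch of the two steps above, using first-visit return averaging with exploring starts (one common Monte Carlo variant; the original text does not say which variant it has in mind). The corridor simulator `step`, the constants `N_STATES` and `ACTIONS`, the discount factor, and the `max_steps` cap on episode length are hypothetical and exist only to make the example self-contained.

```python
import random

N_STATES = 3      # non-terminal corridor cells 0, 1, 2
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Hypothetical simulator: returns (next_state, reward, done)."""
    nxt = state + (1 if action == 1 else -1)
    if nxt < 0:
        return None, 0.0, True   # left the corridor on the left: no reward
    if nxt >= N_STATES:
        return None, 1.0, True   # reached the goal on the right: reward 1
    return nxt, 0.0, False


def monte_carlo_control(episodes=5000, gamma=0.9, max_steps=50, seed=0):
    rng = random.Random(seed)
    Q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    counts = {key: 0 for key in Q}
    policy = [0] * N_STATES
    for _ in range(episodes):
        # Exploring start: a random state-action pair, then follow the policy.
        s, a = rng.randrange(N_STATES), rng.choice(ACTIONS)
        trajectory = []
        for _step in range(max_steps):  # cap guards against endless loops
            s_next, r, done = step(s, a)
            trajectory.append((s, a, r))
            if done:
                break
            s, a = s_next, policy[s_next]
        # Policy evaluation: average the first-visit return of each pair.
        G = 0.0
        first_visit_return = {}
        for s_t, a_t, r_t in reversed(trajectory):
            G = gamma * G + r_t
            first_visit_return[(s_t, a_t)] = G  # earliest visit is written last
        for key, G_first in first_visit_return.items():
            counts[key] += 1
            Q[key] += (G_first - Q[key]) / counts[key]  # incremental mean
        # Policy improvement: act greedily with respect to Q in visited states.
        for s_t in {state for state, _ in first_visit_return}:
            policy[s_t] = max(ACTIONS, key=lambda act: Q[(s_t, act)])
    return Q, policy


Q, policy = monte_carlo_control()
print(policy)
for key in sorted(Q):
    print(key, round(Q[key], 3))
```

Only the simulator is queried here; no transition probabilities or reward tables are passed to the learner, which is what distinguishes this from the model-based methods above.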

Shortcomings of Monte Carlo Method

The Monte Carlo Method has several shortcomings:

1. It is feasible only for games with a small number of states, actions and steps.

2. Samples are used inefficiently, as a long trajectory improves the estimate of only one state-action pair.

3. The procedure may spend too much time evaluating suboptimal policies.

4. It works for episodic problems only.
