Reliable Interpretability - Explaining AI Model Predictions | Sara Hooker @PyBay2018

Abstract

How can we explain how deep neural networks arrive at decisions? Feature representations are complex and opaque to the human eye; instead, a set of interpretability tools intuit what the model has learned by looking at which inputs it pays attention to. This talk introduces some of the challenges associated with identifying these salient inputs and discusses desirable properties that methods should fulfill in order to build trust between humans and algorithms.

Speaker Bio

Sara Hooker is a researcher at Google Brain doing deep learning research on reliable explanations of model predictions for black-box models. Her main research interests gravitate towards interpretability, model compression and security. In 2014, she founded Delta Analytics, a non-profit dedicated to bringing technical capacity to help non-profits across the world use machine learning for good. She grew up in Africa, in Mozambique, Lesotho, Swaziland, South Africa, and Kenya. Her family now lives in Monrovia, Liberia.

This and other PyBay2018 videos are brought to you by our Gold Sponsor Cisco!

Interpretability Beyond Feature Attribution

Explainable AI explained! | #3 LIME

25. Interpretability

Explaining AI

Introduction to Explainable AI (ML Tech Talks)

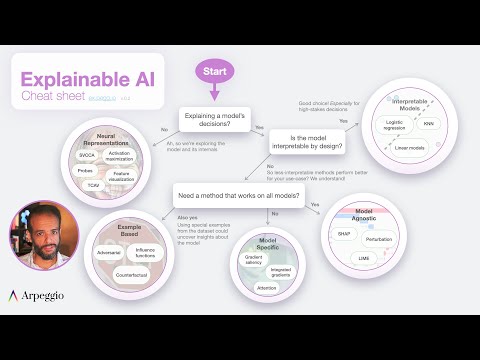

Explainable AI Cheat Sheet - Five Key Categories

Why Large Language Models Hallucinate

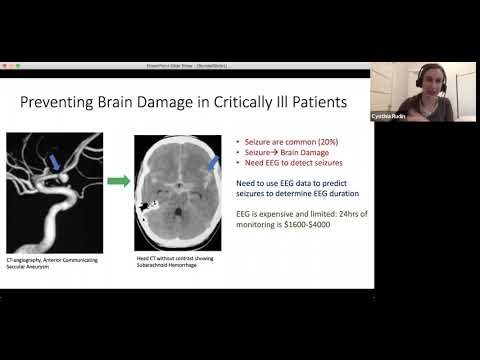

Stanford Seminar - ML Explainability Part 1 I Overview and Motivation for Explainability

Building Explainable Machine Learning Systems: The Good, the Bad, and the Ugly

Explainable AI explained! | #1 Introduction

Interpretability vs. Explainability in Machine Learning

What Is Explainable AI? | Explainable vs Interpretable Machine Learning

Counterfactual Explanations: The Future of Explainable AI | Aviv Ben Arie

SHAP values for beginners | What they mean and their applications

Explainable AI explained! | #2 By-design interpretable models with Microsofts InterpretML

Why AI cannot be explained | Rickard Brüel-Gabrielsson | TEDxBoston

Making AI more trusted, by making it explainable.

Explainable AI for Science and Medicine

Explainable AI explained! | #4 SHAP

USENIX Security '18-Q: Why Do Keynote Speakers Keep Suggesting That Improving Security Is Possi...

#047 Interpretable Machine Learning - Christoph Molnar

MIT 6.S191 (2020): Neurosymbolic AI

AI 101 with Brandon Leshchinskiy
