Explainable AI explained! | #3 LIME

▬▬ Resources ▬▬▬▬▬▬▬▬▬▬▬

▬▬ Timestamps ▬▬▬▬▬▬▬▬▬▬▬

00:00 Introduction

02:31 Paper explanation

03:34 The math / formula

08:35 LIME in practice

▬▬ Support me if you like 🌟

Explainable AI explained! | #3 LIME

Understanding Convolutional Neural Networks | Part 3 / 3 - Transfer Learning and Explainable AI

Explainable AI, Session 3: Explainability Options

What is Explainable AI?

Explainable AI explained! | #2 By-design interpretable models with Microsofts InterpretML

Explainable AI explained! | #1 Introduction

Explainable AI with Shapley Values (Part 3: KernelSHAP)

Explainable AI explained! | #4 SHAP

Generative AI in 2 hours | LLM Basics, RAGs, Prompt Engineering | Satyajit Pattnaik

Stanford Seminar - ML Explainability Part 3 I Post hoc Explanation Methods

Introduction to Explainable AI (ML Tech Talks)

What Is Explainable AI?

What is Explainable AI?

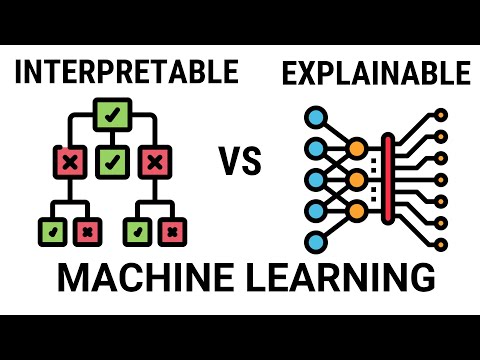

Interpretable vs Explainable Machine Learning

Sally Radwan - What does Explainable AI Really Mean? [PWL NYC]

AI Explained: A Data Scientist’s Guide to Explainable AI

Probing Classifiers: A Gentle Intro (Explainable AI for Deep Learning)

Understanding LIME | Explainable AI

An Explanation of What, Why, and how of Explainable AI (XAI) | Bahador Khaleghi

What is Artificial Intelligence? | Artificial Intelligence In 5 Minutes | AI Explained | Simplilearn

A Guide to Explainable AI | Artificial Intelligence Masterclass for Beginners

Explainable AI Lab

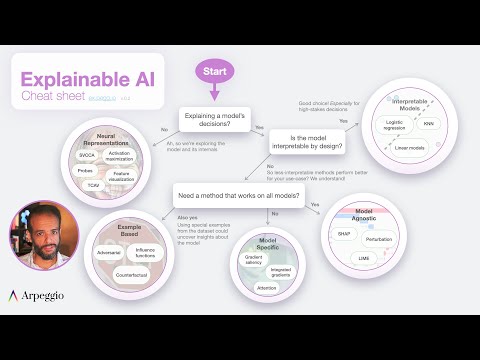

Explainable AI Cheat Sheet - Five Key Categories

Explainable AI, Session 4: Intro to LIME
