
Neural Ordinary Differential Equations


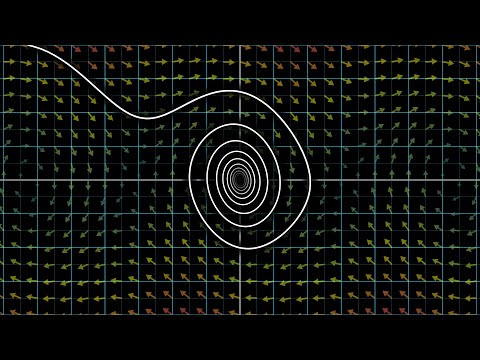

This talk is based on the first part of the paper "Neural Ordinary Differential Equations". The authors introduce continuous-depth residual networks, which they treat as ordinary differential equations (ODEs). Accordingly, the input of the network is treated as the initial state of the ODE, and the output as the solution computed by an ODE solver. One of the main advantages of this approach is a memory cost that stays constant with respect to model depth. However, training such networks requires introducing an adjoint function (a standard technique from optimal control theory). Curiously, solving the ODE for the adjoint function can be viewed as a continuous analogue of backpropagation.
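A minimal sketch of both ideas in plain Python, under loudly stated assumptions: the scalar dynamics f(z, t, θ) = θz, the loss L = z(T)²/2, and the fixed-step RK4 solver are illustrative choices, not the setup used in the paper. The forward pass treats the input z0 as the initial state and returns z(T). The backward pass integrates the augmented state [z, a, ∂L/∂θ] from T back to 0, where the adjoint a(t) = ∂L/∂z(t) obeys da/dt = -a·∂f/∂z and the parameter gradient accumulates -a·∂f/∂θ. The function names `forward` and `adjoint_grad` are hypothetical.

```python
import math

def f(z, theta):
    """Assumed toy dynamics dz/dt = theta * z (scalar, time-invariant)."""
    return theta * z

def rk4(dynamics, state, t0, t1, steps):
    """Fixed-step classical Runge-Kutta integrator for a list-valued state.
    If t1 < t0 the step is negative, i.e. it integrates backward in time."""
    h = (t1 - t0) / steps
    t = t0
    for _ in range(steps):
        k1 = dynamics(state, t)
        k2 = dynamics([s + h / 2 * k for s, k in zip(state, k1)], t + h / 2)
        k3 = dynamics([s + h / 2 * k for s, k in zip(state, k2)], t + h / 2)
        k4 = dynamics([s + h * k for s, k in zip(state, k3)], t + h)
        state = [s + h / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4)]
        t += h
    return state

def forward(z0, theta, T, steps=100):
    # The "network output" is the ODE solution at time T. Only the current
    # state is kept, so memory does not grow with the number of steps.
    (zT,) = rk4(lambda s, t: [f(s[0], theta)], [z0], 0.0, T, steps)
    return zT

def adjoint_grad(z0, theta, T, steps=100):
    zT = forward(z0, theta, T, steps)
    loss = 0.5 * zT ** 2
    aT = zT  # a(T) = dL/dz(T) for the assumed loss L = z(T)^2 / 2

    def augmented(s, t):
        z, a, _ = s
        # For f = theta * z: df/dz = theta and df/dtheta = z.
        return [f(z, theta), -a * theta, -a * z]

    # Solve the augmented system backward from T to 0, recomputing z(t)
    # along the way instead of storing the forward trajectory.
    z_back, a0, dL_dtheta = rk4(augmented, [zT, aT, 0.0], T, 0.0, steps)
    return loss, z_back, a0, dL_dtheta

z0, theta, T = 1.0, 0.5, 2.0
loss, z_back, dL_dz0, dL_dtheta = adjoint_grad(z0, theta, T)
exact = T * (z0 * math.exp(theta * T)) ** 2
print(dL_dtheta, exact)
```

Because this toy problem has a closed form, the adjoint result can be checked directly: dL/dθ = T·z(T)², the returned a0 equals dL/dz0 = z0·e^{2θT}, and the backward solve reconstructs z0 without having stored the forward pass.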

