David Duvenaud | Reflecting on Neural ODEs | NeurIPS 2019

Summary:

We introduce a new family of deep neural network models. Instead of specifying a discrete sequence of hidden layers, we parameterize the derivative of the hidden state using a neural network. The output of the network is computed using a black-box differential equation solver. These continuous-depth models have constant memory cost, adapt their evaluation strategy to each input, and can explicitly trade numerical precision for speed. We demonstrate these properties in continuous-depth residual networks and continuous-time latent variable models. We also construct continuous normalizing flows, a generative model that can train by maximum likelihood, without partitioning or ordering the data dimensions. For training, we show how to scalably backpropagate through any ODE solver, without access to its internal operations. This allows end-to-end training of ODEs within larger models.
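As a minimal sketch of the idea in the summary, the block below treats a small function as the derivative of the hidden state and obtains the output by integrating it from t0 to t1. Everything named here is an assumption made for illustration: the one-layer tanh dynamics `f`, the parameter layout `theta = (W, b)`, and the fixed-step Runge-Kutta integrator `odeint`, which stands in for the black-box adaptive solver the summary describes. It does not implement the memory-efficient backpropagation through the solver mentioned in the summary; it only shows the forward pass and how the step count trades precision for compute.

```python
import math

def f(h, t, theta):
    """Hypothetical dynamics dh/dt = W @ tanh(h) + b (t is unused here)."""
    W, b = theta
    act = [math.tanh(x) for x in h]
    return [sum(w * a for w, a in zip(row, act)) + b_i
            for row, b_i in zip(W, b)]

def rk4_step(func, h, t, dt, theta):
    """One classical fourth-order Runge-Kutta step of size dt."""
    k1 = func(h, t, theta)
    k2 = func([x + 0.5 * dt * k for x, k in zip(h, k1)], t + 0.5 * dt, theta)
    k3 = func([x + 0.5 * dt * k for x, k in zip(h, k2)], t + 0.5 * dt, theta)
    k4 = func([x + dt * k for x, k in zip(h, k3)], t + dt, theta)
    return [x + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
            for x, a, b, c, d in zip(h, k1, k2, k3, k4)]

def odeint(func, h0, t0, t1, theta, steps):
    """Fixed-step stand-in for a black-box ODE solver (an assumption)."""
    h, t = list(h0), t0
    dt = (t1 - t0) / steps
    for _ in range(steps):
        h = rk4_step(func, h, t, dt, theta)
        t += dt
    return h

# Illustrative parameters and input, not taken from the talk.
theta = ([[0.0, 1.0], [-1.0, 0.0]], [0.0, 0.0])
h0 = [1.0, 0.0]

coarse = odeint(f, h0, 0.0, 1.0, theta, steps=4)    # cheaper, less precise
fine = odeint(f, h0, 0.0, 1.0, theta, steps=256)    # costlier, more precise
print("coarse:", coarse)
print("fine:  ", fine)
```

The "depth" of this model is the integration interval rather than a layer count, so the same parameters can be evaluated with few or many solver steps depending on the precision required.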

David Duvenaud - A farewell to GPs: A roadmap for ultra-scalable simulation and inference in SPDEs

David Duvenaud: Neural Ordinary Equations

CAIDA Talk - Dec 6, 2019 - David Duvenaud

David Duvenaud | Explaining decisions by generating counterfactuals

David Duvenaud: Self-tuning Gradient Estimators for Discrete Random Variables

David Duvenaud (U of T) --Latent Stochastic Differential Equations

David Duvenaud: When should we make our models continuous in time?

Latent Stochastic Differential Equations for Irregularly-Sampled Time Series - David Duvenaud

Neural Ordinary Differential Equations with David Duvenaud - #364

ODE | Neural Ordinary Differential Equations - Best Paper Awards NeurIPS

David Duvenaud - Handling messy time series with large latent-variable models

Composing Graphical Models with Neural Networks for Structured Representations and Fast Inference

Composing Graphical Models With Neural Networks, w/ David Duvenaud - #96

MIA Special Seminar: David Duvenaud, It's time to talk about irregularly-sampled time series

BrainODE: Dynamic Brain Signal Analysis via Graph-Aided Neural Ordinary Differential Equ

Will Grathwohl: Scalable Reversible Generative Models with Free-form Continuous Dynamics

Neural Ordinary Differential Equation

NeurIPS 2020 Tutorial: Deep Implicit Layers

NeurIPS 2019 | Track 3 Session 1

Recurrence without Recurrence: Stable Video Landmark Detection with Deep Equilibrium Models CVPR2023

Interpretable Comparison of Distributions and Models | NeurIPS 2019

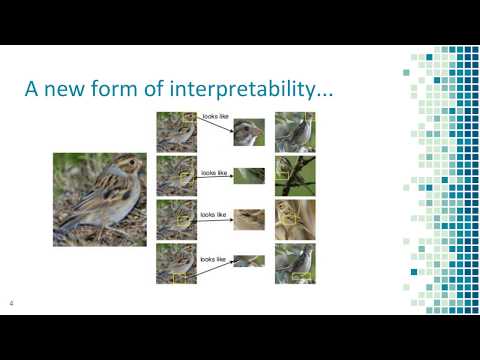

This Looks Like That: Deep Learning for Interpretable Image Recognition (NeurIPS 2019)

ODE2VAE: Deep generative second order ODEs with Bayesian neural networks
