How DPO Works and Why It's Better Than RLHF

This week we cover the "Direct Preference Optimization: Your Language Model is Secretly a Reward Model" paper from Stanford. The paper shows how to remove the need to train a tricky, separate reward model by optimizing the LLM directly on preference data with DPO instead.
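
To make the idea concrete, here is a minimal sketch of the per-example DPO objective as it is usually stated: the negative log-sigmoid of a scaled difference between the policy-versus-reference log-ratios of the preferred and dispreferred responses. The function name `dpo_loss`, its argument names, and the default `beta=0.1` are illustrative assumptions, not taken from the video or from any specific library, and the inputs are assumed to be already-summed sequence log-probabilities.

```python
import math


def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-example DPO loss from summed sequence log-probabilities.

    All names and the default beta are hypothetical, for illustration only.
    """
    # How much more (in log space) the policy likes each response
    # than the frozen reference model does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp

    # Implicit reward margin between preferred and dispreferred responses.
    margin = beta * (chosen_logratio - rejected_logratio)

    # -log(sigmoid(margin)) computed in a numerically stable way.
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# When the policy equals the reference, the margin is zero.
baseline = dpo_loss(-12.0, -15.0, -12.0, -15.0)
# When the policy has shifted probability toward the preferred response,
# the loss drops below the baseline.
improved = dpo_loss(-10.0, -17.0, -12.0, -15.0)
```

No separate reward model appears anywhere in this computation: the only inputs are log-probabilities from the model being trained and from a frozen reference copy, which is the sense in which the reward-model training step is removed.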

Training Language Models to Follow Instructions 📖

Direct Preference Optimization: Your Language Model is Secretly a Reward Model | DPO paper explained

Reinforcement Learning from Human Feedback (RLHF) & Direct Preference Optimization (DPO) Explain...

Data Protection Officer's (#DPO) Roles & Responsibilities in An Organizations

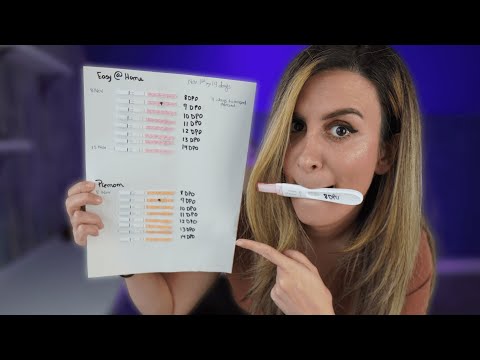

Pregnancy Test Line Progression | Positive at 8 DPO- 14 DPO | Cheapest Early Detection Tests

36.5 Understanding how to calculate Days DSO, DPO, and DIO

TWO WEEK WAIT SYMPTOMS | How I Knew I Was Pregnant

Are early pregnancy symptoms possible before 10dpo?

Direct Preference Optimization (DPO) in AI

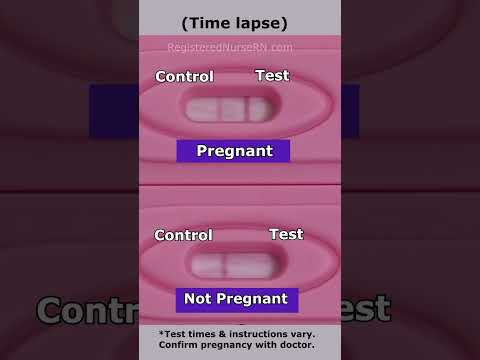

Positive Pregnancy TEST vs Negative in 30 SECONDS Time Lapse #shorts

Working at DPO International

What Data Protection Officer (DPO) Training and Certification are available?

Direct Preference Optimization (DPO): How It Works and How It Topped an LLM Eval Leaderboard

3 TIPS FOR GETTING PREGNANT ‣‣ how i got pregnant

Direct Preference Optimization (DPO): Your Language Model is Secretly a Reward Model Explained

What is DPO?

Direct Public Offering (DPO): Definition, How It Works, Examples

Implantation and Early Pregnancy Symptoms: How Early Can You Take a Pregnancy Test?

TWO WEEK WAIT SYMPTOMS // How I Knew I Was Pregnant - 1-14 DPO

Experience working with a Data Protection Office (DPO)

How-to Sign Up Instantly with DPO Pay

DPO Debate: Is RL needed for RLHF?

Difference Between RLHF and DPO in Simple Words

Direct Preference Optimization (DPO) explained: Bradley-Terry model, log probabilities, math
