AI/ML DC Design - Part 2

The 0x2 Nerds continue with Petr Lapukov for part 2 of their discussion on AI/ML data center design.

AI/ML DC Design - Part 2

AI/ML DC Design - Part 4

AI/ML Data Center Design - Part 1

Next-Generation Data Center Design | Alan Duong

Artificial intelligence

Keysight ADS 2025 Updates: AI/ML, 6G, Load Pull

+--400Vdc Rack Power System for ML AI Application

Mechanical Engineering Class at IIT BHU 🔥 | ED | #iit #iitbhu #shorts #viral #jee #mechanical

Amazing arduino project | Check description to get free money.

Arduino project how to make a laser electronic alarm, an amazing invention DIY

Altavalve: Transcatheter Mitral Valve Replacement (TMVR) #shorts #medical #animation

Kickstart AI in Your Data Center with Cisco Validated Designs

TRINITY BUILD vs CRITICAL BUILD Dyrroth

Vehicle Accident Control Project #science #tech #project #hack

Automatic sorting conveyor belt - Graduation project 2017 - Mechatronics | Egypt

NEWYES Calculator VS Casio calculator

IIT Bombay Lecture Hall | IIT Bombay Motivation | #shorts #ytshorts #iit

Gate Smashers hai na?? Agree🤝 #shorts #gatesmashers#youtubeshorts #trending #viral

manually writing data to a HDD...kinda #shorts

DOCTOR vs. NURSE: $ OVER 5 YEARS #shorts

Swedish Submachine Gun (SMG) | Carl Gustaf M/45 | How It Works

The Only Way You Should Add Blood To LEGO Minifigures #shorts

So you use Safari on your Mac...

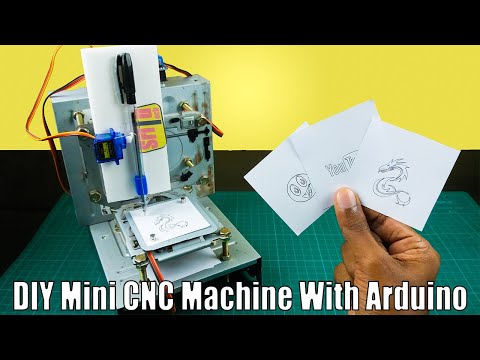

DIY mini Arduino CNC drawing machine
