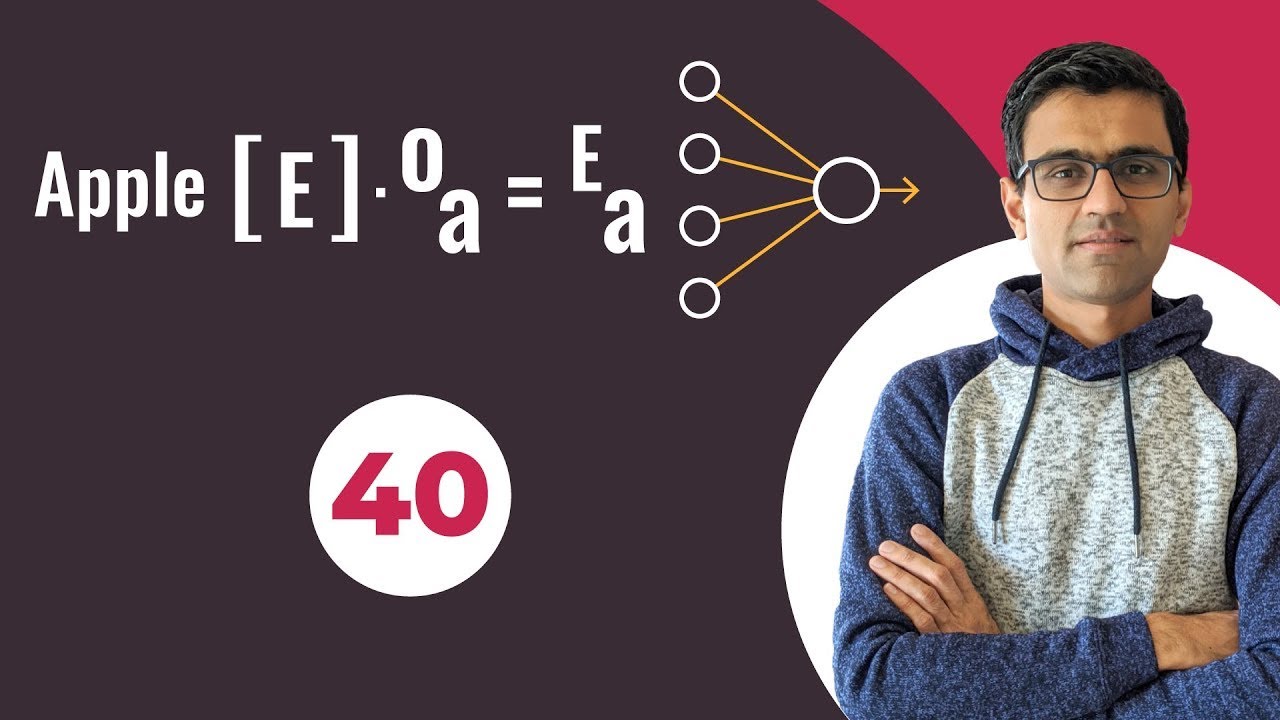

Word embedding using keras embedding layer | Deep Learning Tutorial 40 (Tensorflow, Keras & Python)

In this video we discuss how word embeddings are computed. There are two techniques for this: (1) supervised learning and (2) self-supervised learning methods such as word2vec and GloVe. This tutorial covers the first technique, supervised learning. We also write code for food review classification and see how word embeddings are learned while solving that problem.
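To make the supervised approach concrete, here is a minimal NumPy sketch of what an embedding layer does when it is trained as part of a classifier. It is not the Keras code from the video: the toy reviews, the embedding size, the learning rate, and every variable name are assumptions chosen for illustration. The idea is that the embedding table is just a trainable matrix with one row per word, and its rows are updated by the same gradient descent that trains the review classifier.

```python
import numpy as np

# Hypothetical toy data (an assumption, not taken from the video):
# label 1 = positive review, 0 = negative review.
reviews = ["nice food", "amazing restaurant", "too good", "just loved it",
           "horrible food", "never go there", "poor service", "poor quality"]
labels = np.array([1, 1, 1, 1, 0, 0, 0, 0], dtype=float)

# Map each word to an integer id; id 0 is reserved for padding.
words = sorted({w for r in reviews for w in r.split()})
vocab = {w: i + 1 for i, w in enumerate(words)}
max_len = max(len(r.split()) for r in reviews)

def encode(text):
    """Turn a review into a fixed-length list of word ids, padded with 0."""
    ids = [vocab[w] for w in text.split()]
    return ids + [0] * (max_len - len(ids))

X = np.array([encode(r) for r in reviews])  # shape: (n_reviews, max_len)

rng = np.random.default_rng(0)
emb_dim = 4  # assumed embedding size
E = rng.normal(scale=0.1, size=(len(vocab) + 1, emb_dim))  # embedding table
W = rng.normal(scale=0.1, size=max_len * emb_dim)          # dense weights
b = 0.0

def forward(X, E, W, b):
    """Look up embeddings, flatten them, and apply one sigmoid unit."""
    flat = E[X].reshape(len(X), -1)
    probs = 1.0 / (1.0 + np.exp(-(flat @ W + b)))
    return flat, probs

def bce(probs, y, eps=1e-9):
    """Binary cross-entropy loss."""
    return -np.mean(y * np.log(probs + eps) + (1 - y) * np.log(1 - probs + eps))

initial_loss = bce(forward(X, E, W, b)[1], labels)

lr = 0.5  # assumed learning rate
for _ in range(500):
    flat, probs = forward(X, E, W, b)
    grad_z = (probs - labels) / len(X)  # gradient of the loss w.r.t. the logits
    grad_flat = np.outer(grad_z, W).reshape(len(X), max_len, emb_dim)
    grad_E = np.zeros_like(E)
    np.add.at(grad_E, X, grad_flat)     # accumulate gradients per word id
    W -= lr * (flat.T @ grad_z)
    b -= lr * grad_z.sum()
    E -= lr * grad_E                    # the word vectors themselves are learned

final_loss = bce(forward(X, E, W, b)[1], labels)
print(f"loss before: {initial_loss:.3f}, after: {final_loss:.3f}")
print("learned vector for 'poor':", E[vocab["poor"]])
```

After training, each row of `E` is the learned vector for one word. In this sketch the padding row (id 0) is trained like any other row; masking it out would be a separate design choice.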

🔖 Hashtags 🔖

#WordEmbeddingUsingKeras #WordEmbedding #EmbeddingLayerKeras #WordEmbeddingdeeplearning #WordembeddingswithKeras #wordembeddinginpython #wordembeddingpython #wordembeddingtensorflow

#️⃣ Social Media #️⃣

❗❗ DISCLAIMER: All opinions expressed in this video are my own and not those of my employers.

Implementing Word Embedding Using Keras- NLP | Deep Learning

Word Embedding - Embedding using Word2vec library and Keras Embedding Method

What are Embedding Layers in Keras (11.5)

Word embeddings with *KERAS* Embedding layer in Python [NEW🔴]

Embedding Layer Keras | Embedding as a Layer | Word Embedding

244 - What are embedding layers in keras?

Training Your Own Word Embeddings With Keras

Python Word Embedding using Word2vec and keras|How to use word embedding in python

Word Embedding with Keras: A RStudio Tutorial

livestream using keras' embedding layer for first time

Word Embeddings || Embedding Layers || Quick Explained

Word Embedding and Word2Vec, Clearly Explained!!!

Word Embedding in Keras

#Python | Word Embedding & Embedding Layer | #WordEmbedding #DeepLearning #Tensorflow #Keras

What is Word2Vec? A Simple Explanation | Deep Learning Tutorial 41 (Tensorflow, Keras & Python)

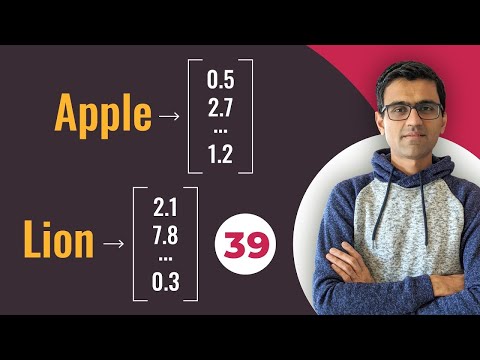

Converting words to numbers, Word Embeddings | Deep Learning Tutorial 39 (Tensorflow & Python)

A Complete Overview of Word Embeddings

Encoding and embedding using Keras in Python for NLP

Tensorflow 2.0 Tutorial - What is an Embedding Layer? Text Classification P2

NLP Tutorial 18 | word2vec Word Embedding with SpaCy

TRAIN WORD EMBEDDINGS ON OWN DATASET. TENSORFLOW EMBEDDING. W2VEC MODEL FROM SCRATCH. NLP PRACTICE

Contextual word embeddings in spaCy

Vectoring Words (Word Embeddings) - Computerphile
