Implementing Word Embedding Using Keras- NLP | Deep Learning

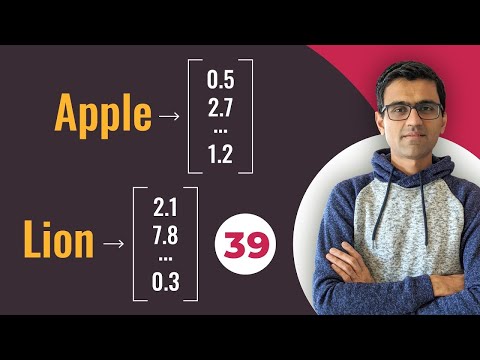

Word embeddings provide a dense representation of words and their relative meanings. They are an improvement over the sparse representations used in simpler bag-of-words models. Word embeddings can be learned from text data and reused across projects. They can also be learned as part of fitting a neural network on text data.
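As a rough illustration of learning embeddings while fitting a neural network, here is a minimal sketch using the Keras `Embedding` layer. The toy corpus, the labels, the hand-built `vocab` map, and the sizes `max_len` and `embedding_dim` are all assumptions made for this example; they are not taken from the video.

```python
import numpy as np
from tensorflow import keras
from tensorflow.keras import layers

# Toy corpus and labels (1 = positive, 0 = negative); purely illustrative.
docs = ["good movie", "great film", "nice work",
        "bad movie", "poor film", "terrible work"]
labels = np.array([1, 1, 1, 0, 0, 0], dtype="float32")

# Map each word to an integer index; index 0 is reserved for padding.
vocab = {}
for doc in docs:
    for word in doc.split():
        vocab.setdefault(word, len(vocab) + 1)
vocab_size = len(vocab) + 1  # +1 for the padding index

# Encode each document as a fixed-length, zero-padded sequence of indices.
max_len = 4  # assumed maximum document length
encoded = np.zeros((len(docs), max_len), dtype="int32")
for i, doc in enumerate(docs):
    ids = [vocab[w] for w in doc.split()][:max_len]
    encoded[i, :len(ids)] = ids

# The Embedding layer maps each integer index to a dense, trainable vector.
embedding_dim = 8  # assumed embedding size
model = keras.Sequential([
    layers.Embedding(input_dim=vocab_size, output_dim=embedding_dim),
    layers.GlobalAveragePooling1D(),  # average the word vectors per document
    layers.Dense(1, activation="sigmoid"),
])
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.fit(encoded, labels, epochs=20, verbose=0)

# The learned embedding matrix has one row per vocabulary index.
embedding_matrix = model.layers[0].get_weights()[0]
print(embedding_matrix.shape)  # (vocab_size, embedding_dim)
```

Each row of `embedding_matrix` is the dense vector for one word index, in contrast to a bag-of-words vector, which would need one mostly-zero entry per vocabulary word. A matrix learned this way could in principle be saved and loaded into another model's `Embedding` layer, which is one way embeddings get reused across projects.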

Please join my channel as a member to get additional benefits such as Data Science materials, members-only live streams, and more.

Please also subscribe to my other channel.

Connect with me here:
