filmov

tv

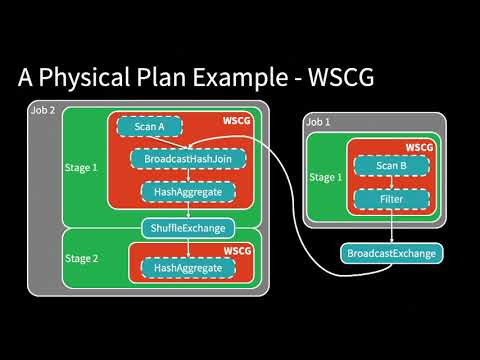

An Adaptive Execution Engine For Apache Spark SQL - Carson Wang

"Catalyst is an excellent optimizer in Spark SQL that provides an open interface for rule-based optimization in the planning stage. However, static (rule-based) optimization does not consider the data distribution at runtime. A technology called Adaptive Execution has been available since Spark 2.0 and aims to cover this gap, but it is still at an early stage. We enhanced the existing Adaptive Execution feature and focused on adjusting the execution plan at runtime according to the intermediate outputs of different stages: setting partition numbers for joins and aggregations, avoiding unnecessary data shuffling and disk I/O, handling data skew, and even optimizing the join order as a CBO would. In our benchmark comparison experiments, this feature saves considerable manual effort in tuning parameters such as the number of shuffle partitions, a process that is error-prone and misleading. In this talk, we will present the new adaptive execution framework, task scheduling, the failover retry mechanism, runtime plan switching, and more. Finally, we will share our experience of benchmarking TPCx-BB at 100 to 300 TB scale on a bare-metal Spark cluster with hundreds of nodes.

Session hashtag: EUdev4"
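The abstract mentions setting partition numbers at runtime from the intermediate outputs of a stage. As a rough illustration of that idea only, and not the implementation presented in the talk, the sketch below greedily merges contiguous shuffle partitions until a target size is reached. The function name `coalesce_partitions`, the `target_bytes` parameter, and the sample sizes are all hypothetical.

```python
from typing import List, Tuple


def coalesce_partitions(sizes: List[int], target_bytes: int) -> List[Tuple[int, int]]:
    """Greedily merge contiguous shuffle partitions (hypothetical helper).

    `sizes` holds the observed byte size of each post-shuffle partition.
    Returns half-open (start, end) index ranges; each range would be
    read by a single reduce task.
    """
    if target_bytes <= 0:
        raise ValueError("target_bytes must be positive")

    ranges: List[Tuple[int, int]] = []
    start = 0      # first partition index of the group being built
    current = 0    # accumulated bytes in the group being built

    for i, size in enumerate(sizes):
        # Close the group if adding this partition would overshoot the target.
        # A group always keeps at least one partition, even an oversized one.
        if i > start and current + size > target_bytes:
            ranges.append((start, i))
            start = i
            current = 0
        current += size

    # Emit the final, possibly undersized, group.
    if start < len(sizes):
        ranges.append((start, len(sizes)))
    return ranges


# Made-up sizes: three small partitions, one large, two tiny.
example = coalesce_partitions([10, 20, 30, 100, 5, 5], target_bytes=64)
```

With these made-up sizes, six partitions collapse into three reduce tasks; the oversized partition stays alone, which is the case a separate skew-handling step would need to address.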

About: Databricks provides a unified data analytics platform, powered by Apache Spark™, that accelerates innovation by unifying data science, engineering and business.
