filmov

tv

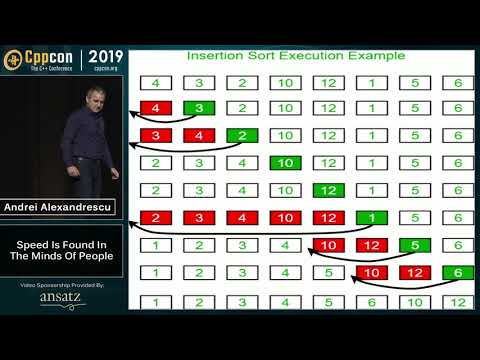

Sorting Algorithms: Speed Is Found In The Minds of People - Andrei Alexandrescu - CppCon 2019

In all likelihood, sorting is one of the most researched classes of algorithms. It is a fundamental task in Computer Science, both on its own and as a step in other algorithms. Efficient algorithms for sorting and searching are now taught in core undergraduate classes. Are they at their best, or is there more blood to squeeze from that stone? This talk will explore a few lesser-known – but more allegro! – variants of classic sorting algorithms. And as they say, the road matters more than the destination. Along the way, we'll encounter many wondrous surprises and we'll learn how to cope with the puzzling behavior of modern complex architectures.

—

Andrei Alexandrescu

Andrei Alexandrescu is a researcher, software engineer, and author. He wrote three best-selling books on programming (Modern C++ Design, C++ Coding Standards, and The D Programming Language) and numerous articles and papers on wide-ranging topics from programming to language design to Machine Learning to Natural Language Processing. Andrei holds a PhD in Computer Science from the University of Washington and a BSc in Electrical Engineering from University "Politehnica" Bucharest. He is the Vice President of the D Language Foundation.
