filmov

tv

Apache Spark Internals: RDDs, Pipelining, Narrow & Wide Dependencies

Показать описание

In this video we'll understand Apache Spark's most fundamental abstraction layer: RDDs. Understanding this is essential for writing performant Spark code and comprehending what's going on during an execution.

00:00 Introduction

01:11 Traits of RDDs

04:34 Code Interface of RDDs

06:44 Understanding transformations

08:20 The DAG - directed acyclic graph

11:38 Types of dependencies

15:26 Optimization: Pipelining

17:47 Implementation of transformations

19:58 Summary

00:00 Introduction

01:11 Traits of RDDs

04:34 Code Interface of RDDs

06:44 Understanding transformations

08:20 The DAG - directed acyclic graph

11:38 Types of dependencies

15:26 Optimization: Pipelining

17:47 Implementation of transformations

19:58 Summary

Apache Spark Internals: RDDs, Pipelining, Narrow & Wide Dependencies

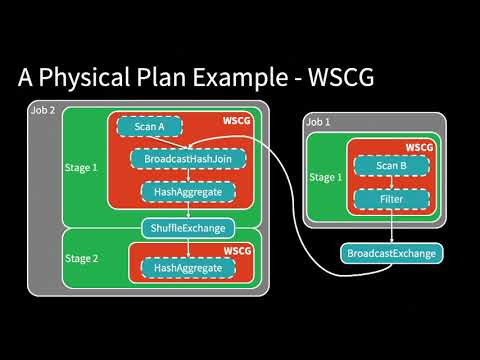

Apache Spark Internals: Understanding Physical Planning (Stages, Tasks & Pipelining)

Learn Apache Spark in 10 Minutes | Step by Step Guide

DataXDay - EN -The internals of query execution in Spark SQL

Introduction to AmpLab Spark Internals

Spark Internals and Architecture in Azure Databricks

Apache Spark Internals: Task Scheduling - Execution of a Physical Plan

A Deep Dive into Query Execution Engine of Spark SQL - Maryann Xue

Lessons Learned Developing and Managing Massive 300TB+ Apache Spark Pipelines

RDDs, DataFrames and Datasets in Apache Spark - NE Scala 2016

Apache Spark Internals - The Internals Of Apache Spark Execution

A Tale of Three Apache Spark APIs: RDDs, DataFrames, and Datasets - Jules Damji

Building a Versatile Analytics Pipeline on Top of Apache Spark - Mikhail Chernetsov

Apache Spark RDD introduction, narrow dependency, wide dependency, Pipe line concepts introduction

A Deeper Understanding of Spark Internals - Aaron Davidson (Databricks)

Building a Unified 'Big Data' Pipeline in Apache Spark by Aaron Davidson at ScalaMatsuri20...

Internals of Speeding up PySpark with Arrow - Ruben Berenguel (Consultant)

From Pipelines to Refineries: Building Complex Data Applications with Apache Spark - Tim Hunter

Tuning and Debugging Apache Spark

Apache Spark as a Platform for Powerful Custom Analytics Data Pipeline: Talk by Mikhail Chernetsov

Demystifying DataFrame and Dataset - Dr. Kazuaki Ishizaki

Building Machine Learning Algorithms on Apache Spark - William Benton

Extending Spark Machine Learning Beyond Linear Regression by Holden Karau

Building a Unified Data Pipeline with Apache Spark and XGBoost with Nan Zhu

Комментарии

0:21:14

0:21:14

0:07:28

0:07:28

0:10:47

0:10:47

0:53:39

0:53:39

1:14:12

1:14:12

0:13:05

0:13:05

0:09:44

0:09:44

0:39:32

0:39:32

0:23:39

0:23:39

0:48:05

0:48:05

0:10:17

0:10:17

0:31:19

0:31:19

0:31:00

0:31:00

0:40:49

0:40:49

0:44:03

0:44:03

0:38:31

0:38:31

0:32:27

0:32:27

0:29:27

0:29:27

0:47:14

0:47:14

0:49:38

0:49:38

0:25:42

0:25:42

0:27:58

0:27:58

0:32:04

0:32:04

0:29:56

0:29:56