Apache Spark Internals: Understanding Physical Planning (Stages, Tasks & Pipelining)


Let's explore how a logical plan is transformed into a physical plan in Apache Spark. The logical plan consists of RDDs, Dependencies and Partitions - it's our DAG. To schedule and execute it on a cluster, we need to transform it into a physical plan made up of Stages and Tasks. The Spark scheduler knows how to schedule tasks on our workers (much as we saw in MapReduce).
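To make the idea concrete, here is a minimal toy sketch in plain Python. It is not Spark's actual scheduler code. It assumes a simple lineage in which each dependency is labeled either "narrow" or "shuffle", and the names `ToyRDD` and `build_stages` are hypothetical, made up for this illustration. RDDs connected by narrow dependencies are pipelined into one stage, a shuffle dependency starts a new stage, and each stage gets one task per partition of its last RDD.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRDD:
    """Hypothetical stand-in for an RDD: a name, a partition count, and parents."""
    name: str
    num_partitions: int
    # Each dependency is a (parent, kind) pair; kind is "narrow" or "shuffle".
    deps: list = field(default_factory=list)


def build_stages(final_rdd):
    """Split a toy lineage into stages, returned in execution order.

    Assumes a tree-shaped lineage (no RDD shared by two children).
    """
    stages = []

    def new_stage(last_rdd):
        pipelined = []        # RDDs that run back to back inside one task
        shuffle_parents = []  # RDDs that must be fully computed in an earlier stage
        stack = [last_rdd]
        while stack:
            rdd = stack.pop()
            pipelined.append(rdd.name)
            for parent, kind in rdd.deps:
                if kind == "narrow":
                    stack.append(parent)            # same stage: pipeline it
                else:
                    shuffle_parents.append(parent)  # stage boundary
        # Parent stages have to run before this one.
        for parent in shuffle_parents:
            new_stage(parent)
        stages.append({
            "rdds": list(reversed(pipelined)),
            "tasks": last_rdd.num_partitions,  # one task per partition
        })

    new_stage(final_rdd)
    return stages


# A word-count style lineage: three narrow steps, one shuffle, one more narrow step.
lines = ToyRDD("textFile", 4)
words = ToyRDD("flatMap", 4, [(lines, "narrow")])
pairs = ToyRDD("map", 4, [(words, "narrow")])
counts = ToyRDD("reduceByKey", 2, [(pairs, "shuffle")])
frequent = ToyRDD("filter", 2, [(counts, "narrow")])

stages = build_stages(frequent)
for i, stage in enumerate(stages):
    print(f"Stage {i}: {' -> '.join(stage['rdds'])} ({stage['tasks']} tasks)")
```

In this toy lineage the sketch yields two stages: `textFile -> flatMap -> map` with 4 tasks, followed by `reduceByKey -> filter` with 2 tasks. The shuffle is the only point where a stage boundary appears.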

Let's go on a journey and explore how Apache Spark works internally.

It will help us write much better code.

00:00 Intro

00:36 Recap: Logical plan, DAG, Dependencies

01:39 Transforming the Logical Plan into a Physical Plan

03:26 Pipelining: The key optimization

05:22 Shuffles & The Relation to MapReduce

06:52 Summary & Outro


Apache Spark Internals: Task Scheduling - Execution of a Physical Plan

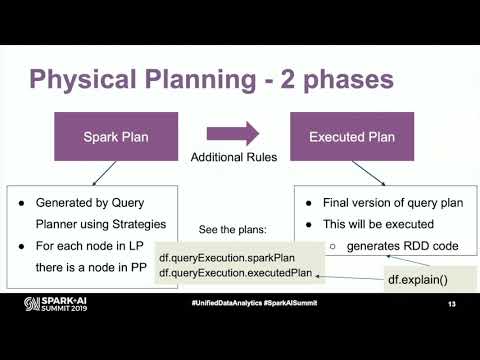

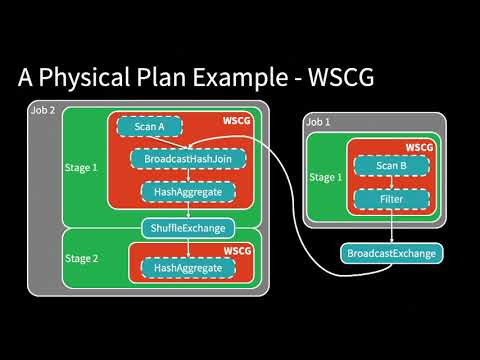

Physical Plans in Spark SQL - David Vrba (Socialbakers)

Apache Spark - Spark Internals | Spark Execution Plan With Example | Spark Tutorial

Spark Logical & Physical Plan

Apache Spark Architecture | Spark Cluster Architecture Explained | Spark Training | Edureka

Master Reading Spark Query Plans

Apache Spark Internals: RDDs, Pipelining, Narrow & Wide Dependencies

Apache Spark Architecture - EXPLAINED!

04 Spark DataFrames & Execution Plans

Tuning and Debugging Apache Spark

A Deep Dive into Query Execution Engine of Spark SQL - Maryann Xue

The Internals of Stateful Stream Processing in Spark Structured Streaming -Jacek Laskowski

DataXDay - EN -The internals of query execution in Spark SQL

Physical Plans in Spark SQL—continues - David Vrba (Socialbakers)

Spark Execution Model | Spark Tutorial | Interview Questions

Scaling TB's of data with Apache Spark & Scala DSL at Production- Chetan Khatri-FOSSASIA 20...

A Deep Dive into the Catalyst Optimizer (Herman van Hovell)

Understanding Spark Execution

Improving Apache Spark's Reliability with DataSourceV2 - Ryan Blue

Advancing Spark - Understanding the Spark UI

Spark Data Frame Internals | Map Reduce Vs Spark RDD vs Spark Dataframe | Look inside the Dataframe

Lessons from the Field:Applying Best Practices to Your Apache Spark Applications with Silvio Fiorito

Understanding the Working of Apache Spark's Catalyst Optimizer in Improving the Query Performan...
