How to implement PCA (Principal Component Analysis) from scratch with Python

Показать описание

In the 7th lesson of the Machine Learning from Scratch course, we will learn how to implement the PCA (Principal Component Analysis) algorithm.

Welcome to the Machine Learning from Scratch course by AssemblyAI.

Thanks to libraries like Scikit-learn, we can use most ML algorithms with a couple of lines of code. But knowing how these algorithms work under the hood is important, and implementing them hands-on is a great way to learn it.

And most of them are easier to implement than you'd think.

In this course, we will learn how to implement these 10 algorithms.

We will quickly go through how each algorithm works and then implement it in Python with the help of NumPy.
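As a rough illustration of what a from-scratch PCA in NumPy can look like, here is a minimal sketch. It assumes the common covariance-plus-eigendecomposition formulation: center the data, compute the feature covariance matrix, take its eigenvectors, keep the ones with the largest eigenvalues, and project onto them. It is not necessarily the exact code from the video; the class name `PCA` and the `fit`/`transform` method names are illustrative choices that mirror scikit-learn's naming, not its implementation.

```python
import numpy as np


class PCA:
    """Minimal PCA via eigendecomposition of the covariance matrix (illustrative sketch)."""

    def __init__(self, n_components):
        self.n_components = n_components
        self.components = None          # principal axes, shape (n_components, n_features)
        self.mean = None                # per-feature mean, shape (n_features,)
        self.explained_variance = None  # top eigenvalues, largest first

    def fit(self, X):
        # 1. Center the data so each feature has zero mean.
        self.mean = np.mean(X, axis=0)
        X_centered = X - self.mean

        # 2. Feature covariance matrix; rowvar=False treats columns as variables.
        cov = np.cov(X_centered, rowvar=False)

        # 3. Eigendecomposition. eigh is meant for symmetric matrices and returns
        #    eigenvalues in ascending order, with eigenvectors as columns.
        eigenvalues, eigenvectors = np.linalg.eigh(cov)

        # 4. Sort by decreasing eigenvalue (variance along that axis) and keep the top k.
        order = np.argsort(eigenvalues)[::-1]
        self.explained_variance = eigenvalues[order][: self.n_components]
        self.components = eigenvectors[:, order][:, : self.n_components].T
        return self

    def transform(self, X):
        # 5. Project the centered data onto the principal axes.
        return (X - self.mean) @ self.components.T


# Usage: 3 features, where the third is almost a linear function of the first.
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 3))
X[:, 2] = 2.0 * X[:, 0] + 0.1 * rng.normal(size=200)

pca = PCA(n_components=2).fit(X)
X_proj = pca.transform(X)
print(X_proj.shape)  # (200, 2)
```

Using `np.linalg.eigh` instead of `np.linalg.eig` is a deliberate choice here: the covariance matrix is symmetric, so `eigh` returns real eigenvalues and orthonormal eigenvectors. An SVD of the centered data would yield the same components, up to sign.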

#MachineLearning #DeepLearning

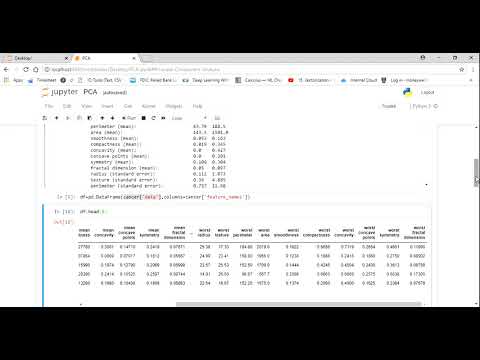

Machine Learning Tutorial Python - 19: Principal Component Analysis (PCA) with Python Code

StatQuest: PCA main ideas in only 5 minutes!!!

StatQuest: Principal Component Analysis (PCA), Step-by-Step

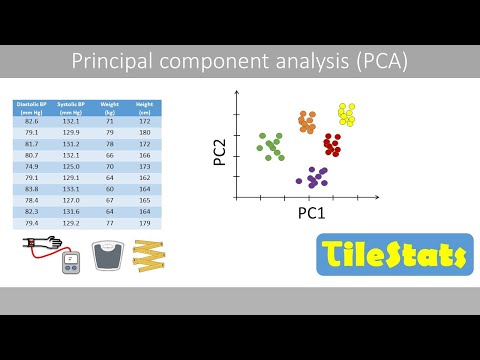

Principal Component Analysis (PCA)

Principal Component Analysis (PCA) Explained: Simplify Complex Data for Machine Learning

Principal Component Analysis (PCA) - easy and practical explanation

Principle Component Analysis (PCA) using sklearn and python

PCA (Principal Component Analysis) in Python - Machine Learning From Scratch 11 - Python Tutorial

Principal Component Analysis (PCA)

StatQuest: PCA in Python

Basics of PCA (Principal Component Analysis) : Data Science Concepts

Principal component analysis step by step | PCA explained step by step | PCA in statistics

When to Use PCA

Principal Component Analysis in R Programming | How to Apply PCA | Step-by-Step Tutorial & Examp...

Principal Component Analysis in Python | How to Apply PCA | Scree Plot, Biplot, Elbow & Kaisers ...

Principal Component Analysis (PCA) 1 [Python]

Python PCA Tutorial: Image Classification using Principal Component Analysis

PCA In Machine Learning | Principal Component Analysis | Machine Learning Tutorial | Simplilearn

PCA : the math - step-by-step with a simple example

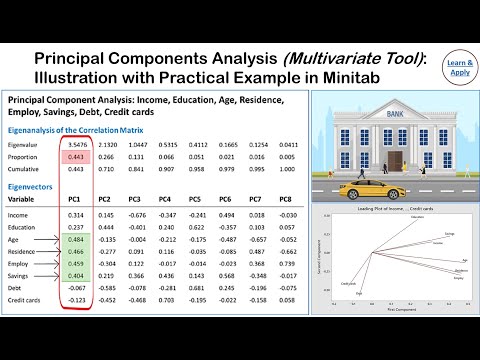

Principal Component Analysis (PCA): With Practical Example in Minitab

Principal Component Analysis (PCA) using Python (Scikit-learn)

Machine Learning in Python: Principal Component Analysis (PCA) for Handling High-Dimensional Data

PCA Indepth Geometric And Mathematical InDepth Intuition ML Algorithms
