Implementing Randomized Matrix Algorithms in Parallel and Distributed Environments, Michael Mahoney

Motivated by problems in large-scale data analysis, randomized algorithms for matrix problems such as regression and low-rank matrix approximation have been the focus of a great deal of attention in recent years. These algorithms exploit novel random sampling and random projection methods, and implementations of them have already proven superior to traditional state-of-the-art algorithms, as implemented in LAPACK and other high-quality scientific computing software, for moderately large problems stored in RAM on a single machine. Here, we describe the extension of these methods to computing high-precision solutions in parallel and distributed environments, which are more common in very large-scale data analysis applications.
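To make the random-projection idea concrete, the following is a minimal NumPy sketch of randomized low-rank approximation with a Gaussian range finder, the widely used scheme of Halko, Martinsson, and Tropp. It is an illustration, not code from this work: the helper name `randomized_low_rank`, the oversampling parameter `p`, and the fixed seed are hypothetical choices.

```python
import numpy as np


def randomized_low_rank(A, k, p=10, seed=0):
    """Rank-k approximation A ~= U @ diag(s) @ Vt via a Gaussian random projection.

    Hypothetical helper (not from the talk): k is the target rank and p is
    the number of extra random samples used to capture the range of A reliably.
    """
    rng = np.random.default_rng(seed)
    m, n = A.shape
    l = min(k + p, n)
    # Random projection: sample the range of A with an n x l Gaussian test matrix.
    Omega = rng.standard_normal((n, l))
    Y = A @ Omega
    # Orthonormal basis Q for the sampled range.
    Q, _ = np.linalg.qr(Y)
    # Project A onto that small subspace and take an exact SVD of the small matrix.
    B = Q.T @ A
    Ub, s, Vt = np.linalg.svd(B, full_matrices=False)
    U = Q @ Ub
    # Keep only the leading k singular triplets.
    return U[:, :k], s[:k], Vt[:k, :]


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    # A 500 x 200 matrix of exact rank 5.
    A = rng.standard_normal((500, 5)) @ rng.standard_normal((5, 200))
    U, s, Vt = randomized_low_rank(A, k=5)
    err = np.linalg.norm(A - U @ np.diag(s) @ Vt) / np.linalg.norm(A)
    print(f"relative error: {err:.2e}")
```

The sketch touches `A` in only two passes (forming `A @ Omega` and then `Q.T @ A`); everything else operates on matrices with only `k + p` columns, which is what makes this style of algorithm attractive when `A` is large.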

In particular, we consider both the Least Squares Approximation problem and the Least Absolute Deviation problem, and we develop and implement randomized algorithms that take advantage of modern computer architectures to achieve improved communication profiles. Our iterative least-squares solver, LSRN, is competitive with state-of-the-art implementations on moderately large problems; when coupled with the Chebyshev semi-iterative method, it scales well for solving large problems on clusters with high communication costs, such as an Amazon Elastic Compute Cloud cluster. Our iterative least-absolute-deviations solver is based on fast ellipsoidal rounding, random sampling, and interior-point cutting-plane methods, and we demonstrate significant improvements over traditional algorithms on MapReduce. This algorithm can also be extended to solve more general convex problems on MapReduce.
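The combination of random-projection preconditioning with the Chebyshev semi-iterative method can be sketched in NumPy as follows. This is a single-machine illustration under several assumptions, not the LSRN implementation: `A` is dense, strongly overdetermined, and of full column rank; the oversampling factor `gamma` and the safety margin `t` are illustrative values rather than the paper's settings; and all helper names are hypothetical. The sketch forms a Gaussian sketch `G @ A`, builds the right preconditioner `N = V @ diag(1/sigma)` from its SVD, and runs Chebyshev iteration on the preconditioned normal equations. The singular values of `A @ N` are the reciprocals of those of an `s`-by-`n` standard Gaussian matrix, so with high probability they lie between `1/(sqrt(s) + sqrt(n) + t)` and `1/(sqrt(s) - sqrt(n) - t)`; those are the bounds the iteration needs.

```python
import numpy as np


def lsrn_preconditioner(A, gamma=4.0, seed=0):
    """Right preconditioner N from a Gaussian sketch (hypothetical LSRN-style helper).

    gamma is an assumed oversampling factor: the sketch has s = ceil(gamma * n) rows.
    """
    rng = np.random.default_rng(seed)
    m, n = A.shape
    s = int(np.ceil(gamma * n))
    G = rng.standard_normal((s, m))           # Gaussian random projection
    _, sig, Vt = np.linalg.svd(G @ A, full_matrices=False)
    N = Vt.T / sig                            # N = V @ diag(1 / sigma)
    return N, s


def chebyshev_semi_iterative(M, b, sigma_lo, sigma_hi, iters):
    """Chebyshev iteration for min ||M y - b||_2 via the normal equations.

    Assumes sigma_lo <= every singular value of M <= sigma_hi, so the
    eigenvalues of M.T @ M lie in [sigma_lo**2, sigma_hi**2].
    """
    theta = (sigma_hi**2 + sigma_lo**2) / 2   # center of the eigenvalue interval
    delta = (sigma_hi**2 - sigma_lo**2) / 2   # half-width of the interval
    sigma1 = theta / delta
    y = np.zeros(M.shape[1])
    r = np.array(b, dtype=float)              # residual b - M @ y (y starts at 0)
    rho = 1.0 / sigma1
    step = (M.T @ r) / theta                  # first update direction
    for _ in range(iters):
        y += step
        r -= M @ step
        rho_next = 1.0 / (2.0 * sigma1 - rho)
        # Three-term Chebyshev recurrence: no inner products are needed.
        step = rho_next * rho * step + (2.0 * rho_next / delta) * (M.T @ r)
        rho = rho_next
    return y


def lsrn_cs_solve(A, b, gamma=4.0, t=3.0, tol=1e-12, seed=0):
    """Least squares via Gaussian-sketch preconditioning plus Chebyshev iteration."""
    N, s = lsrn_preconditioner(A, gamma, seed)
    n = A.shape[1]
    gap = np.sqrt(s) - np.sqrt(n) - t
    if gap <= 0:
        raise ValueError("sketch too small for the singular-value bound; increase gamma")
    # Assumed high-probability bounds on the singular values of A @ N.
    sigma_lo = 1.0 / (np.sqrt(s) + np.sqrt(n) + t)
    sigma_hi = 1.0 / gap
    kappa = sigma_hi / sigma_lo
    # The Chebyshev error bound shrinks like ((kappa - 1) / (kappa + 1)) ** k,
    # so the iteration count can be fixed in advance.
    iters = int(np.ceil(np.log(tol) / np.log((kappa - 1) / (kappa + 1))))
    y = chebyshev_semi_iterative(A @ N, b, sigma_lo, sigma_hi, iters)
    return N @ y


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Badly scaled columns make A ill-conditioned; A @ N stays well conditioned.
    A = rng.standard_normal((2000, 50)) * np.logspace(0, 6, 50)
    b = rng.standard_normal(2000)
    x = lsrn_cs_solve(A, b)
    x_ref = np.linalg.lstsq(A, b, rcond=None)[0]
    print("relative difference from lstsq:",
          np.linalg.norm(x - x_ref) / np.linalg.norm(x_ref))
```

The Chebyshev loop contains no inner products or norms, only products with `M` and `M.T`; in a distributed setting this means fewer synchronization points per iteration than a Krylov solver such as LSQR, at the price of needing the singular-value bounds up front. On a single machine, running `scipy.sparse.linalg.lsqr` on `A @ N` is a simpler alternative to the Chebyshev loop.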

In particular, we consider both the Least Squares Approx-imation problem and the Least Absolute Deviation prob-lem, and we develop and implement randomized algorithms that take advantage of modern computer architectures in order to achieve improved communication profiles. Our iterative least-squares solver, LSRN, is competitive with state-of-the-art implementations on moderately-large prob-lems; and, when coupled with the Chebyshev semi-iterative method, scales well for solving large problems on clusters that have high communication costs such as on an Amazon Elastic Compute Cloud cluster. Our iterative least-absolute-deviations solver is based on fast ellipsoidal rounding, ran-dom sampling, and interior-point cutting-plane methods; and we demonstrate significant improvements over tradi-tional algorithms on MapReduce. In addition, this algorithm can also be extended to solve more general convex problems on MapReduce.

0:30:05

0:30:05

0:59:25

0:59:25

1:51:05

1:51:05

0:34:57

0:34:57

0:13:00

0:13:00

1:21:52

1:21:52

0:33:41

0:33:41

1:12:33

1:12:33

0:45:58

0:45:58

1:30:03

1:30:03

0:29:56

0:29:56

0:08:01

0:08:01

0:00:24

0:00:24

1:39:38

1:39:38

1:31:37

1:31:37

0:55:46

0:55:46

0:52:24

0:52:24

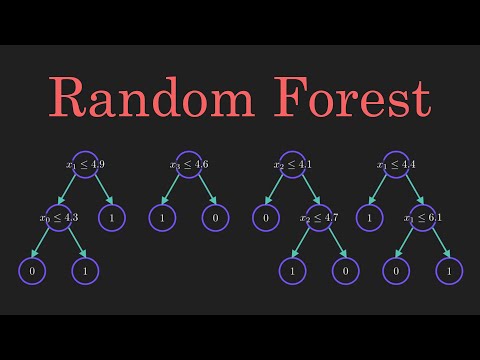

![[MXML-8-06] Random Forest](https://i.ytimg.com/vi/ps2QXPnPHVM/hqdefault.jpg) 0:15:29

0:15:29

0:23:15

0:23:15

0:00:39

0:00:39

0:18:11

0:18:11

0:13:43

0:13:43

0:33:26

0:33:26

![[MXML-8-04] Random Forest](https://i.ytimg.com/vi/d5rsEkMQ7ck/hqdefault.jpg) 0:15:42

0:15:42