
Advancing Spark - Setting up Databricks Unity Catalog Environments


Unity Catalog is a huge part of the Databricks platform. If you're currently using Databricks but don't have UC enabled, you're going to miss out on some pretty huge features in the future! But where do you start? Do you really need a Global AD Admin, and what do they have to do? How do you manage dev, test and prod if they all have to share the same metastore?

In this video, Simon takes two existing Databricks workspaces and builds out Unity Catalog from scratch: provisioning account console access, creating a metastore, allocating access for the managed identity, and locking down catalogs to their respective workspaces. Want to get started with UC? Check this video out!



Advancing Spark - Learning Databricks with DBDemos

Advancing Spark - Rethinking ETL with Databricks Autoloader

Advancing Spark - Getting started with Repos for Databricks

Advancing Spark - Managing Files with Unity Catalog Volumes

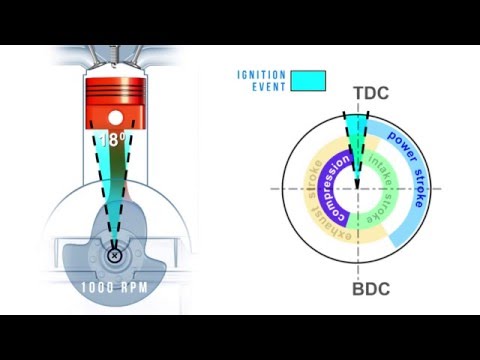

How Ignition Timing Works: Vacuum and Mechanical Advance Explained!

IGNITION TIMING SIMPLIFIED | The secrets of spark tuning revealed

Spark Timing & Dwell Control Training Module Trailer

Advanced Camera 1/16

Advancing Spark - Local Development with Databricks Connect V2

Advancing Spark - Introduction to Databricks SQL Analytics

Advancing Spark - Production-Grade Streaming in Databricks

Advancing Spark - External Tables with Unity Catalog

Advancing Spark - Azure Databricks News April 2023

Advancing Spark - Understanding the Spark UI

Gen 4 Timing Tuning, Dialing In The Spark Advance For Max Power!

Advancing Spark - Getting Started with Databricks AutoML

Will AI Replace Data Engineering? - Advancing Spark

Advancing Spark - Crazy Performance with Spark 3 Adaptive Query Execution

Cluster Configuration in Apache Spark | Thumb rule fo optimal performance #interview #question

Dynamic Databricks Workflows - Advancing Spark

Advancing Spark - Provisioning Databricks Users through SCIM

How To Set Timing Ignition Timing With A Distributor

Advancing Spark - Autoloader Resource Management
