filmov

tv

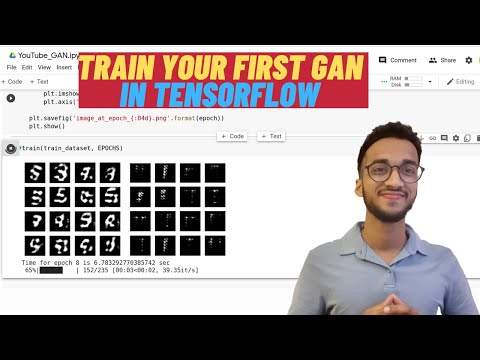

Building our first simple GAN

In this video we build a simple generative adversarial network based on fully connected layers and train it on the MNIST dataset. It's far from perfect, but it's a start and will lead us to implement more advanced and better architectures in upcoming videos.
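As a rough illustration of the kind of model described here, the following is a minimal sketch of a fully connected discriminator and generator together with a single training step. It assumes PyTorch, which this description does not name. The layer widths, the latent size `z_dim`, the learning rate, and the helper `train_step` are all illustrative assumptions rather than the values used in the video, and a random tensor stands in for a batch of flattened 28x28 MNIST images so that the snippet runs without downloading data.

```python
import torch
import torch.nn as nn


class Discriminator(nn.Module):
    """Fully connected net: flattened image -> probability that it is real."""

    def __init__(self, img_dim):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(img_dim, 128),  # hidden width is an assumption
            nn.LeakyReLU(0.1),
            nn.Linear(128, 1),
            nn.Sigmoid(),  # squash to (0, 1) so BCELoss can be applied
        )

    def forward(self, x):
        return self.net(x)


class Generator(nn.Module):
    """Fully connected net: latent noise vector -> flattened image."""

    def __init__(self, z_dim, img_dim):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(z_dim, 256),  # hidden width is an assumption
            nn.LeakyReLU(0.1),
            nn.Linear(256, img_dim),
            nn.Tanh(),  # outputs in [-1, 1], matching inputs scaled to that range
        )

    def forward(self, z):
        return self.net(z)


def train_step(disc, gen, opt_disc, opt_gen, real, z_dim):
    """One GAN update (hypothetical helper): discriminator first, then generator."""
    criterion = nn.BCELoss()
    noise = torch.randn(real.shape[0], z_dim)
    fake = gen(noise)

    # Discriminator: push real images toward label 1 and fakes toward label 0.
    # detach() keeps this loss from sending gradients into the generator.
    d_real = disc(real)
    d_fake = disc(fake.detach())
    loss_d = (
        criterion(d_real, torch.ones_like(d_real))
        + criterion(d_fake, torch.zeros_like(d_fake))
    ) / 2
    opt_disc.zero_grad()
    loss_d.backward()
    opt_disc.step()

    # Generator: try to make the updated discriminator label the fakes as real.
    output = disc(fake)
    loss_g = criterion(output, torch.ones_like(output))
    opt_gen.zero_grad()
    loss_g.backward()
    opt_gen.step()

    return loss_d.item(), loss_g.item()


# Illustrative hyperparameters (assumptions, not taken from the video).
z_dim, img_dim, batch_size, lr = 64, 28 * 28, 32, 3e-4

disc = Discriminator(img_dim)
gen = Generator(z_dim, img_dim)
opt_disc = torch.optim.Adam(disc.parameters(), lr=lr)
opt_gen = torch.optim.Adam(gen.parameters(), lr=lr)

# Stand-in for one batch of flattened MNIST images scaled to [-1, 1].
real = torch.rand(batch_size, img_dim) * 2 - 1

loss_d, loss_g = train_step(disc, gen, opt_disc, opt_gen, real, z_dim)
print(f"loss D: {loss_d:.4f}, loss G: {loss_g:.4f}")
```

In an actual run, `real` would come from a data loader over MNIST, with each batch flattened to shape `(batch_size, 784)`, and `train_step` would be called once per batch over several epochs.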

❤️ Support the channel ❤️

Paid Courses I recommend for learning (affiliate links, no extra cost for you):

✨ Free Resources that are great:

💻 My Deep Learning Setup and Recording Setup:

GitHub Repository:

✅ One-Time Donations:

▶️ You Can Connect with me on:

OUTLINE:

0:00 - Introduction

0:29 - Building Discriminator

2:14 - Building Generator

4:36 - Hyperparameters, initializations, and preprocessing

10:14 - Setup training of GANs

22:09 - Training and evaluation
