Motion capture using 3D pose estimation with Unity and mediapipe

It captures human motion from a video file and overlays it on a chosen 3D model in real time (30 fps or more). This demo was recorded with Unity Recorder.

============================

Captures human motion from video and overlays it on 3D models. The demo was built in Unity using the MediaPipeUnityPlugin plugin.
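The description does not say how the estimated pose is transferred onto the 3D model. As a rough illustration only, here is a minimal Python sketch of one common approach: take two 3D landmarks (for example shoulder and elbow), compute the bone direction, and find the shortest-arc rotation that turns the model's rest-pose bone direction onto it. The function names, the rest-pose axis, and the landmark coordinates are all hypothetical and not taken from the demo, which runs in Unity rather than Python.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Scale a vector to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    if n == 0.0:
        raise ValueError("zero-length vector")
    return tuple(c / n for c in v)


def bone_direction(parent, child):
    """Unit vector pointing from the parent landmark to the child landmark."""
    return normalize(tuple(c - p for p, c in zip(parent, child)))


def rotation_between(a, b):
    """Shortest-arc unit quaternion (w, x, y, z) rotating unit vector a onto b."""
    dot = sum(x * y for x, y in zip(a, b))
    if dot < -1.0 + 1e-9:
        # Vectors are opposite: rotate 180 degrees about any axis perpendicular to a.
        helper = (0.0, 1.0, 0.0) if abs(a[0]) >= 0.9 else (1.0, 0.0, 0.0)
        axis = normalize(cross(a, helper))
        return (0.0, axis[0], axis[1], axis[2])
    cx, cy, cz = cross(a, b)
    return normalize((1.0 + dot, cx, cy, cz))


def rotate(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, u = q[0], q[1:]
    uv = cross(u, v)
    uuv = cross(u, uv)
    return tuple(vi + 2.0 * (w * a + b) for vi, a, b in zip(v, uv, uuv))


# Hypothetical values: the model's upper arm points along +X in its rest pose,
# and the estimated landmarks place the elbow straight below the shoulder.
rest_direction = (1.0, 0.0, 0.0)
shoulder = (0.0, 0.0, 0.0)
elbow = (0.0, -0.3, 0.0)

target = bone_direction(shoulder, elbow)
q = rotation_between(rest_direction, target)
posed = rotate(q, rest_direction)  # should coincide with `target`
```

In an engine such as Unity, a rotation like `q` would typically be assigned to the corresponding bone every frame. A real pipeline would also need to handle twist around the bone axis, coordinate-system differences, and smoothing of noisy landmarks, none of which this sketch covers.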

MoCap using Mediapipe

3D Pose Estimation Demo

'Baddie-IVE' Motion Capture Animation Workflow

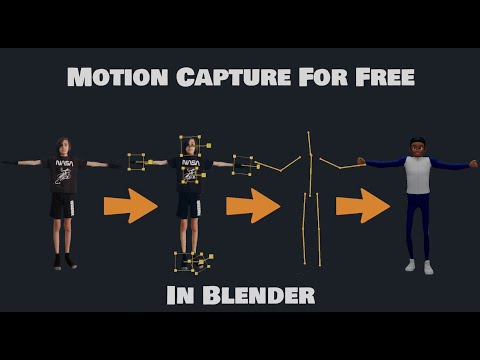

How to Animate in Blender - FREE Mocap Ai Tools!

Why motion capture is harder than it looks

Real-time 3D pose estimation with Unity - Use USB camera

How To Do Motion Capture in Blender for Free

[MMD] QuickMagic (AI Motion Capture) [Test]

Character animation for impatient people - Blender Tutorial

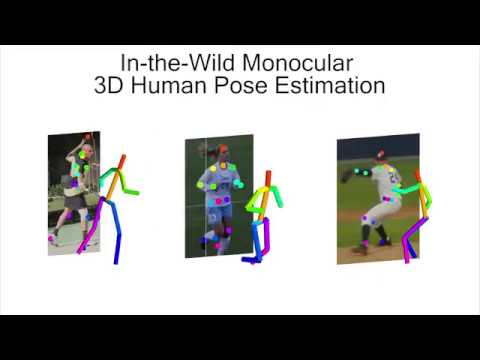

Monocular 3D Human Pose Estimation In The Wild Using Improved CNN Supervision - 3DV2017

Free AI MoCap that DOESN'T SUCK (Blender Tutorial)

FREE Motion Capture for EVERYONE! (No suit needed)

Free AI Mocap from Video! Plask & Blender Tutorial

3D Motion Capture using Normal Webcam | Computer Vision OpenCV

Free & Easy Mocap with Rokoko Video & Blender Tutorial

Only web Cam. Real time motion capture. - 3D pose estimation

Realtime Full-Body Tracking via Webcam! | Google MediaPipe Pose, Unity, Open Source

[ECCV 2022] HULC: 3D HUman Motion Capture with Pose Manifold Sampling and Dense Contact Guidance

Real-time 3D pose estimation for iOS with Unity.

8 Best Motion Capture Software

ANIMATE IMAGES with a sample video - Free - AI Motion Capture - Viggle AI Tutorial

THIS IS HUGE! Everyone can do MOTION TRACKING now!

Real-Time 3D Pose Detection & Pose Classification | Mediapipe | OpenCV | Python
