
Distributed Caching


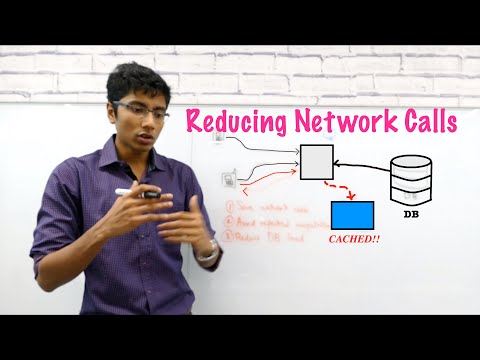

Distributed caching is a technique that stores frequently accessed data in memory across multiple nodes or servers in a distributed system. Instead of fetching data from a remote data source on every request, an application can retrieve it quickly from the in-memory distributed cache, which significantly reduces latency.
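
As a minimal sketch of the read path described above, assuming a cache-aside pattern (the application checks the cache first and loads from the data source only on a miss), the Python below spreads keys over several in-memory nodes by hashing each key. `InMemoryNode`, `DistributedCache`, and `slow_database_lookup` are hypothetical names; real cache nodes would be separate servers reached over the network, not dicts inside one process.

```python
import hashlib
import time
from typing import Callable, Dict, List


class InMemoryNode:
    """One cache server, modeled here as a plain in-process dict (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.store: Dict[str, str] = {}


class DistributedCache:
    """Cache-aside reads over several nodes; each key lives on one node chosen by hash."""

    def __init__(self, nodes: List[InMemoryNode], load_from_source: Callable[[str], str]) -> None:
        self.nodes = nodes
        self.load_from_source = load_from_source
        self.hits = 0
        self.misses = 0

    def _node_for(self, key: str) -> InMemoryNode:
        # Stable hash (not Python's per-process randomized hash()) so every client
        # picks the same node for the same key.
        digest = hashlib.sha256(key.encode()).digest()
        return self.nodes[int.from_bytes(digest[:8], "big") % len(self.nodes)]

    def get(self, key: str) -> str:
        node = self._node_for(key)
        value = node.store.get(key)
        if value is not None:
            self.hits += 1
            return value
        # Miss: fetch from the slow source, then populate the cache for later reads.
        self.misses += 1
        value = self.load_from_source(key)
        node.store[key] = value
        return value


def slow_database_lookup(key: str) -> str:
    """Stand-in for a remote database query (hypothetical)."""
    time.sleep(0.05)
    return f"profile-for-{key}"


if __name__ == "__main__":
    cache = DistributedCache([InMemoryNode(f"node-{i}") for i in range(3)], slow_database_lookup)
    for _ in range(2):
        start = time.perf_counter()
        cache.get("user:42")
        print(f"{(time.perf_counter() - start) * 1000:.1f} ms")
    print("hits:", cache.hits, "misses:", cache.misses)
```

The first `get` pays the simulated database delay; the second is served from whichever node owns `user:42`. Simple modulo placement like `_node_for` remaps most keys whenever the node count changes, which is one reason production systems often use consistent hashing instead.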

Some of the key benefits of using distributed caching include:

1- Improved performance - Caching reduces the need to fetch data from remote sources, leading to lower latency and faster response times. Frequently accessed data is readily available in the cache, which avoids repeating expensive computations or database queries.

2- Enhanced scalability - A distributed cache can scale horizontally: more cache nodes can be added as the load increases, accommodating a growing user base or growing datasets.

3- Increased reliability - Caching can improve system reliability by reducing dependence on external services. If the data source or backend systems experience issues, data that is already in the cache can still be served, so users keep access to it (although it may be stale). Additionally, a distributed cache that replicates data across nodes provides fault tolerance, since it can continue serving data even if individual cache nodes fail.
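
The scalability and fault-tolerance points above depend on how keys are placed on cache nodes. One common technique (not necessarily the one used in the video) is consistent hashing with replication. The hypothetical `HashRing` below assigns each key to a primary node plus `replicas - 1` backups; adding a node should move only a fraction of the keys, and removing a failed node promotes each affected key's backup to primary. The sketch computes placement only and assumes the client writes each value to every node that `nodes_for` returns.

```python
import bisect
import hashlib
from typing import Dict, List, Set


def _hash(value: str) -> int:
    # Stable 64-bit position on the ring derived from SHA-256.
    return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes; each key maps to `replicas` distinct nodes."""

    def __init__(self, vnodes_per_node: int = 100, replicas: int = 2) -> None:
        self.vnodes_per_node = vnodes_per_node
        self.replicas = replicas
        self._positions: List[int] = []   # sorted ring positions
        self._owner: Dict[int, str] = {}  # ring position -> node name
        self.nodes: Set[str] = set()

    def add_node(self, node: str) -> None:
        # Each physical node gets many virtual positions to even out the load.
        self.nodes.add(node)
        for i in range(self.vnodes_per_node):
            pos = _hash(f"{node}#{i}")
            self._owner[pos] = node
            bisect.insort(self._positions, pos)

    def remove_node(self, node: str) -> None:
        # Models a node leaving (for example, a failure): its positions disappear.
        self.nodes.discard(node)
        self._positions = [p for p in self._positions if self._owner[p] != node]
        self._owner = {p: n for p, n in self._owner.items() if n != node}

    def nodes_for(self, key: str) -> List[str]:
        """Walk clockwise from the key's position, collecting distinct nodes.

        Assumption: callers write each value to all returned nodes, so the
        second entry already holds a copy if the first one fails.
        """
        wanted = min(self.replicas, len(self.nodes))
        result: List[str] = []
        start = bisect.bisect(self._positions, _hash(key))
        for step in range(len(self._positions)):
            node = self._owner[self._positions[(start + step) % len(self._positions)]]
            if node not in result:
                result.append(node)
                if len(result) == wanted:
                    break
        return result


if __name__ == "__main__":
    ring = HashRing()
    for name in ("cache-a", "cache-b", "cache-c"):
        ring.add_node(name)
    keys = [f"user:{i}" for i in range(10_000)]
    before = {k: ring.nodes_for(k)[0] for k in keys}

    ring.add_node("cache-d")  # scale out by one node
    moved = sum(1 for k in keys if ring.nodes_for(k)[0] != before[k])
    print(f"primary owner changed for {moved / len(keys):.0%} of keys")

    ring.remove_node("cache-a")  # simulate a node failure
    print(ring.nodes_for("user:42"))
```

With N nodes after a scale-out, consistent hashing moves roughly 1/N of the keys in expectation, and every moved key moves to the new node; plain modulo placement would instead reshuffle most keys.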

00:00 Introduction

00:03 Welcome

00:50 Overview

01:14 Example usage

02:21 Key benefits

03:30 Key components

04:22 Handling data updates

04:55 Cache invalidation

05:23 Write-through

05:46 Write-behind

06:06 Write-event

06:36 Mostly reads

07:12 AWS vs Azure services

08:29 What's next...

INSTRUCTOR BIO:
