filmov

tv

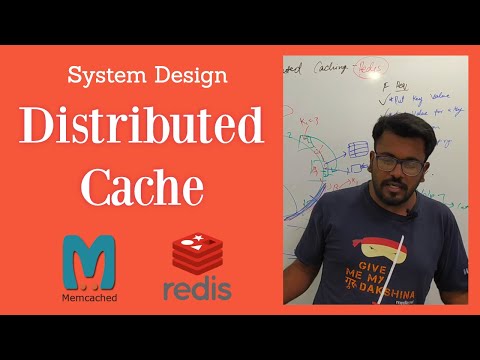

Redis system design | Distributed cache System design

This video explains how to design a distributed cache system like Redis or Memcached.

This is a well-known Amazon interview question.

How should keys be distributed across cache nodes?

Answer: use consistent hashing.
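
To make the consistent hashing answer concrete, here is a minimal Python sketch of a hash ring. It is an illustration under assumptions, not the design shown in the video: the class name `ConsistentHashRing`, the `vnodes` parameter (virtual nodes per server), the node names, and the choice of MD5 as the ring hash are all hypothetical.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Maps keys to cache nodes on a hash ring (hypothetical helper class)."""

    def __init__(self, nodes=(), vnodes=100):
        self.vnodes = vnodes   # assumed number of virtual nodes per server
        self._ring = []        # sorted ring positions
        self._owner = {}       # ring position -> node name
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value):
        # MD5 is used only to spread positions evenly, not for security.
        return int(hashlib.md5(value.encode("utf-8")).hexdigest(), 16)

    def add_node(self, node):
        for i in range(self.vnodes):
            pos = self._hash(f"{node}#{i}")
            if pos in self._owner:
                continue  # skip the (very unlikely) position collision
            bisect.insort(self._ring, pos)
            self._owner[pos] = node

    def remove_node(self, node):
        for i in range(self.vnodes):
            pos = self._hash(f"{node}#{i}")
            if self._owner.get(pos) == node:
                del self._owner[pos]
                self._ring.pop(bisect.bisect_left(self._ring, pos))

    def get_node(self, key):
        if not self._ring:
            raise LookupError("ring has no nodes")
        # Walk clockwise to the first position after the key's hash,
        # wrapping around to the start of the ring.
        idx = bisect.bisect_right(self._ring, self._hash(key)) % len(self._ring)
        return self._owner[self._ring[idx]]


ring = ConsistentHashRing(["cache-a", "cache-b", "cache-c"])
owner_before = ring.get_node("user:42")
ring.remove_node("cache-c")
owner_after = ring.get_node("user:42")
```

When a node is removed, only the keys that node owned move to another node; every other key keeps its owner, which is the property that makes this scheme attractive for a cache cluster.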

Apart from LRU, you can use a Count-Min sketch to estimate how frequently each key is accessed.
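
As a rough illustration of the Count-Min sketch idea, the following Python sketch keeps a small table of counters and answers frequency queries with the minimum counter across rows. The class name `CountMinSketch`, the `width` and `depth` defaults, and the use of salted BLAKE2b as the per-row hash are assumptions made for this example.

```python
import hashlib


class CountMinSketch:
    """Approximate per-key access counts in fixed memory (hypothetical helper)."""

    def __init__(self, width=1024, depth=4):
        self.width = width    # counters per row (assumed default)
        self.depth = depth    # number of independent hash rows (assumed default)
        self._table = [[0] * width for _ in range(depth)]

    def _indexes(self, key):
        # One salted hash per row gives `depth` different column choices.
        for row in range(self.depth):
            digest = hashlib.blake2b(
                key.encode("utf-8"),
                digest_size=8,
                salt=row.to_bytes(8, "little"),
            ).digest()
            yield row, int.from_bytes(digest, "little") % self.width

    def add(self, key, count=1):
        for row, col in self._indexes(key):
            self._table[row][col] += count

    def estimate(self, key):
        # Collisions can only inflate counters, so the minimum across rows
        # is the tightest estimate and never undercounts.
        return min(self._table[row][col] for row, col in self._indexes(key))


sketch = CountMinSketch()
for _ in range(50):
    sketch.add("hot-key")
sketch.add("cold-key")
hot_estimate = sketch.estimate("hot-key")
```

The estimate can be higher than the true count when keys collide, but it is never lower, so a cache can use it to avoid evicting keys that are accessed often.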

----------------------------------------------------------------------------------------------------------------------------------------------------------

LRU Code by Varun Vats:
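
The referenced code is not reproduced on this page. For orientation only, here is a minimal LRU cache sketch in Python; it is not Varun Vats's code, and the class name `LRUCache` and its `get`/`put` methods are assumptions chosen for this example.

```python
from collections import OrderedDict


class LRUCache:
    """Evicts the least recently used entry once capacity is exceeded."""

    def __init__(self, capacity):
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self.capacity = capacity
        self._items = OrderedDict()  # oldest entry first, newest last

    def get(self, key, default=None):
        if key not in self._items:
            return default
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value):
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used


cache = LRUCache(capacity=2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")       # "a" becomes the most recently used entry
cache.put("c", 3)    # capacity exceeded, so "b" is evicted
```

`OrderedDict` keeps both lookup and reordering at constant time, which is the same effect usually achieved by pairing a hash map with a doubly linked list.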

System Design Interview - Distributed Cache

How Distributed Lock works | ft Redis | System Design

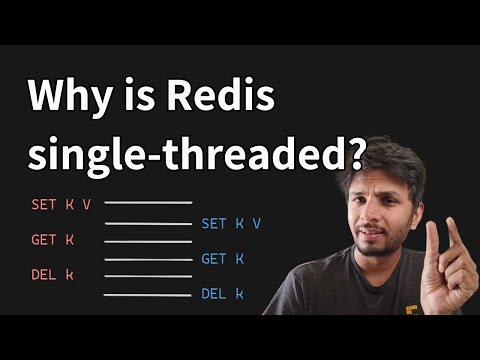

System Design: Why is single-threaded Redis so fast?

What are Distributed CACHES and how do they manage DATA CONSISTENCY?

Top 5 Redis Use Cases

Cache Systems Every Developer Should Know

Building Highly Concurrent, Low Latency Gaming System with Redis

Top 7 Most-Used Distributed System Patterns

Distributed Locks | System design basics

Redis vs. Memcached - Who Wins? | Systems Design Interview 0 to 1 With Ex-Google SWE

2. What Makes Redis Special? | Redis Internals

Redis + Microservices Architecture in 60 Seconds

System Design - Building Distributed Cache like Redis, Memcached | System Design Interview Question

Distributed Cache System Design - Redis or Memcached

System Design : Distributed Database System Key Value Store

System Design Interview - Rate Limiting (local and distributed)

Google SWE teaches systems design | EP26: Redis and Memcached Explained (While Drunk?)

Why is Redis so FAST #javascript #python #web #coding #programming

L15: Distributed System Design Example (Unique ID)

Top Redis Use Cases #javascript #python #web #coding #programming

Redis System design - 1

System Design for Flash Sales: Sharding vs. Zookeeper vs. Redis vs. Kafka

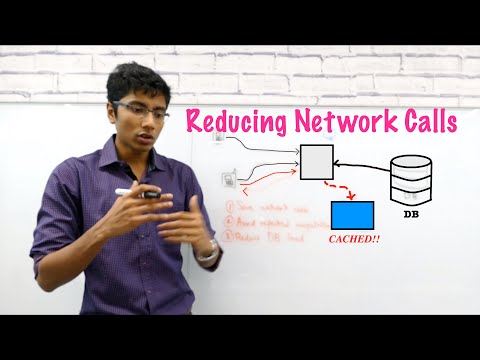

Caching Architectures | Microservices Caching Patterns | System Design Primer | Tech Primers
