Caching in distributed systems: A friendly introduction

Caching is an amazingly effective technique to reduce latency. It helps build scalable, distributed systems.

We first discuss what a cache is and why we use it. We then talk about the key features of a cache in a distributed system.

Cache management matters because it directly affects cache hit ratios and performance. We talk about various scenarios in a distributed environment.

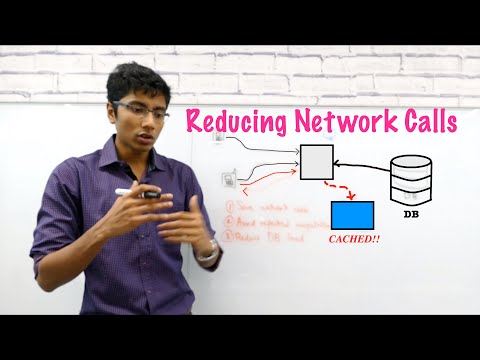

Benefits of a cache:

1. Saves network calls

2. Avoids repeated computations

3. Reduces DB load
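
To make these benefits concrete, here is a minimal sketch of a cache sitting in front of a slow lookup. The names `ReadThroughCache` and `slow_db_lookup` are hypothetical, and the "database" is just a function that records how often it is called. The hit ratio is computed as hits divided by total lookups.

```python
from typing import Callable, Dict, List


class ReadThroughCache:
    """Tiny in-process cache in front of a slow loader (illustrative only)."""

    def __init__(self, loader: Callable[[str], str]) -> None:
        self._loader = loader
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        # Hit: serve from memory, no call to the backing store.
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Miss: pay for the slow call once, then remember the result.
        self.misses += 1
        value = self._loader(key)
        self._store[key] = value
        return value

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


db_calls: List[str] = []


def slow_db_lookup(key: str) -> str:
    """Stand-in for a network call or database query (hypothetical)."""
    db_calls.append(key)
    return f"profile-of-{key}"


cache = ReadThroughCache(slow_db_lookup)
for user in ["alice", "bob", "alice", "alice"]:
    cache.get(user)

print(cache.hit_ratio(), db_calls)
```

Four lookups reach the backing store only twice here; the repeated reads for the same key are served from memory.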

Drawbacks of a cache:

1. Can be expensive to host

2. Potential thrashing

3. Eventual consistency
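
The eventual-consistency drawback can be illustrated with a short sketch. It assumes entries expire after a time-to-live (TTL), which is one common way to bound staleness and is not something the description itself prescribes. `TTLCache`, `database`, and the injected clock are hypothetical names used only for this illustration.

```python
from typing import Callable, Dict, List, Tuple

database: Dict[str, int] = {"price": 100}  # stand-in for the source of truth
now: List[float] = [0.0]                   # fake clock so the demo is deterministic


class TTLCache:
    """Cache whose entries expire after ttl_seconds (illustrative only)."""

    def __init__(self, loader: Callable[[str], int], ttl_seconds: float,
                 clock: Callable[[], float]) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[int, float]] = {}

    def get(self, key: str) -> int:
        current = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > current:
            return entry[0]  # still fresh according to the TTL
        value = self._loader(key)  # expired or missing: reload from the source
        self._entries[key] = (value, current + self._ttl)
        return value


cache = TTLCache(lambda k: database[k], ttl_seconds=30, clock=lambda: now[0])

first = cache.get("price")   # loaded from the database
database["price"] = 120      # the source of truth changes
stale = cache.get("price")   # cache still returns the old value
now[0] = 31.0                # TTL has elapsed
fresh = cache.get("price")   # reloaded, now consistent again
```

Between the update and the expiry, readers see the old value; the cache only catches up once the entry is reloaded.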

Cache Write Policies:

1. Write-through

2. Write-back

3. Write-around
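
The three write policies differ in where a write lands first. The sketch below is a simplified, single-process illustration using plain dictionaries for the cache and the database; `PolicyCache` and its method names are hypothetical, and a real system would also need to handle failures and concurrency.

```python
from typing import Dict, Set


class PolicyCache:
    """Illustrates write-through, write-back and write-around (sketch only)."""

    POLICIES = {"write-through", "write-back", "write-around"}

    def __init__(self, policy: str) -> None:
        if policy not in self.POLICIES:
            raise ValueError(f"unknown policy: {policy}")
        self.policy = policy
        self.cache: Dict[str, str] = {}
        self.db: Dict[str, str] = {}
        self.dirty: Set[str] = set()  # keys written to cache but not yet to db

    def write(self, key: str, value: str) -> None:
        if self.policy == "write-through":
            # Update cache and database together.
            self.cache[key] = value
            self.db[key] = value
        elif self.policy == "write-back":
            # Update the cache now, persist later on flush().
            self.cache[key] = value
            self.dirty.add(key)
        else:
            # Write-around: go straight to the database and drop any cached copy.
            self.db[key] = value
            self.cache.pop(key, None)

    def flush(self) -> None:
        """Persist pending write-back entries to the database."""
        for key in self.dirty:
            self.db[key] = self.cache[key]
        self.dirty.clear()

    def read(self, key: str) -> str:
        if key in self.cache:
            return self.cache[key]
        value = self.db[key]
        self.cache[key] = value  # populate on a miss
        return value


through = PolicyCache("write-through")
through.write("k", "v1")

back = PolicyCache("write-back")
back.write("k", "v1")
pending = "k" not in back.db  # True until flush() runs
back.flush()

around = PolicyCache("write-around")
around.write("k", "v1")
```

Write-back gives the fastest writes but can lose data if the cache fails before a flush; write-around keeps rarely read data out of the cache at the cost of a miss on the first read.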

Cache Replacement Policies:

1. LRU

2. LFU

3. Segmented LRU
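
As an illustration of the first policy, here is a minimal LRU (least recently used) sketch built on Python's `collections.OrderedDict`. The class name `LRUCache` and its methods are hypothetical. LFU and segmented LRU are not shown; they need extra bookkeeping (access counts and multiple segments, respectively).

```python
from collections import OrderedDict
from typing import Any, Hashable, List, Optional


class LRUCache:
    """Fixed-capacity cache that evicts the least recently used key."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._items: "OrderedDict[Hashable, Any]" = OrderedDict()

    def get(self, key: Hashable) -> Optional[Any]:
        if key not in self._items:
            return None
        # Reading a key makes it the most recently used.
        self._items.move_to_end(key)
        return self._items[key]

    def put(self, key: Hashable, value: Any) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self._capacity:
            # The oldest entry sits at the front of the ordered dict.
            self._items.popitem(last=False)

    def keys(self) -> List[Hashable]:
        """Keys ordered from least to most recently used."""
        return list(self._items)


lru = LRUCache(capacity=2)
lru.put("a", 1)
lru.put("b", 2)
lru.get("a")     # "a" becomes most recently used
lru.put("c", 3)  # capacity exceeded, so "b" is evicted
```

After the last call the cache holds "a" and "c"; "b" was evicted because it was the entry touched least recently.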

00:00 What is a cache?

00:20 Caching use cases

03:42 Caching limitations

06:33 Drawbacks

09:42 Cache Placement

#Caching #DistributedSystems #SystemDesign
