filmov.tv

Trade-off between world modeling (predicting) vs agent modeling (acting)


Predicting vs. Acting: A Trade-off Between World Modeling & Agent Modeling

Support my learning journey by clicking the Join button above, becoming a Patreon member, or sending a one-time Venmo!

Discuss this stuff with other Tunadorks on Discord

All my other links


Model Prediction Accuracy and Model Interpretability Trade Off

Prediction Accuracy and Model Interpretability trade off

Bias and Variance, Simplified

Reconciling modern machine learning and the bias-variance trade-off

Machine Learning Tutorial Python - 20: Bias vs Variance In Machine Learning

Bias and Variance for Machine Learning | Deep Learning

Santos Predictive Human Models - A Guide To Task-Focused Trade-Off Analysis

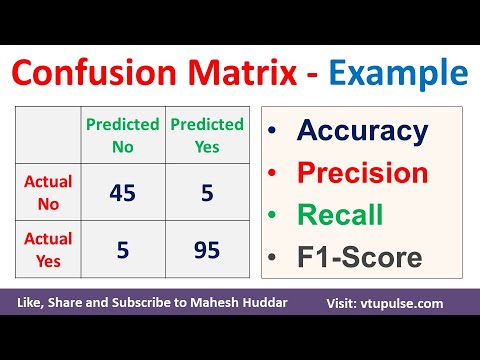

Confusion Matrix Solved Example Accuracy Precision Recall F1 Score Prevalence by Mahesh Huddar

Bias Variance Trade-off Easily Explained | Machine Learning Basics

Bias Variance Trade off | with Salary Prediction Example | bias variance decomposition | ML

Bias Variance Tradeoff | Machine Learning | Data Analytics

Interpretable vs Explainable Machine Learning

OM PriCon2020: The Trade-Offs of Private Prediction - Laurens van der Maaten and Awni Hannun

Inference vs. Prediction: An Overview

Bias Variance Tradeoff

Trading-Off Cost of Deployment Versus Accuracy for Predictive Models

Predict The Stock Market With Machine Learning And Python

8.3 Bias-Variance Decomposition of the Squared Error (L08: Model Evaluation Part 1)

Warren Buffet explains how one could've turned $114 into $400,000 by investing in S&P 500 i...

Bias-Variance In Machine Learning | Bias Variance Trade Off | Machine Learning Training | Edureka

Alberto Bemporad | Embedded Model Predictive Control

Amazon Data Scientist Mock Interview - Fraud Model

The ULTIMATE Supply & Demand Guide (My Secrets)
