Train Large, Then Compress

This video explains a new study on how best to spend a limited compute budget when training models for natural language processing (NLP) tasks. The authors show that large models reach a lower error faster than smaller models, and that stopping training early with a large model achieves better performance than training a smaller model for longer. Larger models do come with an inference bottleneck: they take longer to make predictions, and their weights cost more to store. The authors alleviate this bottleneck by showing that larger models are robust to compression techniques such as quantization and pruning. Thanks for watching, and please subscribe!
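To make the two compression techniques concrete, here is a toy sketch on a single flat list of weights. It is not the authors' implementation, and the description does not say which pruning or quantization variants the paper uses. It assumes plain magnitude pruning (zero out the smallest-magnitude weights) and symmetric per-tensor 8-bit quantization. The function names `magnitude_prune`, `quantize_int8` and `dequantize` are hypothetical and chosen for illustration only.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Hypothetical helper: zero out the `sparsity` fraction of weights with the smallest magnitude."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Hypothetical helper: symmetric per-tensor quantization, w ~= scale * q with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map 8-bit integer levels back to approximate float weights."""
    return [scale * v for v in q]


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.9, 0.01, 0.45, -0.2, 0.07]
    pruned = magnitude_prune(w, sparsity=0.5)  # half the weights become exactly 0.0
    q, scale = quantize_int8(pruned)           # each weight now needs 8 bits instead of 32
    restored = dequantize(q, scale)
    print("pruned:", pruned)
    print("int8 levels:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(pruned, restored)))
```

The rounding error per weight is at most half a quantization step (`scale / 2`). The claim in the video is that a large model's accuracy tolerates this kind of perturbation better than a small model's. A real model would apply these steps layer by layer using a framework's own pruning and quantization utilities rather than Python lists.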

Paper Links:

Train Large, Then Compress

Rethinking Model Size: Train Large, Then Compress with Joseph Gonzalez - #378

Train Big, Compress Smart: New Secrets to Speedy AI

tinyML Talks local Germany Marcus Rueb: Introduction to optimization algorithms to compress neural..

How To INSTANTLY Freeze Water On Impact!

How to Compress Your BERT NLP Models For Very Efficient Inference

Ancient Technique To Burn Fat Fast

How to Stretch the Masseter Muscle - Trigger Point Therapy

How to Compress Your NLP Models for Efficient Inference

Compress Deep Learning models 10,000x with Probabilistic Hash Functions

How to Eliminate Microphone Feedback - As Fast As Possible

How to move a mattress | How to return mattress | How to compress a mattress using a vacuum bag

Ganglion Cyst of Wrist Diagnosis and Treatment Dr Vizniak

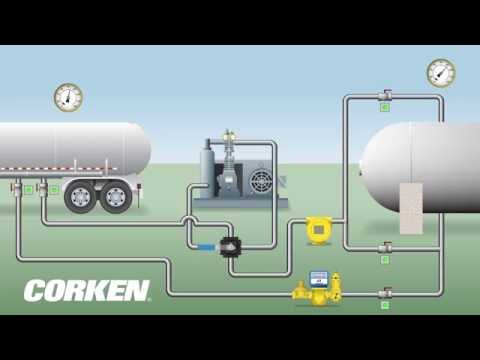

LPG Transport Unloading Application (Liquefied Gas Transfer & Vapor Recovery)

VPTQ - Extreme Low Bit LLM Quantization - Compress 405B, 70B Models

What Is Rib Flare? Watch To Learn How To Fix (Part 1 of 2)

SGD and Weight Decay Secretly Compress Your Neural Network

Gout Attack in the Big Toe Joint & Foot Diet & Treatment *2 MINUTES!*

Elbow Bursitis Treatment at Home - How to Treat Olecranon Bursitis

How to fire up the deepest core muscles (TVA)

Common Mistake in Surgical Drain Handling

How To Make Your Lungs Explode When Scuba Diving

How to Self Adjust Your Big Toe

The Science of Implosion | MythBusters
