R Stats: Multiple Regression - Variable Preparation

Показать описание

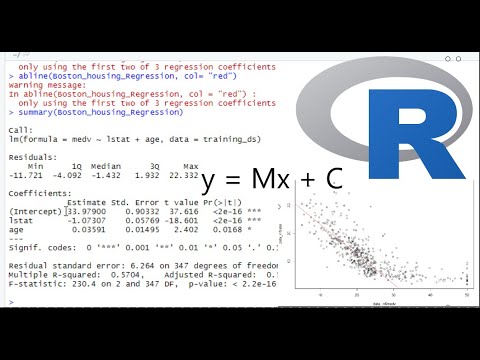

This video gives a quick overview of building a multiple regression model in R to estimate vehicle prices from the vehicles' characteristics. It focuses on preparing variables for a stepwise regression with backward elimination. The lesson explains how to transform highly skewed variables (using a log10 transform) and later report their characteristics, how to check variables for normality, how to detect multicollinearity (using Variance Inflation Factors, VIF), and how to find extreme values (using Cook's distance). The process is guided by measures of model quality, such as the R-squared and adjusted R-squared statistics, and by the variables' p-values, which indicate how statistically significant each coefficient is. As always, the final model is evaluated by calculating the correlation between the predicted and actual vehicle prices for both the training and validation data sets, after reversing the transformations applied earlier to the variables. The explanation is quite informal and avoids the more complex statistical concepts. Note that the visual presentation and interpretation of multiple regression results are covered in the next lesson.
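The diagnostics listed above can be sketched outside R as well. The block below is a minimal Python/NumPy sketch, not the video's R code: it fits ordinary least squares on a log10-transformed target, reports R-squared and adjusted R-squared, computes VIF as 1 / (1 - R_j^2) and Cook's distance from the hat matrix, and then back-transforms predictions with 10**x before correlating them with actual prices on training and validation splits. In R these steps roughly correspond to `lm()`, `car::vif()` and `cooks.distance()`. The data are hypothetical synthetic values (not the UCI vehicle data), the predictor names are illustrative, and the 4/n Cook's distance cutoff is only a common rule of thumb. Backward elimination and p-values are not implemented here.

```python
import numpy as np

def fit_ols(X, y):
    """Ordinary least squares with an intercept; returns coefficients and fitted values."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta, A @ beta

def r_squared(y, y_hat, n_predictors):
    """R-squared and adjusted R-squared (penalised for the number of predictors)."""
    ss_res = np.sum((y - y_hat) ** 2)
    ss_tot = np.sum((y - y.mean()) ** 2)
    r2 = 1 - ss_res / ss_tot
    n = len(y)
    adj = 1 - (1 - r2) * (n - 1) / (n - n_predictors - 1)
    return r2, adj

def vif(X):
    """VIF_j = 1 / (1 - R_j^2), regressing column j on all other columns."""
    out = []
    for j in range(X.shape[1]):
        others = np.delete(X, j, axis=1)
        _, fitted = fit_ols(others, X[:, j])
        r2, _ = r_squared(X[:, j], fitted, others.shape[1])
        out.append(1.0 / (1.0 - r2))
    return np.array(out)

def cooks_distance(X, y):
    """D_i = e_i^2 / (p * s^2) * h_ii / (1 - h_ii)^2, with h_ii from the hat matrix."""
    A = np.column_stack([np.ones(len(y)), X])
    n, p = A.shape
    h = np.diag(A @ np.linalg.pinv(A.T @ A) @ A.T)
    _, y_hat = fit_ols(X, y)
    resid = y - y_hat
    s2 = resid @ resid / (n - p)
    return resid ** 2 / (p * s2) * h / (1 - h) ** 2

def skewness(v):
    """Sample skewness; values far above 0 suggest a log transform may help."""
    z = (v - v.mean()) / v.std()
    return np.mean(z ** 3)

# Hypothetical synthetic data standing in for the vehicle data set.
rng = np.random.default_rng(0)
n = 200
engine_size = rng.uniform(60, 300, n)
horsepower = 0.5 * engine_size + rng.normal(0, 10, n)  # deliberately collinear
curb_weight = rng.uniform(1500, 4000, n)
log_price = 2.5 + 0.003 * engine_size + 0.0002 * curb_weight + rng.normal(0, 0.05, n)
price = 10 ** log_price  # right-skewed on the original scale

X = np.column_stack([engine_size, horsepower, curb_weight])
y = np.log10(price)  # model the log10-transformed target
train, valid = slice(0, 150), slice(150, None)

beta, fitted = fit_ols(X[train], y[train])
r2, adj_r2 = r_squared(y[train], fitted, X.shape[1])
vifs = vif(X[train])
cooks = cooks_distance(X[train], y[train])
influential = np.where(cooks > 4 / len(cooks))[0]  # rule-of-thumb cutoff

def predict(beta, X):
    return beta[0] + X @ beta[1:]

# Back-transform predictions to the price scale before correlating with actual prices.
pred_train = 10 ** predict(beta, X[train])
pred_valid = 10 ** predict(beta, X[valid])
r_train = np.corrcoef(pred_train, price[train])[0, 1]
r_valid = np.corrcoef(pred_valid, price[valid])[0, 1]

print("skew before/after log10:", skewness(price), skewness(y))
print("R2, adjusted R2:", r2, adj_r2)
print("VIF:", vifs)
print("influential rows:", influential)
print("r(train), r(valid):", r_train, r_valid)
```

With the deliberately collinear `horsepower` column, the VIFs of the first two predictors should be large while `curb_weight` stays near 1. This is the kind of signal that would prompt dropping one of the correlated variables during backward elimination.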

The data for this lesson can be obtained from the well-known UCI Machine Learning archives:

The R source code for this video can be found here (some small discrepancies are possible):