Neural networks [5.6] : Restricted Boltzmann machine - persistent CD

Neural networks [5.1] : Restricted Boltzmann machine - definition

Restricted Boltzmann Machines - Ep. 6 (Deep Learning SIMPLIFIED)

Neural networks [5.7] : Restricted Boltzmann machine - example

Neural networks [5.3] : Restricted Boltzmann machine - free energy

Restricted Boltzmann Machine | Neural Network Tutorial | Deep Learning Tutorial | Edureka

Neural networks [5.2] : Restricted Boltzmann machine - inference

Restricted Boltzmann Machines in 60 seconds!

Introduction to Boltzmann Machines

Neural networks [5.5] : Restricted Boltzmann machine - contrastive divergence (parameter update)

But what is a neural network? | Chapter 1, Deep learning

Neural Networks Part 6: Cross Entropy

Deep Learning with Tensorflow - Initializing a Restricted Boltzmann Machine

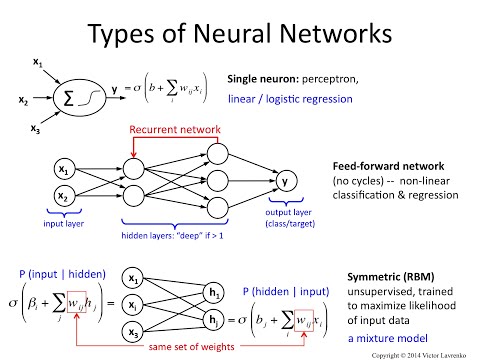

Neural Networks 5: feedforward, recurrent and RBM

Restricted Boltzmann Machines (RBM) - A friendly introduction

#117: Scikit-learn 113:Unsupervised Learning 17:Neural Network Models

What is Boltzmann Machines in Machine Learning?

Deep Learning Part - II (CS7015): Lec 18.3 Restricted Boltzmann Machines

The Boltzmann Machine – The Most Important Energy-Based Neural Network #shorts

Deep Learning with Tensorflow - RBMs and Autoencoders

Deep Learning using a Convolutional Neural Network (5/6): Transfer Learning Technique

Neural Network - Learning Rules 5 - Boltzmann Learning Rule

3 Deep Belief Networks

BOLTZMANN LEARNING
