filmov

tv

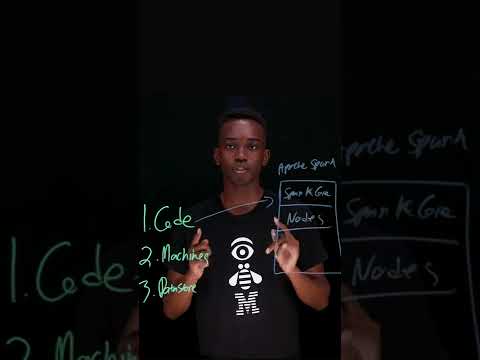

4 Recently asked Pyspark Coding Questions | Apache Spark Interview

I have trained more than 20,000 professionals in the field of Data Engineering over the last 5 years.

Want to master SQL? Learn SQL the right way through the most sought-after course - SQL Champions Program!

"An 8-week program designed to help you crack the interviews of top product-based companies by developing a thought process and an approach to solving an unseen problem."

Here is how you can register for the program -

In this session, I discuss 4 interview questions that were recently asked in PySpark coding interviews.

I am sure this session will help all big data enthusiasts.

#bigdata #dataengineering #pyspark
